XGrammar

XGrammar is another state-of-the-art backend that takes a different approach. You provide your schema as a CFG, a regex, or a JSON schema, and it converts it into a pushdown automaton (PDA), which supports both recursive and non-recursive schemas.

Interestingly, XGrammar sees Outlines-core (pre-compute everything) and llguidance (compute everything at runtime) as two opposite extremes. It implements a middle ground via vocabulary splitting.

Remember our grammar for math expressions:

```
root ::= expr
expr ::= number | "(" expr op expr ")"
op ::= "+" | "-" | "*" | "/"
number ::= [0-9]+
```

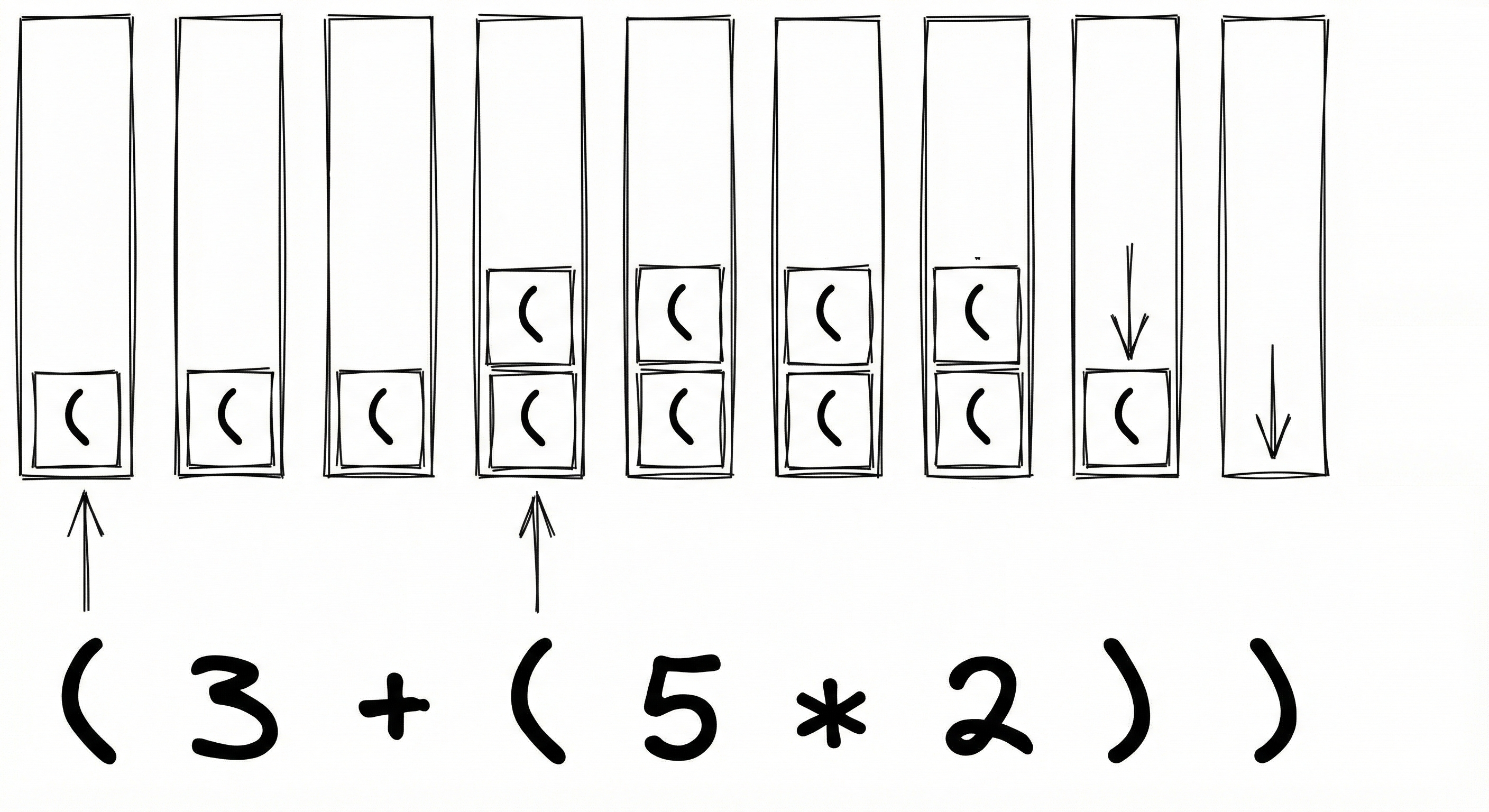

Say an LLM uses it to generate (3+(5*2)) via constrained decoding. The grammar has no whitespace rule, so the expression contains no spaces.
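To make the stack concrete, here is a minimal Python sketch of a character-level PDA for this grammar. It is a toy, not XGrammar's implementation: the position names (`T0`, `T1`, `P1` through `P4`) and the functions `step` and `accepts` are hypothetical. Positions inside the current rule live in `pos`; each `(` pushes the position to resume at after its matching `)`, and each `)` pops it. The grammar as written has no whitespace rule, so the sketch checks the expression without spaces.

```python
# Toy character-level PDA for the math grammar (hypothetical, not XGrammar's API).
# Positions: T0/T1 = before/after the root expr;
# P1..P4 = inside "(" expr op expr ")": after "(", after 1st expr, after op, after 2nd expr.
AFTER_EXPR = {"T0": "T1", "P1": "P2", "P3": "P4"}  # position reached once an expr is complete
OPS = set("+-*/")


def step(pos, in_num, stack, ch):
    """Consume one character. Returns the new (pos, in_num), or None if ch is invalid.
    `stack` holds the positions to resume at after each open "(" is closed."""
    if in_num:
        if ch.isdigit():
            return pos, True
        pos = AFTER_EXPR[pos]  # the number just ended; handle ch at the next position
    if pos in AFTER_EXPR:  # expecting an expr
        if ch.isdigit():
            return pos, True
        if ch == "(":
            stack.append(AFTER_EXPR[pos])  # push: where to resume after the matching ")"
            return "P1", False
        return None
    if pos == "P2" and ch in OPS:
        return "P3", False
    if pos == "P4" and ch == ")":
        return stack.pop(), False  # pop: only the stack knows which rule we return to
    return None


def accepts(text):
    pos, in_num, stack = "T0", False, []
    for ch in text:
        result = step(pos, in_num, stack, ch)
        if result is None:
            return False
        pos, in_num = result
    # Complete if the root expr is finished: a bare number, or the outermost ")" was closed.
    return (pos == "T0" and in_num) or pos == "T1"


print(accepts("(3+(5*2))"))      # True
print(accepts("(3 + (5 * 2))"))  # False: the grammar has no whitespace rule
```

Only `)` ever consults the stack in this sketch; every other character is decided by `pos` alone, which is the property XGrammar's vocabulary split builds on.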

Before decoding starts, XGrammar splits the token vocabulary into two groups:

- Context-Independent Tokens: The validity of these tokens depends on the current state of the automaton but not on the PDA "stack".

- Context-Dependent Tokens: The validity of these tokens depends on the PDA "stack". In our case, only closing parentheses `)` need to check the stack to match the opening parentheses `(` seen earlier.

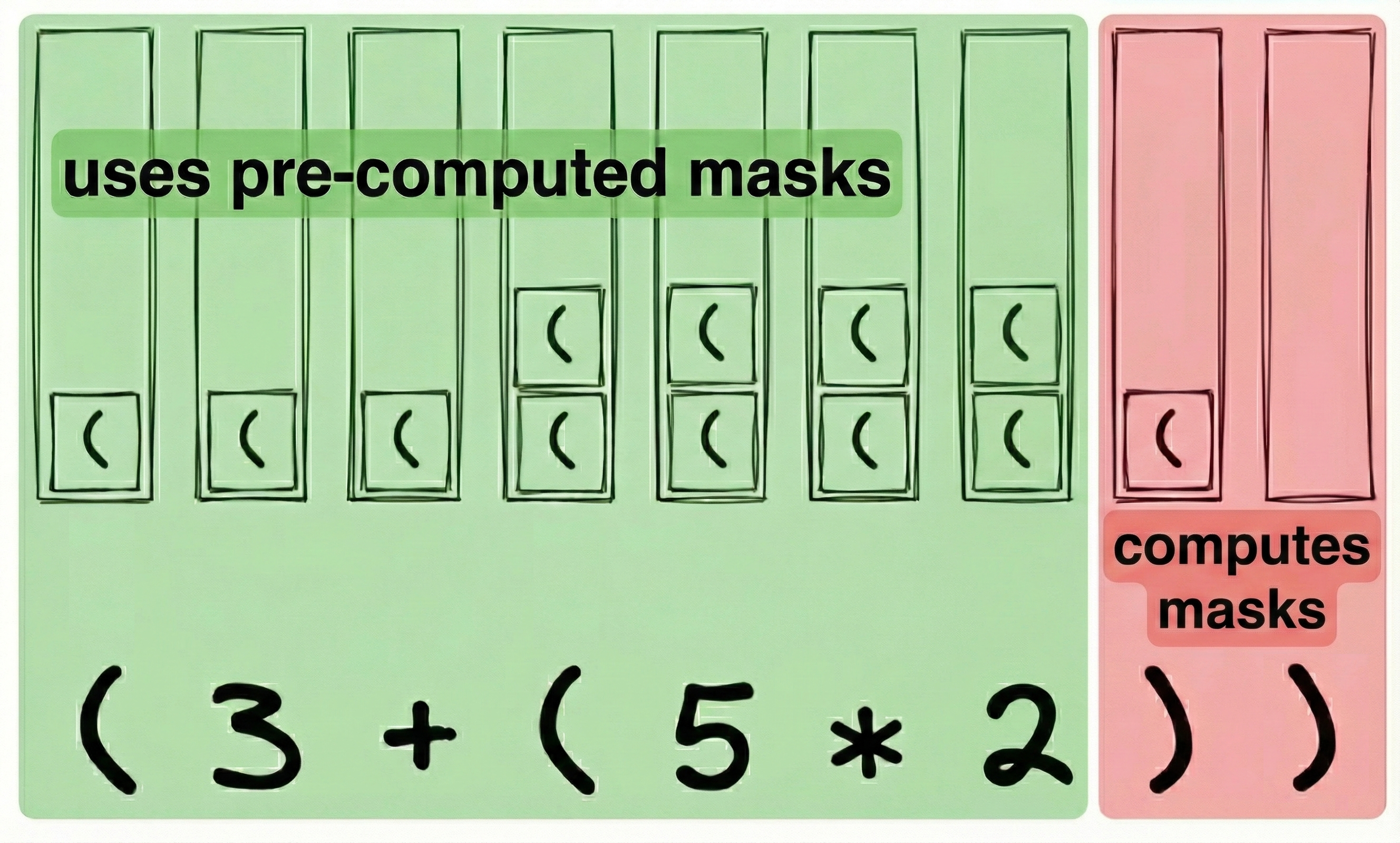
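The split can be sketched on a toy character-level PDA for the grammar above (all names, such as `classify`, `LocalStack`, `OUTER` and the positions `P1` through `P4`, are hypothetical; XGrammar itself works on bytes over a PDA compiled from the grammar). `classify` runs a token from a given state with a local stack that holds only the frames the token pushes itself. If the token closes a parenthesis it did not open and then keeps going, the rest of the check needs the enclosing rule stored on the real stack, so the token is marked context-dependent; otherwise the state alone accepts or rejects it.

```python
# Toy token classifier on a hypothetical character-level PDA (not XGrammar's API).
AFTER_EXPR = {"T0": "T1", "P1": "P2", "P3": "P4"}  # position reached once an expr is complete
OPS = set("+-*/")
OUTER = "OUTER"  # marker: the token closed a "(" it did not open itself


def step(pos, in_num, stack, ch):
    """Consume one character; return the new (pos, in_num), or None if ch is invalid."""
    if in_num:
        if ch.isdigit():
            return pos, True
        pos = AFTER_EXPR[pos]  # the number ended; handle ch at the next position
    if pos in AFTER_EXPR:  # expecting an expr
        if ch.isdigit():
            return pos, True
        if ch == "(":
            stack.append(AFTER_EXPR[pos])
            return "P1", False
        return None
    if pos == "P2" and ch in OPS:
        return "P3", False
    if pos == "P4" and ch == ")":
        return stack.pop(), False
    return None


class LocalStack(list):
    """Holds only the frames pushed by the token being classified.
    Popping past them returns OUTER, since the real frames are unknown here."""

    def pop(self):
        return super().pop() if self else OUTER


def classify(pos, in_num, token):
    """Return 'accept' or 'reject' if the state alone decides, else 'dependent'."""
    stack = LocalStack()
    for ch in token:
        if pos == OUTER:
            return "dependent"  # the rest of the token depends on the enclosing rule
        result = step(pos, in_num, stack, ch)
        if result is None:
            return "reject"
        pos, in_num = result
    return "accept"


VOCAB = ["(", ")", "))", ")+", "+", "3", "2))"]
for state in [("P2", False), ("P3", False), ("P4", False)]:
    print(state, {tok: classify(*state, tok) for tok in VOCAB})
```

At `P4` a lone `)` is accepted from the state alone, because `P4` already implies an open parenthesis; `))` and `)+` are deferred because what may follow the first `)` depends on the rule underneath it.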

In most structured generation tasks, fewer than 1% of tokens are context-dependent. Before it starts generating output, XGrammar acts as an ahead-of-time compiler and writes a set of bytecode instructions. This bytecode is small and self-contained, and contains instructions to do the following:

- For states marking the end of a recursive rule (the "context-dependent" <1%), it writes instructions to compute token masks at runtime, using the PDA stack.

- For all other states (the "context-independent" >99%), it pre-computes token masks, adds them to a lookup table, and writes instructions to retrieve them during output generation.
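The precompute/runtime split can be sketched on the same kind of toy PDA. This is an illustration under stated assumptions, not XGrammar's bytecode: plain Python sets stand in for token bitmasks, and `build_cache`, `runtime_mask`, `check_with_stack` and the position names are hypothetical. `build_cache` classifies every token in every state once, storing the accepted ids and a short list of deferred (context-dependent) ids; `runtime_mask` looks up the stored set and re-checks only the deferred tokens against a copy of the actual stack.

```python
# Hypothetical sketch: precomputed masks plus runtime checks on a toy PDA.
AFTER_EXPR = {"T0": "T1", "P1": "P2", "P3": "P4"}
OPS = set("+-*/")
OUTER = "OUTER"
# Every (position, inside-a-number) state the toy PDA can be in.
STATES = [("T0", False), ("T0", True), ("T1", False), ("P1", False), ("P1", True),
          ("P2", False), ("P3", False), ("P3", True), ("P4", False)]


def step(pos, in_num, stack, ch):
    """Consume one character; return the new (pos, in_num), or None if ch is invalid."""
    if in_num:
        if ch.isdigit():
            return pos, True
        pos = AFTER_EXPR[pos]
    if pos in AFTER_EXPR:
        if ch.isdigit():
            return pos, True
        if ch == "(":
            stack.append(AFTER_EXPR[pos])
            return "P1", False
        return None
    if pos == "P2" and ch in OPS:
        return "P3", False
    if pos == "P4" and ch == ")":
        return stack.pop(), False
    return None


class LocalStack(list):
    """Frames pushed by one token; popping past them yields OUTER (unknown)."""

    def pop(self):
        return super().pop() if self else OUTER


def classify(pos, in_num, token):
    stack = LocalStack()
    for ch in token:
        if pos == OUTER:
            return "dependent"
        result = step(pos, in_num, stack, ch)
        if result is None:
            return "reject"
        pos, in_num = result
    return "accept"


def build_cache(vocab):
    """Ahead of time: per state, store accepted token ids and the deferred (stack-dependent) ids."""
    cache = {}
    for pos, in_num in STATES:
        accepted, deferred = set(), []
        for tid, tok in enumerate(vocab):
            kind = classify(pos, in_num, tok)
            if kind == "accept":
                accepted.add(tid)
            elif kind == "dependent":
                deferred.append(tid)
        cache[(pos, in_num)] = (frozenset(accepted), deferred)
    return cache


def check_with_stack(pos, in_num, stack, token):
    """Runtime: run one deferred token against a copy of the real stack."""
    stack = list(stack)
    for ch in token:
        result = step(pos, in_num, stack, ch)
        if result is None:
            return False
        pos, in_num = result
    return True


def runtime_mask(cache, vocab, pos, in_num, stack):
    """Decode time: look up the precomputed set, then check only the deferred tokens."""
    accepted, deferred = cache[(pos, in_num)]
    return set(accepted) | {t for t in deferred if check_with_stack(pos, in_num, stack, vocab[t])}


VOCAB = ["(", ")", "))", ")+", "+", "3", "2))"]
cache = build_cache(VOCAB)  # once, before decoding
# Same state (P4: expecting ")"), two different stacks:
print(sorted(VOCAB[t] for t in runtime_mask(cache, VOCAB, "P4", False, ["T1", "P4"])))  # [')', '))']
print(sorted(VOCAB[t] for t in runtime_mask(cache, VOCAB, "P4", False, ["T1", "P2"])))  # [')', ')+']
```

For both stacks the precomputed part of the mask is identical; only the two deferred tokens are re-checked, so the runtime work scales with the small context-dependent fraction rather than with the whole vocabulary.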

When to use it?

XGrammar is state-of-the-art for maximizing throughput in high-performance production deployments, with a slight edge over llguidance in time per output token (TPOT). This makes sense: in XGrammar, context-independent states (the majority) behave like Outlines' FSMs and look up a precomputed token mask in O(1), while context-dependent states (the minority) behave like llguidance and compute token masks at runtime.

Limitations

- The bytecode compilation adds upfront overhead and increases time to first token (TTFT). This makes XGrammar slower than llguidance for dynamic schemas, which must be compiled on the fly.

- XGrammar's precomputation strategy works well for most schemas, and masks are typically computed quickly (under ~20 μs). However, for some edge-case schemas, mask computation can take tens or even hundreds of milliseconds.