Libraries

Working with constrained decoding backends can be painful:

-

You use regex and grammars inputs. These are not intuitive to write, read, debug, maintain.

-

You have to handle caching yourself. Caching FSMs / PDAs for new schemas ensures that you pay the time-to-first-token cost only on the first output generation.

-

After constrained decoding, the LLM returns a text that looks like your schema, but you still need to convert it into a type-safe Python object.

-

Backends need token vocabularies to create token masks, and you need to load and connect the exact tokenizer your LLM uses.

-

You have to deep-dive into architecture of inference engines (vLLM, SGLang, Ollama, etc.), figure out how to integrate the backend, and write unique adapters for every engine.

The maintainers of outlines-core and llguidance have libraries that are wrappers over the cosntrained backends. These libraries do the above tasks for the developer, and also provide other useful features to enhance the developer experience.

Outlines

The Outlines Python library is the official wrapper for Outlines-core. It does the tasks listed above for the developer - caches FSMs, converts LLM outputs into type-safe Python objects, and provides plug-and-play integrations with inference engines.

On top of this, it also adds some useful features:

- Templates: Supports Pydantic models and JSON templates as inputs. Under-the-hood, Outlines converts these inputs into regex schema for the outlines-core backend.

\{\s*"store_name":\s*"[^"]+",\s*"store_address":\s*"[^"]+",\s*"store_number":\s*(null|[0-9]+),\s*"items":\s*\[\s*((\{\s*"name":\s*"[^"]+",\s*"quantity":\s*(null|[0-9]+),\s*"price_per_unit":\s*(null|[0-9]+(\.[0-9]+)?),\s*"total_price":\s*(null|[0-9]+(\.[0-9]+)?)\s*\})(,\s*(\{\s*"name":\s*"[^"]+",\s*"quantity":\s*(null|[0-9]+),\s*"price_per_unit":\s*(null|[0-9]+(\.[0-9]+)?),\s*"total_price":\s*(null|[0-9]+(\.[0-9]+)?)\s*\}))*)?\s*\],\s*"tax":\s*(null|[0-9]+(\.[0-9]+)?),\s*"total":\s*(null|[0-9]+(\.[0-9]+)?),\s*"date":\s*(null|"[0-9]{4}-[0-9]{2}-[0-9]{2}"),\s*"payment_method":\s*"(cash|credit|debit|check|other)"\s*\}

class Item(BaseModel):

name: str

quantity: Optional[int]

price_per_unit: Optional[float]

total_price: Optional[float]

class ReceiptSummary(BaseModel):

store_name: str

store_address: str

store_number: Optional[int]

items: List[Item]

tax: Optional[float]

total: Optional[float]

date: Optional[str] = Field(pattern=r'\d{4}-\d{2}-\d{2}', description="Date in the format YYYY-MM-DD")

payment_method: Literal["cash", "credit", "debit", "check", "other"]

As an example, if your regex or grammar doesn't explicitly account for an optional newline or space between JSON keys, the LLM might try to predict a newline or space token, get masked out, and degrade into nonsense output having been derailed from its natural path. Debugging this will have you fighting with whitespace definitions in regex and grammars.

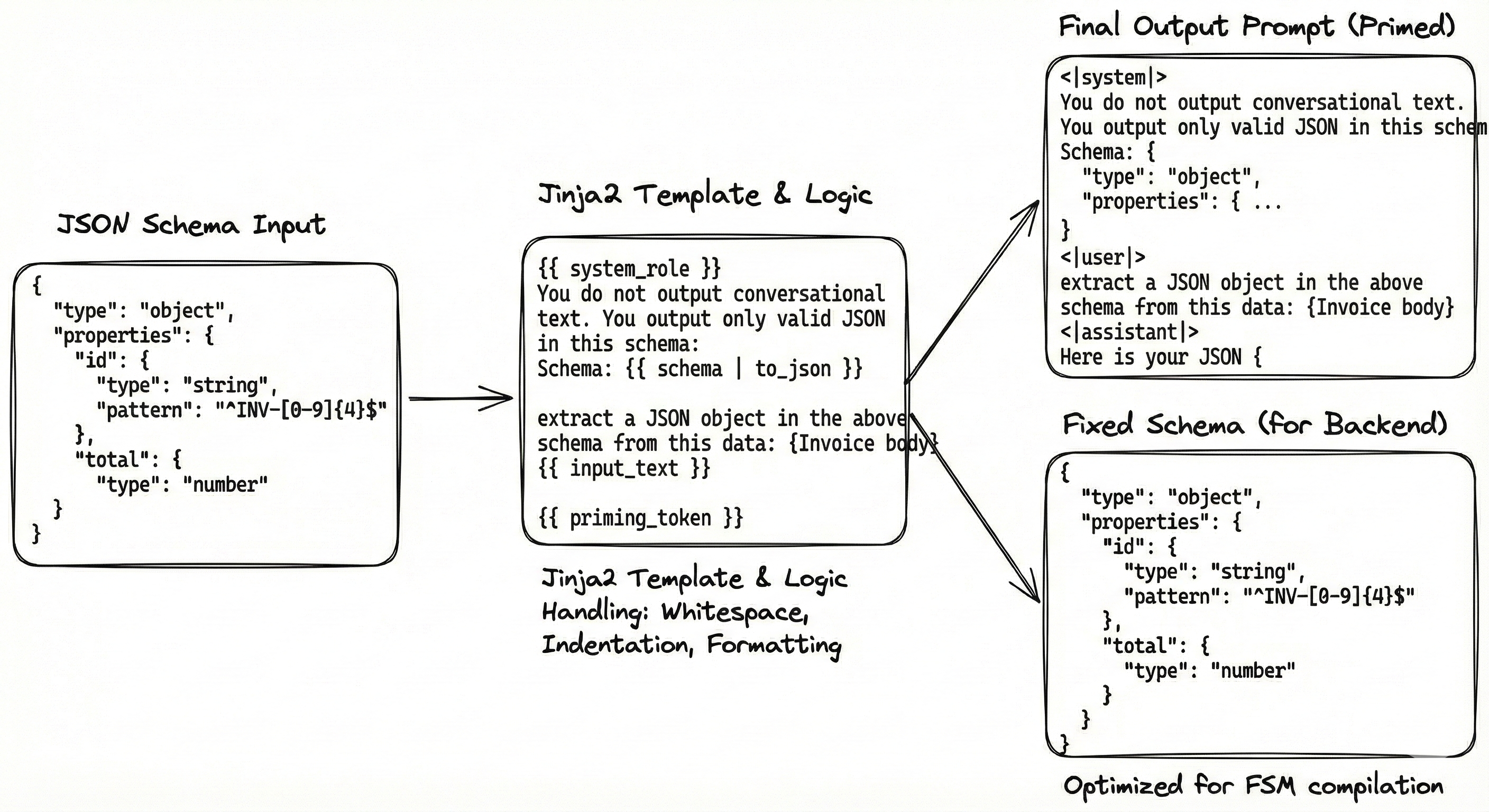

- When you input Pydantic objects or JSON templates, Outlines uses Jinja2, a templating library, to create an underlying prompt with system role, natural language instructions, priming.

-

Simply parsing the LLM output text into JSON object is not enough. Outlines parse the output as JSON object, and ensures specific values within the JSON object are correct data types according to the schema (eg.

30should beintinage: 30). -

Besides inference engines, Outlines also integrates with Hugging face transformers, which is a simple model loader. For single-user tasks and scripts, you can use this setup to get structured LLM outputs without adding an inference engine.

Interestingly, Outlines has recently added support to change the backend from outlines-core to llguidance and XGrammar within the library. But currently, those backend integrations are sub-optimal and you are better off using those backends with a different setup.

Guidance

Guidance is the official Python wrapper for llguidance, and does all of the things Outlines does.

# JSON template for invoices

from guidance import json

schema = {

"type": "object",

"properties": {

"invoice_id": {"type": "string"},

"items": {

"type": "array",

"items": {

"type": "object",

"properties": {

"desc": {"type": "string"},

"qty": {"type": "integer"}

}

}

}

}

}

lm = llama + "Extract invoice data:\n"

lm += json(schema=schema, name="json_data")

# Grammar for math expressions

# Terminals

def INT(name: str | None = None):

# Constrain integers (no sign, no decimals)

return gen(name=name, regex=r"[0-9]+", max_tokens=6)

def OP(ops, name: str | None = None):

# Choose one operator from a list like ["+", "-"]

return select(ops, name=name)

# Nonterminals (recursive grammar)

def factor(max_depth: int, depth: int = 0):

"""

factor := INT | "(" expr ")"

If we hit max_depth, only allow INT to stop recursion.

"""

def _factor(lm):

if depth >= max_depth:

return lm + INT()

return lm + select(

[

INT(),

"(" + expr(max_depth=max_depth, depth=depth + 1) + ")",

]

)

return _factor

def term(max_depth: int, depth: int = 0):

"""

term := factor (("*"|"/") factor)*

"""

def _term(lm):

lm += factor(max_depth=max_depth, depth=depth)

lm += zero_or_more(OP(["*", "/"]) + factor(max_depth=max_depth, depth=depth))

return lm

return _term

def expr(max_depth: int, depth: int = 0):

"""

expr := term (("+"|"-") term)*

"""

def _expr(lm):

lm += term(max_depth=max_depth, depth=depth)

lm += zero_or_more(OP(["+", "-"]) + term(max_depth=max_depth, depth=depth))

return lm

return _expr

lm = models.LlamaCpp(model="/path/to/llama-3-8b.gguf")

lm += "Generate an arithmetic expression:\n"

lm += expr(max_depth=11)

print(str(lm))

But Guidance is more than just the sum of these features. It is a programming paradigm for structured outputs. We'll show what this means.

In standard LLM inference, every request is stateless. You send the prompt, wait, and get the LLM output. Once you get the LLM output, the LLM state (prompt + output + LLM cache) is lost. When you send a new request, there is no way to access or refer the lost LLM state.

To solve this, you have to maintain conversation history on your end, in pairs of [prompt, output] messages (often called [User, Assistant] messages). When you send a new request, you attach the conversation history as a payload. This is the basis of stateful generation, which is what happens in LLM chat assistants like ChatGPT, Gemini, etc. Some inference engines also maintain this conversation history for you and allow you to use it for consecutive structured outputs.

But Guidance makes stateful generation ever so easy. Once you initiate a model in Guidance, it creates an immutable model object. You can now create copies of this model object, and each model object copy will maintain the conversation history on its own.

from guidance import models, gen

llama = models.LlamaCpp("model.gguf")

lm = llama + "The capital of France is " + gen(name="capital")

Logic seamlessly flows between python code and LLM output generations:

lm = llama + "Three synonyms for 'happy':\n"

for i in range(3):

lm += f"{i+1}. " + gen(name="synonyms", list_append=True, stop="\n") + "\n"

This is especially useful for interleaved flows. Say we want to check our emails for invoices. If we find an invoice email, we check a database for the vendor, and if the vendor exists, we approve the invoice.

# Function to check if vendor exists in the database

def check_vendor_database(vendor_name):

known_vendors = fetch_from_database()

return vendor_name in known_vendors

# Classify invoices

lm = llama + f"""

Email: email_subject + email_text + email_attachments

Type: {select(['Invoice', 'Not an invoice'], name='email_type')}

"""

# Interleaved Python Logic

if lm['email_type'] == 'Invoice':

# Extract the vendor name using the model

lm += f"\nVendor: {gen(name='vendor', stop='\n')}"

# PAUSE: Run actual Python code to check our database

# The model effectively "waits" here.

is_known = check_database(lm['vendor'])

# INJECT: We force the result back into the prompt

lm += f"\nVendor Verified: {is_known}"

# RESUME: The model uses this new fact to make a decision

lm += f"\nAction: {select(['Pay', 'Reject'])}"

You can build a library of reusable "prompt components" rather than rewriting strings.

from guidance import guidance

# Define a reusable component

@guidance

def character_card(lm, name, role):

lm += f"""

--- CHARACTER PROFILE ---

Name: {name}

Role: {role}

Backstory: {gen(name='backstory', max_tokens=50)}

-------------------------

"""

return lm

# Use the component dynamically in a loop

party = [('Gimli', 'Dwarf'), ('Legolas', 'Elf')]

for name, role in party:

# The component appends to the state automatically

lm += character_card(name, role)

Chain-of-thought (CoT) helps neutralize any output quality degradation effects caused by the distance between the natural path of the LLM and the path being forced, created because of constrained decoding.

Instead, letting the model output token freely without any constraints improves the actual accuracy of probability distributions. Once, the probability distributions are accurate, we apply constrained decoding to get an accurate answer in the right format.

# We want the answer, but we want the model to show its work first

lm = llama + "Question: What is 42 * 15? "

# Enter a hidden block. Text generated here enters the cache but is hidden from the final 'lm' string representation.

with lm.block(hidden=True):

lm += "Thinking Process: " + gen(name="thoughts")

# The model generates the answer based on the hidden thoughts

lm += "Final Answer: " + gen(name="answer")

# Result: lm['answer'] is accurate, but str(lm) remains clean.

Because the model object is immutable, you can fork the generation process to generate outputs in parallel, without re-processing the prompt history. This is significantly faster than sending three separate requests.

# Shared context

base_state = llama + "The sentiment of this customer review is"

# Branch 1: Ask for a classification

classification = base_state + select([" positive", " negative"])

# Branch 2: Ask for a summary (independent of Branch 1)

summary = base_state + " summarized as follows: " + gen(stop=".")

# The 'base_state' remains clean and reusable for a third branch if needed.

Guidance excels at stateful generation because it uses the llguidance backend, which can generate token masks on-the-fly, without any TTFT costs or overhead for new schemas.