Compact prompts

All tokens in your input are loaded into the LLM in parallel. GPUs are designed to handle parallel processing in a single forward pass. Output tokens are completely different. Each token is generated sequentially, with new tokens depending on all previously generated tokens. Every output token requires a separate forward pass.

You can't parallelize output tokens. The time to generate an output scales roughly linearly with the number of output tokens. It makes sense to start optimizing your output tokens before your input tokens.

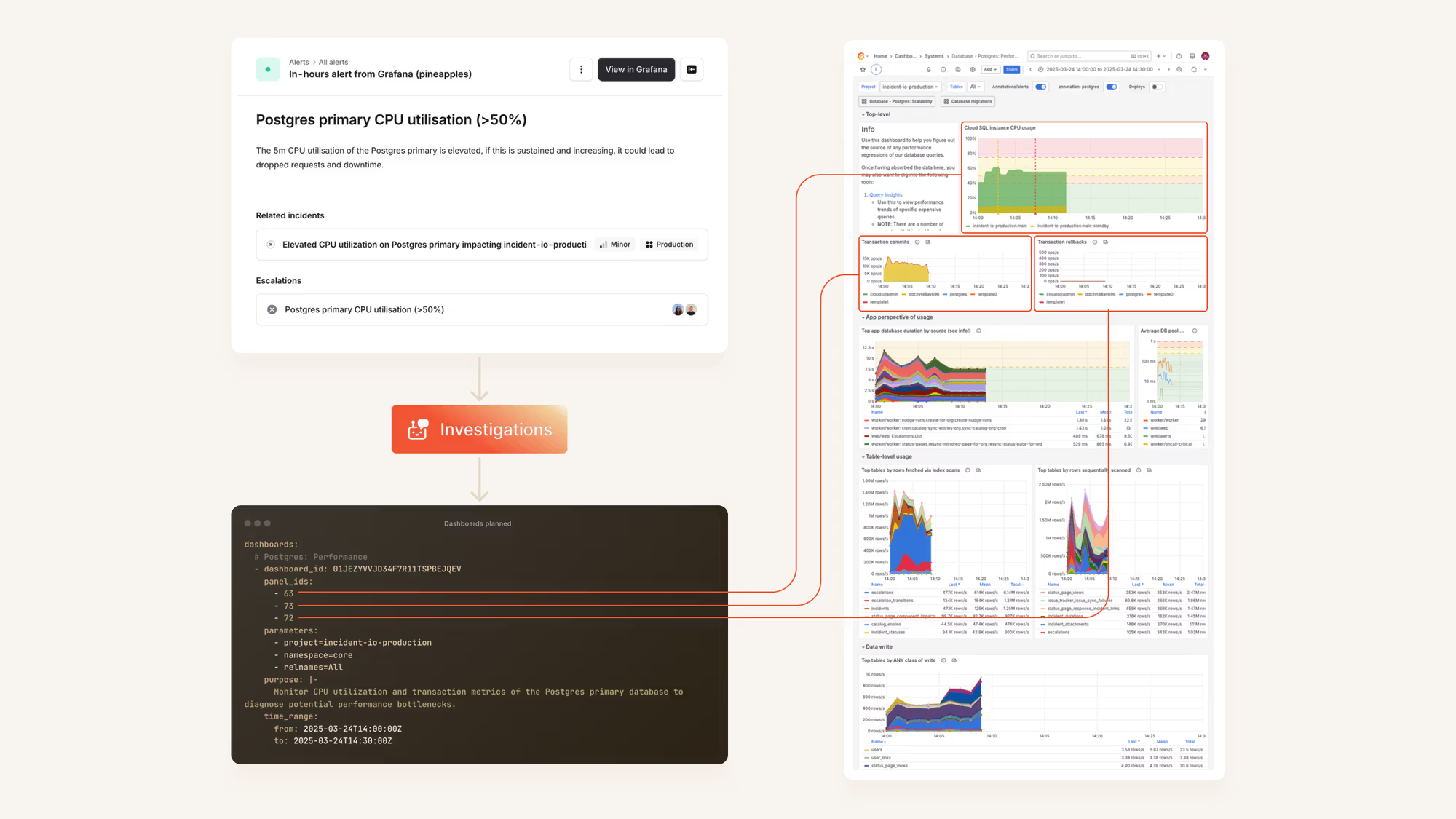

We can take some inspiration from folks at incident.io. They built an agent that opens relevant Grafana dashboards corresponding to an incident.

Initial performance:

- Input: 15,000 tokens (JSON definitions of dashboards).

- Output: 300+ tokens (JSON selection with reasoning).

- Latency: 11 seconds.

Here's how they optimized tokens:

-

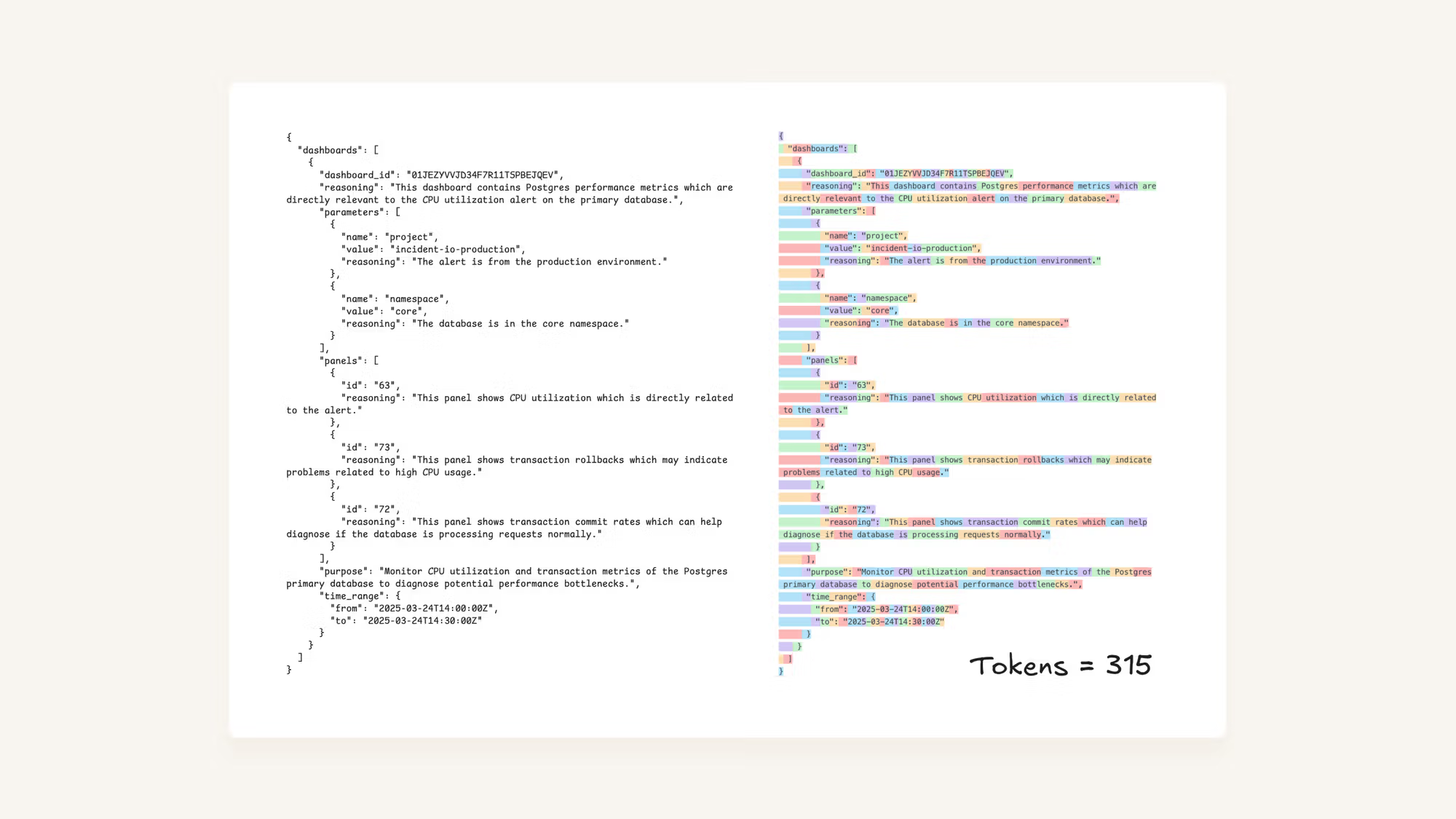

The original prompt asked the model to explain why it chose a specific dashboard. This consumed valuable output tokens. They removed the reasoning field from the JSON schema.

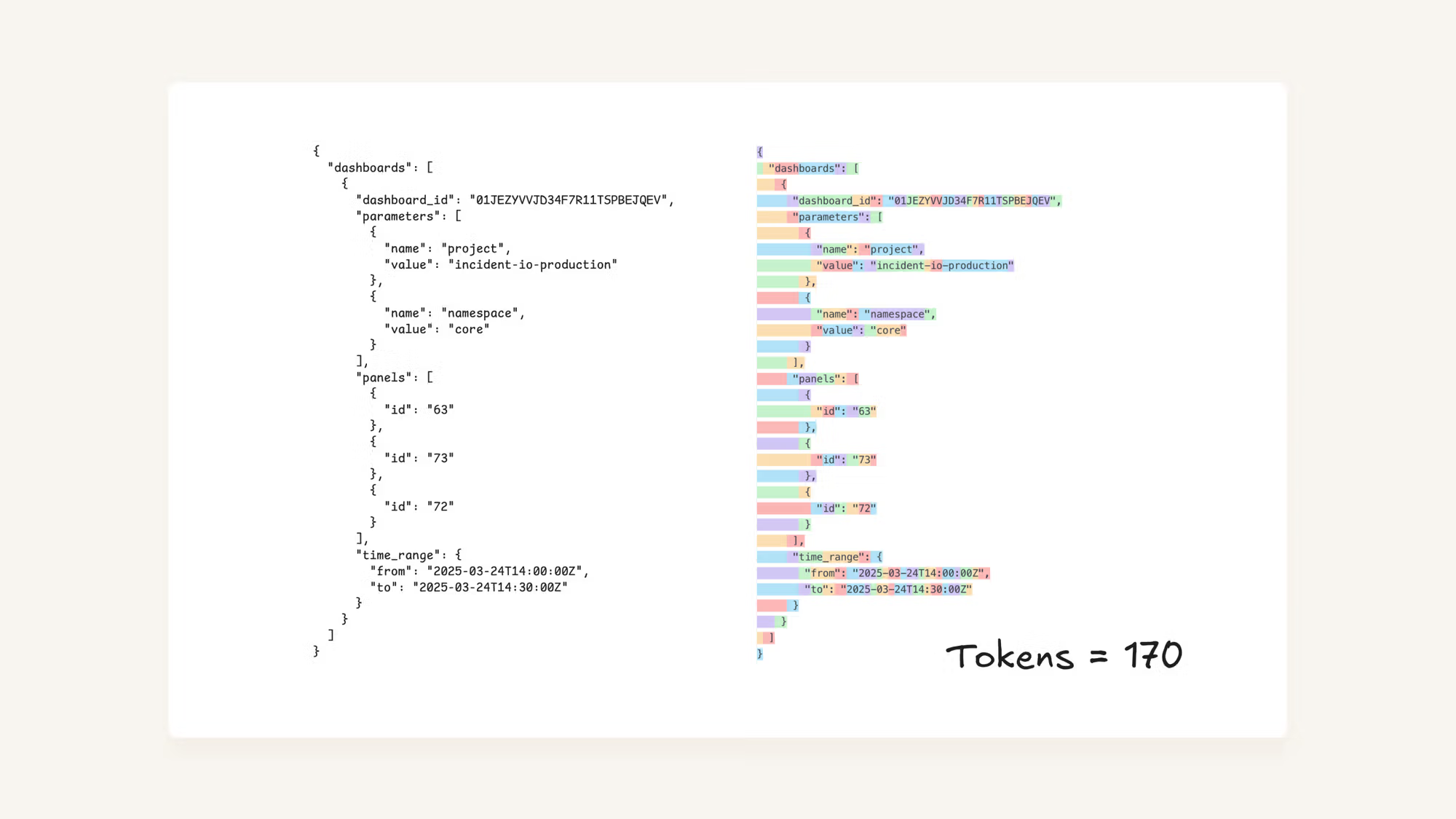

- Output tokens: 315 → 170.

- Latency: 11s → 7s.

- Result: 40% faster.

-

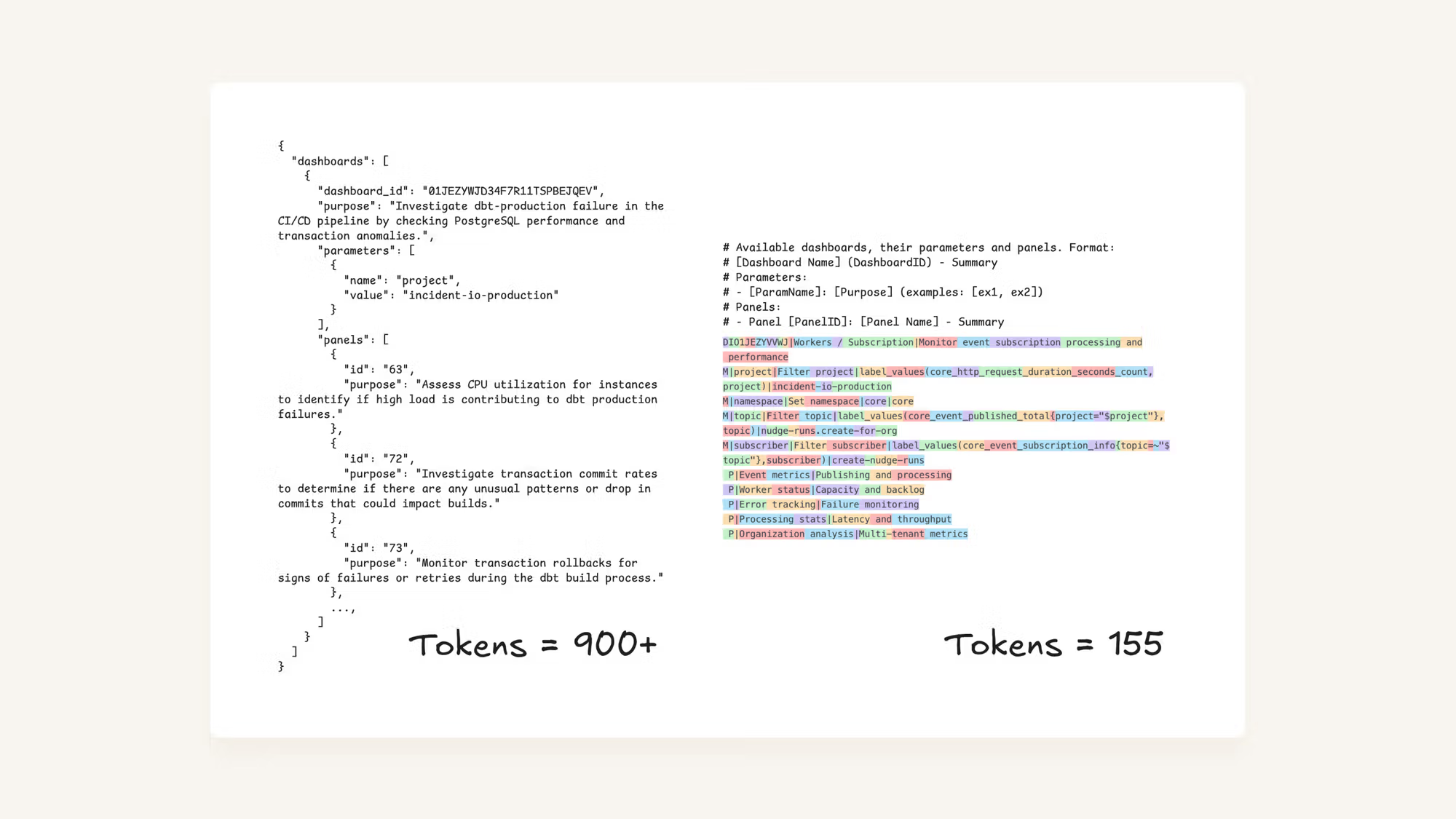

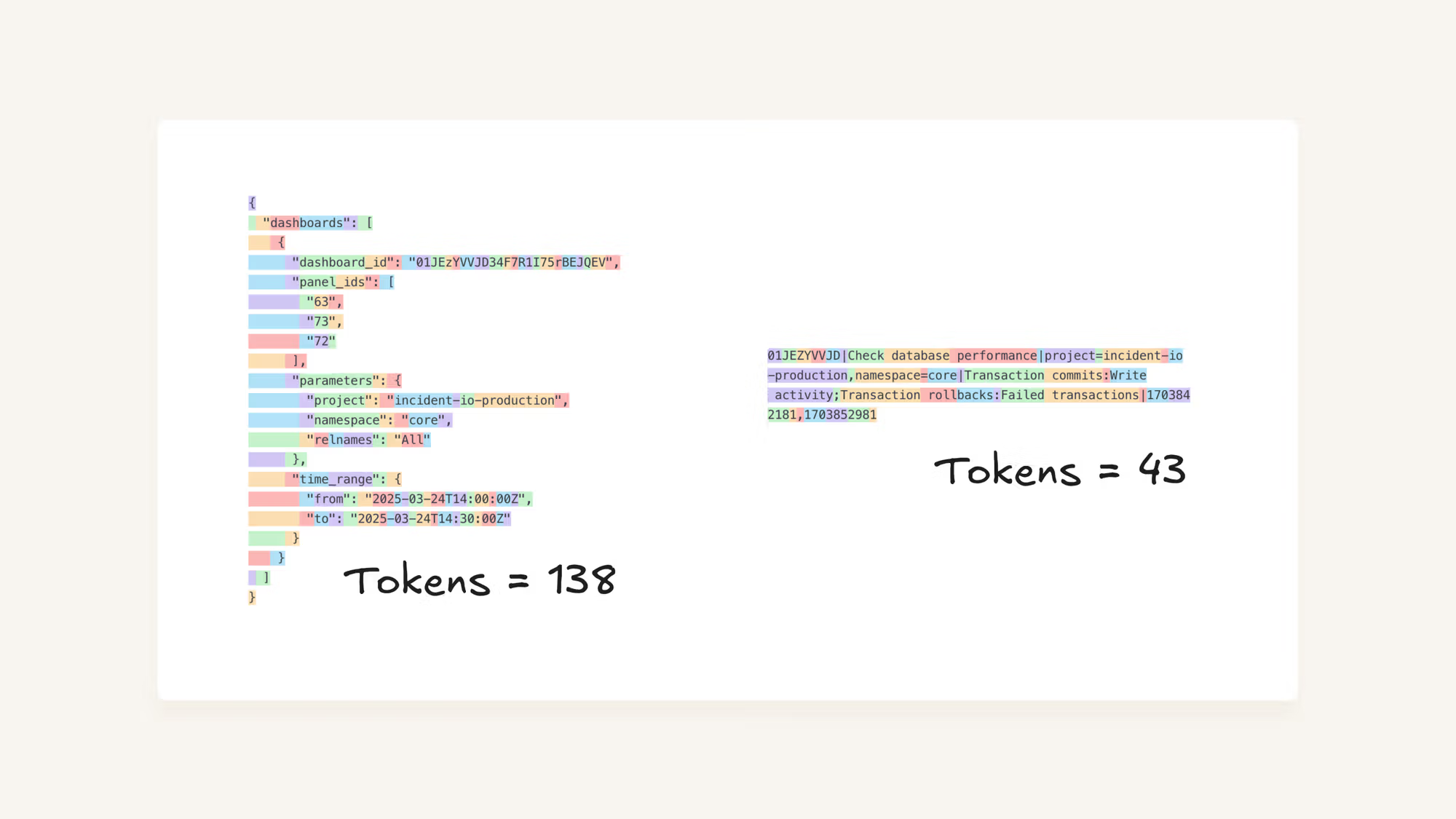

JSON is verbose. It repeats keys and uses excessive punctuation. The team replaced the JSON dashboard definitions with a custom, compact text format.

- Input tokens: 15,000 → 2,000.

- Latency: 7s → 5.7s.

- Result: 20% faster.

-

They instructed the model to output a pipe-delimited custom format instead of JSON.

- Output tokens: 170 → ~50.

- Latency: 5.7s → 2.3s.

- Result: 60% faster.

They were able to reduce latency from 11s to 2.3s. The whole idea was to use compact data representations to reduce the number of input and output tokens.

LLMs are very familiar with JSON format and you need to be careful when opting for a different format. On complex tasks, it can hurt performance in subtle ways.

You can also use existing schemas (like minified JSON) or prompting languages (like BAML) that build compact schemas for you under-the-hood.

Another useful trick is to optimize values in your data that the LLM needs to read and produce.

Say you have a list of messages with UUIDs, and you want to filter the list down to the UUIDs of messages that have a positive sentiment.

The trick is simple:

- Collect all the UUIDs from your input data.

- Find the unique ones and assign them int ids.

- Replace all the UUIDs in your prompt with their corresponding ints.

- Make your LLM request.

- Map all the ints in the response back to their corresponding UUIDs.

Real world comparisons on such tasks show >20% accuracy jump when we shift from UUIDs to ints.

Your data will contain many such values that you can optimize.

Do not optimize values if it causes loss of meaning. eg. mapping locations (London, Paris, Los Angeles) to ints (1, 2, 3) will impair your LLMs knowledge and reasoning.