At its core, LangChain is an innovative framework tailored for crafting applications that leverage the capabilities of language models. It's a toolkit designed for developers to create applications that are context-aware and capable of sophisticated reasoning. This framework is highly relevant when discussing Retrieval-Augmented Generation (RAG), a concept that enhances the effectiveness of language models in generating responses based on retrieved data.

This means LangChain applications can understand the context, such as prompt instructions or content grounding responses and use Large Language Models for complex reasoning tasks, like deciding how to respond or what actions to take. LangChain represents a unified approach to developing intelligent applications, simplifying the journey from concept to execution with its diverse components.

Understanding LangChain

LangChain is much more than just a framework; it's a full-fledged ecosystem comprising several integral parts.

- Firstly, there are the LangChain Libraries, available in both Python and JavaScript. These libraries are the backbone of LangChain, offering interfaces and integrations for various components. They provide a basic runtime for combining these components into cohesive chains and agents, along with ready-made implementations for immediate use.

- Next, we have LangChain Templates. These are a collection of deployable reference architectures tailored for a wide array of tasks. Whether you're building a chatbot or a complex analytical tool, these templates offer a solid starting point. If you're looking for insights into how LangChain can integrate with other systems, including leveraging powerful tools like LLamaIndex, this blog will be of great help.

- LangServe steps in as a versatile library for deploying LangChain chains as REST APIs. This tool is essential for turning your LangChain projects into accessible and scalable web services.

- Lastly, LangSmith serves as a developer platform. It's designed to debug, test, evaluate, and monitor chains built on any LLM framework. The seamless integration with LangChain makes it an indispensable tool for developers aiming to refine and perfect their applications. For more information on how you can streamline and automate your workflows with LLMs, check out our post on Leveraging LLMs to Streamline and Automate Your Workflows.

Together, these components empower you to develop, productionize, and deploy applications with ease. With LangChain, you start by writing your applications using the libraries, referencing templates for guidance. LangSmith then helps you in inspecting, testing, and monitoring your chains, ensuring that your applications are constantly improving and ready for deployment. Finally, with LangServe, you can easily transform any chain into an API, making deployment a breeze.

In the next sections, we will delve deeper into how to set up LangChain and begin your journey in creating intelligent, language model-powered applications.

Installation and Setup

Are you ready to dive into the world of LangChain? Setting it up is straightforward, and this guide will walk you through the process step-by-step.

The first step in your LangChain journey is to install it. You can do this easily using pip or conda. Run the following command in your terminal:

pip install langchain

For those who prefer the latest features and are comfortable with a bit more adventure, you can install LangChain directly from the source. Clone the repository and navigate to the langchain/libs/langchain directory. Then, run:

pip install -e .

For experimental features, consider installing langchain-experimental. It's a package that contains cutting-edge code and is intended for research and experimental purposes. Install it using:

pip install langchain-experimental

LangChain CLI is a handy tool for working with LangChain templates and LangServe projects. To install the LangChain CLI, use:

pip install langchain-cli

LangServe is essential for deploying your LangChain chains as a REST API. It gets installed alongside the LangChain CLI.

LangChain often requires integrations with model providers, data stores, APIs, etc. For this example, we'll use OpenAI's model APIs. Install the OpenAI Python package using:

pip install openai

To access the API, set your OpenAI API key as an environment variable:

export OPENAI_API_KEY="your_api_key"

Alternatively, pass the key directly in your python environment:

import os

os.environ['OPENAI_API_KEY'] = 'your_api_key'

LangChain allows for the creation of language model applications through modules. These modules can either stand alone or be composed for complex use cases. These modules are -

- Model I/O: Facilitates interaction with various language models, handling their inputs and outputs efficiently.

- Retrieval: Enables access to and interaction with application-specific data, crucial for dynamic data utilization.

- Agents: Empower applications to select appropriate tools based on high-level directives, enhancing decision-making capabilities.

- Chains: Offers pre-defined, reusable compositions that serve as building blocks for application development.

- Memory: Maintains application state across multiple chain executions, essential for context-aware interactions.

Each module targets specific development needs, making LangChain a comprehensive toolkit for creating advanced language model applications.

Along with the above components, we also have LangChain Expression Language (LCEL), which is a declarative way to easily compose modules together, and this enables the chaining of components using a universal Runnable interface.

LCEL looks something like this -

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

from langchain.schema import BaseOutputParser

# Example chain

chain = ChatPromptTemplate() | ChatOpenAI() | CustomOutputParser()

Now that we have covered the basics, we will continue on to:

- Dig deeper into each Langchain module in detail.

- Learn how to use LangChain Expression Language.

- Explore common LangChain examples and implement them.

- Explore common use cases

- Deploy an end-to-end application with LangServe.

- Check out LangSmith for debugging, testing, and monitoring.

Let's get started!

Module I : Model I/O

In LangChain, the core element of any application revolves around the language model. This module provides the essential building blocks to interface effectively with any language model, ensuring seamless integration and communication.

Key Components of Model I/O

- LLMs and Chat Models (used interchangeably):

- LLMs:

- Definition: Pure text completion models.

- Input/Output: Take a text string as input and return a text string as output.

- Chat Models

- LLMs:

- Definition: Models that use a language model as a base but differ in input and output formats.

- Input/Output: Accept a list of chat messages as input and return a Chat Message.

- Prompts: Templatize, dynamically select, and manage model inputs. Allows for the creation of flexible and context-specific prompts that guide the language model's responses.

- Output Parsers: Extract and format information from model outputs. Useful for converting the raw output of language models into structured data or specific formats needed by the application.

LLMs

LangChain's integration with Large Language Models (LLMs) like OpenAI, Cohere, and Hugging Face is a fundamental aspect of its functionality. LangChain itself does not host LLMs but offers a uniform interface to interact with various LLMs.

This section provides an overview of using the OpenAI LLM wrapper in LangChain, applicable to other LLM types as well. We have already installed this in the "Getting Started" section. Let us initialize the LLM.

from langchain.llms import OpenAI

llm = OpenAI()

- LLMs implement the Runnable interface, the basic building block of the LangChain Expression Language (LCEL). This means they support

invoke,ainvoke,stream,astream,batch,abatch,astream_logcalls. - LLMs accept strings as inputs, or objects which can be coerced to string prompts, including

List[BaseMessage]andPromptValue. (more on these later)

Let us look at some examples.

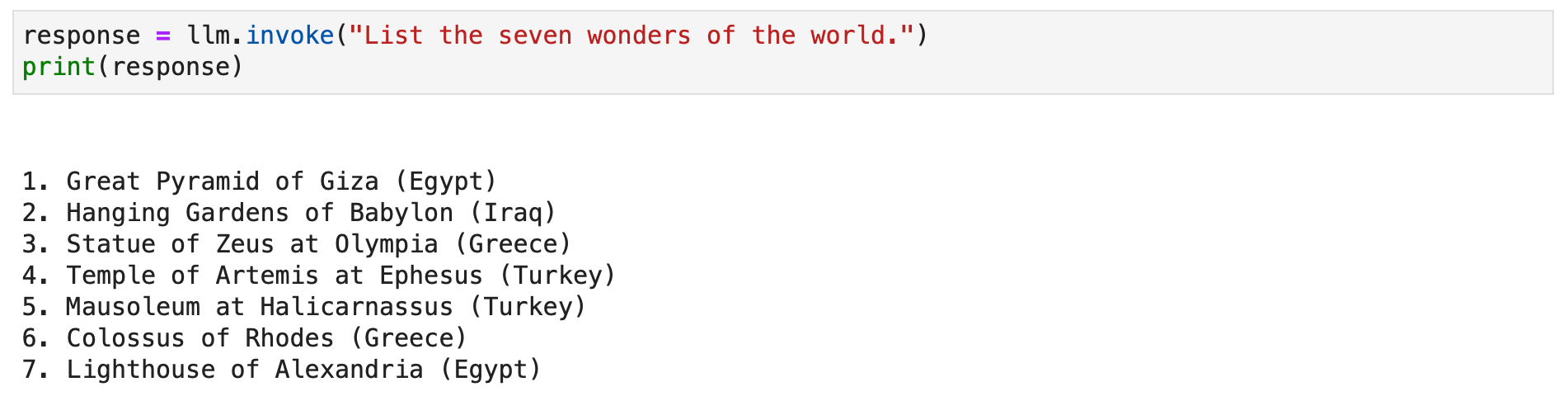

response = llm.invoke("List the seven wonders of the world.")

print(response)

You can alternatively call the stream method to stream the text response.

for chunk in llm.stream("Where were the 2012 Olympics held?"):

print(chunk, end="", flush=True)

Chat Models

LangChain's integration with chat models, a specialized variation of language models, is essential for creating interactive chat applications. While they utilize language models internally, chat models present a distinct interface centered around chat messages as inputs and outputs. This section provides a detailed overview of using OpenAI's chat model in LangChain.

from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI()

Chat models in LangChain work with different message types such as AIMessage, HumanMessage, SystemMessage, FunctionMessage, and ChatMessage (with an arbitrary role parameter). Generally, HumanMessage, AIMessage, and SystemMessage are the most frequently used.

Chat models primarily accept List[BaseMessage] as inputs. Strings can be converted to HumanMessage, and PromptValue is also supported.

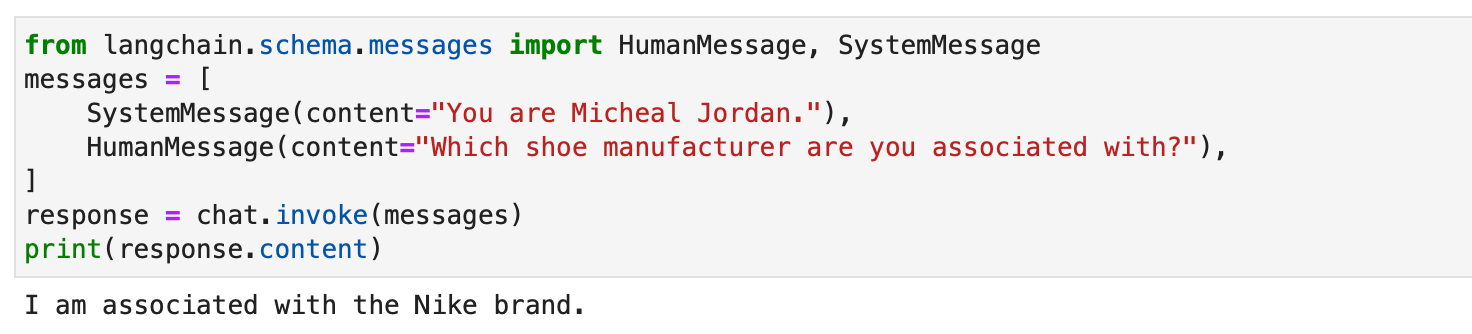

from langchain.schema.messages import HumanMessage, SystemMessage

messages = [

SystemMessage(content="You are Micheal Jordan."),

HumanMessage(content="Which shoe manufacturer are you associated with?"),

]

response = chat.invoke(messages)

print(response.content)

Prompts

Prompts are essential in guiding language models to generate relevant and coherent outputs. They can range from simple instructions to complex few-shot examples. In LangChain, handling prompts can be a very streamlined process, thanks to several dedicated classes and functions.

LangChain's PromptTemplate class is a versatile tool for creating string prompts. It uses Python's str.format syntax, allowing for dynamic prompt generation. You can define a template with placeholders and fill them with specific values as needed.

from langchain.prompts import PromptTemplate

# Simple prompt with placeholders

prompt_template = PromptTemplate.from_template(

"Tell me a {adjective} joke about {content}."

)

# Filling placeholders to create a prompt

filled_prompt = prompt_template.format(adjective="funny", content="robots")

print(filled_prompt)For chat models, prompts are more structured, involving messages with specific roles. LangChain offers ChatPromptTemplate for this purpose.

from langchain.prompts import ChatPromptTemplate

# Defining a chat prompt with various roles

chat_template = ChatPromptTemplate.from_messages(

[

("system", "You are a helpful AI bot. Your name is {name}."),

("human", "Hello, how are you doing?"),

("ai", "I'm doing well, thanks!"),

("human", "{user_input}"),

]

)

# Formatting the chat prompt

formatted_messages = chat_template.format_messages(name="Bob", user_input="What is your name?")

for message in formatted_messages:

print(message)

This approach allows for the creation of interactive, engaging chatbots with dynamic responses.

Both PromptTemplate and ChatPromptTemplate integrate seamlessly with the LangChain Expression Language (LCEL), enabling them to be part of larger, complex workflows. We will discuss more on this later.

Custom prompt templates are sometimes essential for tasks requiring unique formatting or specific instructions. Creating a custom prompt template involves defining input variables and a custom formatting method. This flexibility allows LangChain to cater to a wide array of application-specific requirements. Read more here.

LangChain also supports few-shot prompting, enabling the model to learn from examples. This feature is vital for tasks requiring contextual understanding or specific patterns. Few-shot prompt templates can be built from a set of examples or by utilizing an Example Selector object. Read more here.

Output Parsers

Output parsers play a crucial role in Langchain, enabling users to structure the responses generated by language models. In this section, we will explore the concept of output parsers and provide code examples using Langchain's PydanticOutputParser, SimpleJsonOutputParser, CommaSeparatedListOutputParser, DatetimeOutputParser, and XMLOutputParser.

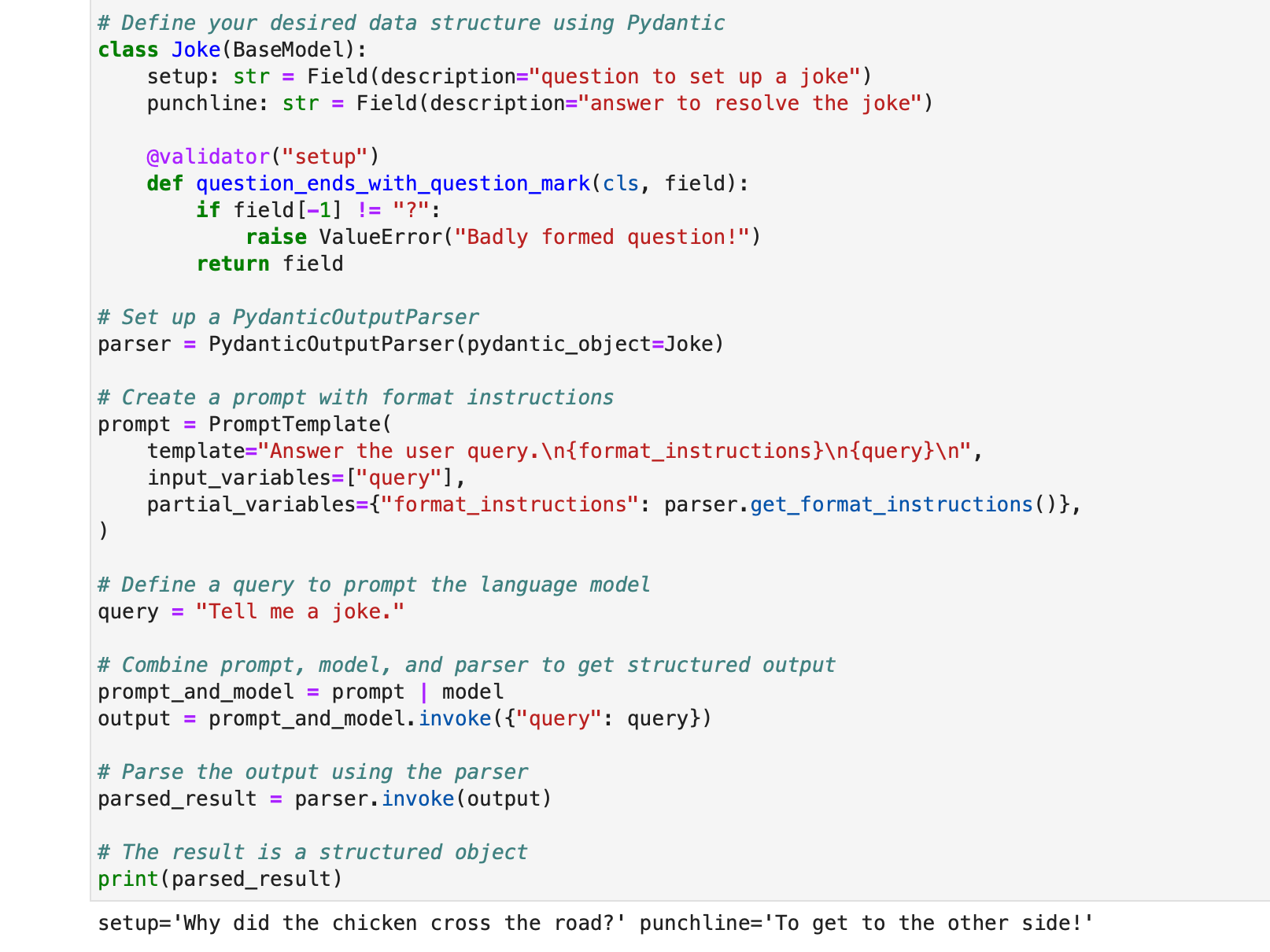

PydanticOutputParser

Langchain provides the PydanticOutputParser for parsing responses into Pydantic data structures. Below is a step-by-step example of how to use it:

from typing import List

from langchain.llms import OpenAI

from langchain.output_parsers import PydanticOutputParser

from langchain.prompts import PromptTemplate

from langchain.pydantic_v1 import BaseModel, Field, validator

# Initialize the language model

model = OpenAI(model_name="text-davinci-003", temperature=0.0)

# Define your desired data structure using Pydantic

class Joke(BaseModel):

setup: str = Field(description="question to set up a joke")

punchline: str = Field(description="answer to resolve the joke")

@validator("setup")

def question_ends_with_question_mark(cls, field):

if field[-1] != "?":

raise ValueError("Badly formed question!")

return field

# Set up a PydanticOutputParser

parser = PydanticOutputParser(pydantic_object=Joke)

# Create a prompt with format instructions

prompt = PromptTemplate(

template="Answer the user query.\n{format_instructions}\n{query}\n",

input_variables=["query"],

partial_variables={"format_instructions": parser.get_format_instructions()},

)

# Define a query to prompt the language model

query = "Tell me a joke."

# Combine prompt, model, and parser to get structured output

prompt_and_model = prompt | model

output = prompt_and_model.invoke({"query": query})

# Parse the output using the parser

parsed_result = parser.invoke(output)

# The result is a structured object

print(parsed_result)

The output will be:

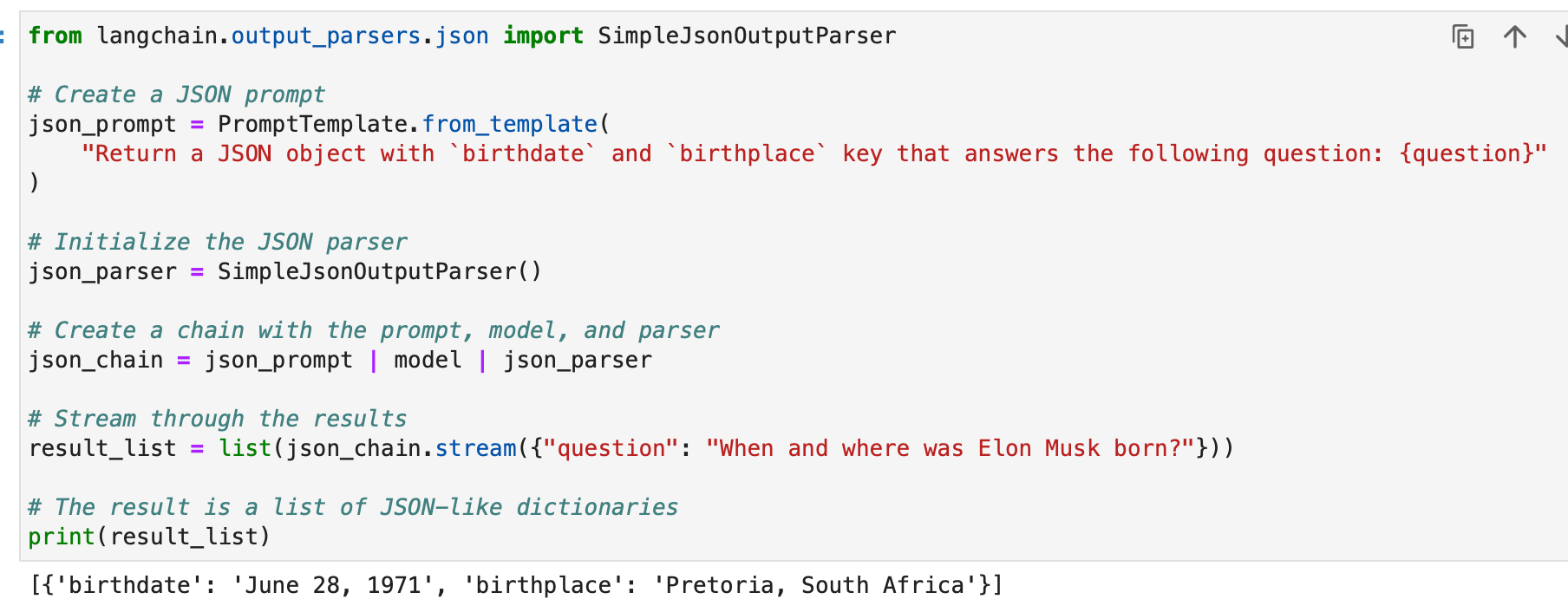

SimpleJsonOutputParser

Langchain's SimpleJsonOutputParser is used when you want to parse JSON-like outputs. Here's an example:

from langchain.output_parsers.json import SimpleJsonOutputParser

# Create a JSON prompt

json_prompt = PromptTemplate.from_template(

"Return a JSON object with `birthdate` and `birthplace` key that answers the following question: {question}"

)

# Initialize the JSON parser

json_parser = SimpleJsonOutputParser()

# Create a chain with the prompt, model, and parser

json_chain = json_prompt | model | json_parser

# Stream through the results

result_list = list(json_chain.stream({"question": "When and where was Elon Musk born?"}))

# The result is a list of JSON-like dictionaries

print(result_list)

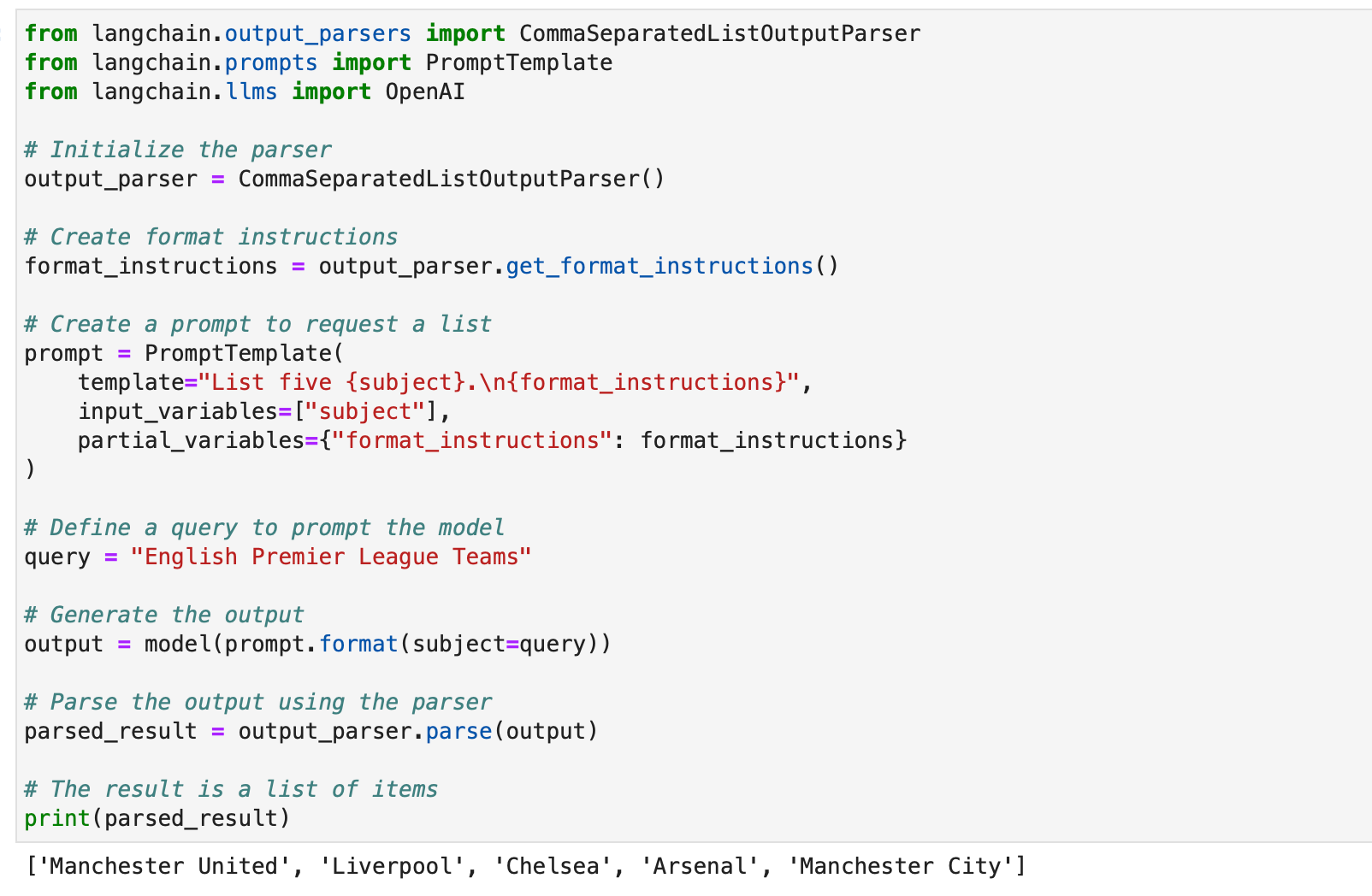

CommaSeparatedListOutputParser

The CommaSeparatedListOutputParser is handy when you want to extract comma-separated lists from model responses. Here's an example:

from langchain.output_parsers import CommaSeparatedListOutputParser

from langchain.prompts import PromptTemplate

from langchain.llms import OpenAI

# Initialize the parser

output_parser = CommaSeparatedListOutputParser()

# Create format instructions

format_instructions = output_parser.get_format_instructions()

# Create a prompt to request a list

prompt = PromptTemplate(

template="List five {subject}.\n{format_instructions}",

input_variables=["subject"],

partial_variables={"format_instructions": format_instructions}

)

# Define a query to prompt the model

query = "English Premier League Teams"

# Generate the output

output = model(prompt.format(subject=query))

# Parse the output using the parser

parsed_result = output_parser.parse(output)

# The result is a list of items

print(parsed_result)

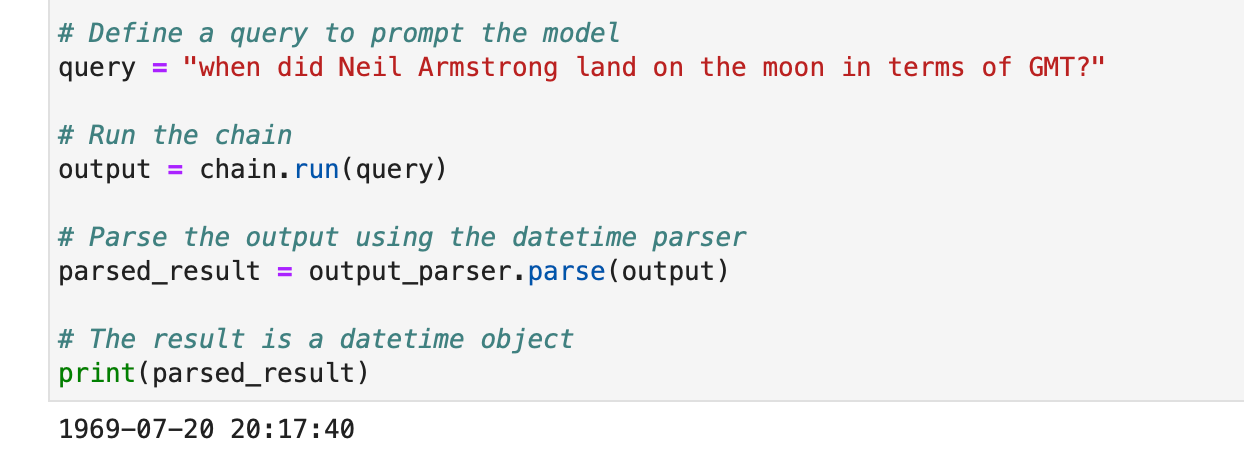

DatetimeOutputParser

Langchain's DatetimeOutputParser is designed to parse datetime information. Here's how to use it:

from langchain.prompts import PromptTemplate

from langchain.output_parsers import DatetimeOutputParser

from langchain.chains import LLMChain

from langchain.llms import OpenAI

# Initialize the DatetimeOutputParser

output_parser = DatetimeOutputParser()

# Create a prompt with format instructions

template = """

Answer the user's question:

{question}

{format_instructions}

"""

prompt = PromptTemplate.from_template(

template,

partial_variables={"format_instructions": output_parser.get_format_instructions()},

)

# Create a chain with the prompt and language model

chain = LLMChain(prompt=prompt, llm=OpenAI())

# Define a query to prompt the model

query = "when did Neil Armstrong land on the moon in terms of GMT?"

# Run the chain

output = chain.run(query)

# Parse the output using the datetime parser

parsed_result = output_parser.parse(output)

# The result is a datetime object

print(parsed_result)

These examples showcase how Langchain's output parsers can be used to structure various types of model responses, making them suitable for different applications and formats. Output parsers are a valuable tool for enhancing the usability and interpretability of language model outputs in Langchain.

Module II : Retrieval

Retrieval in LangChain plays a crucial role in applications that require user-specific data, not included in the model's training set. This process, known as Retrieval Augmented Generation (RAG), involves fetching external data and integrating it into the language model's generation process. LangChain provides a comprehensive suite of tools and functionalities to facilitate this process, catering to both simple and complex applications.

LangChain achieves retrieval through a series of components which we will discuss one by one.

Document Loaders

Document loaders in LangChain enable the extraction of data from various sources. With over 100 loaders available, they support a range of document types, apps and sources (private s3 buckets, public websites, databases).

You can choose a document loader based on your requirements here.

All these loaders ingest data into Document classes. We'll learn how to use data ingested into Document classes later.

Text File Loader: Load a simple .txt file into a document.

from langchain.document_loaders import TextLoader

loader = TextLoader("./sample.txt")

document = loader.load()

CSV Loader: Load a CSV file into a document.

from langchain.document_loaders.csv_loader import CSVLoader

loader = CSVLoader(file_path='./example_data/sample.csv')

documents = loader.load()

We can choose to customize the parsing by specifying field names -

loader = CSVLoader(file_path='./example_data/mlb_teams_2012.csv', csv_args={

'delimiter': ',',

'quotechar': '"',

'fieldnames': ['MLB Team', 'Payroll in millions', 'Wins']

})

documents = loader.load()

PDF Loaders: PDF Loaders in LangChain offer various methods for parsing and extracting content from PDF files. Each loader caters to different requirements and uses different underlying libraries. Below are detailed examples for each loader.

PyPDFLoader is used for basic PDF parsing.

from langchain.document_loaders import PyPDFLoader

loader = PyPDFLoader("example_data/layout-parser-paper.pdf")

pages = loader.load_and_split()

MathPixLoader is ideal for extracting mathematical content and diagrams.

from langchain.document_loaders import MathpixPDFLoader

loader = MathpixPDFLoader("example_data/math-content.pdf")

data = loader.load()

PyMuPDFLoader is fast and includes detailed metadata extraction.

from langchain.document_loaders import PyMuPDFLoader

loader = PyMuPDFLoader("example_data/layout-parser-paper.pdf")

data = loader.load()

# Optionally pass additional arguments for PyMuPDF's get_text() call

data = loader.load(option="text")

PDFMiner Loader is used for more granular control over text extraction.

from langchain.document_loaders import PDFMinerLoader

loader = PDFMinerLoader("example_data/layout-parser-paper.pdf")

data = loader.load()

AmazonTextractPDFParser utilizes AWS Textract for OCR and other advanced PDF parsing features.

from langchain.document_loaders import AmazonTextractPDFLoader

# Requires AWS account and configuration

loader = AmazonTextractPDFLoader("example_data/complex-layout.pdf")

documents = loader.load()

PDFMinerPDFasHTMLLoader generates HTML from PDF for semantic parsing.

from langchain.document_loaders import PDFMinerPDFasHTMLLoader

loader = PDFMinerPDFasHTMLLoader("example_data/layout-parser-paper.pdf")

data = loader.load()

PDFPlumberLoader provides detailed metadata and supports one document per page.

from langchain.document_loaders import PDFPlumberLoader

loader = PDFPlumberLoader("example_data/layout-parser-paper.pdf")

data = loader.load()

Integrated Loaders: LangChain offers a wide variety of custom loaders to directly load data from your apps (such as Slack, Sigma, Notion, Confluence, Google Drive and many more) and databases and use them in LLM applications.

The complete list is here.

Below are a couple of examples to illustrate this -

Example I - Slack

Slack, a widely-used instant messaging platform, can be integrated into LLM workflows and applications.

- Go to your Slack Workspace Management page.

- Navigate to

{your_slack_domain}.slack.com/services/export. - Select the desired date range and initiate the export.

- Slack notifies via email and DM once the export is ready.

- The export results in a

.zipfile located in your Downloads folder or your designated download path. - Assign the path of the downloaded

.zipfile toLOCAL_ZIPFILE. - Use the

SlackDirectoryLoaderfrom thelangchain.document_loaderspackage.

from langchain.document_loaders import SlackDirectoryLoader

SLACK_WORKSPACE_URL = "https://xxx.slack.com" # Replace with your Slack URL

LOCAL_ZIPFILE = "" # Path to the Slack zip file

loader = SlackDirectoryLoader(LOCAL_ZIPFILE, SLACK_WORKSPACE_URL)

docs = loader.load()

print(docs)

Example II - Figma

Figma, a popular tool for interface design, offers a REST API for data integration.

- Obtain the Figma file key from the URL format:

https://www.figma.com/file/{filekey}/sampleFilename. - Node IDs are found in the URL parameter

?node-id={node_id}. - Generate an access token following instructions at the Figma Help Center.

- The

FigmaFileLoaderclass fromlangchain.document_loaders.figmais used to load Figma data. - Various LangChain modules like

CharacterTextSplitter,ChatOpenAI, etc., are employed for processing.

import os

from langchain.document_loaders.figma import FigmaFileLoader

from langchain.text_splitter import CharacterTextSplitter

from langchain.chat_models import ChatOpenAI

from langchain.indexes import VectorstoreIndexCreator

from langchain.chains import ConversationChain, LLMChain

from langchain.memory import ConversationBufferWindowMemory

from langchain.prompts.chat import ChatPromptTemplate, SystemMessagePromptTemplate, AIMessagePromptTemplate, HumanMessagePromptTemplate

figma_loader = FigmaFileLoader(

os.environ.get("ACCESS_TOKEN"),

os.environ.get("NODE_IDS"),

os.environ.get("FILE_KEY"),

)

index = VectorstoreIndexCreator().from_loaders([figma_loader])

figma_doc_retriever = index.vectorstore.as_retriever()

- The

generate_codefunction uses the Figma data to create HTML/CSS code. - It employs a templated conversation with a GPT-based model.

def generate_code(human_input):

# Template for system and human prompts

system_prompt_template = "Your coding instructions..."

human_prompt_template = "Code the {text}. Ensure it's mobile responsive"

# Creating prompt templates

system_message_prompt = SystemMessagePromptTemplate.from_template(system_prompt_template)

human_message_prompt = HumanMessagePromptTemplate.from_template(human_prompt_template)

# Setting up the AI model

gpt_4 = ChatOpenAI(temperature=0.02, model_name="gpt-4")

# Retrieving relevant documents

relevant_nodes = figma_doc_retriever.get_relevant_documents(human_input)

# Generating and formatting the prompt

conversation = [system_message_prompt, human_message_prompt]

chat_prompt = ChatPromptTemplate.from_messages(conversation)

response = gpt_4(chat_prompt.format_prompt(context=relevant_nodes, text=human_input).to_messages())

return response

# Example usage

response = generate_code("page top header")

print(response.content)

- The

generate_codefunction, when executed, returns HTML/CSS code based on the Figma design input.

Let us now use our knowledge to create a few document sets.

We first load a PDF, the BCG annual sustainability report.

We use the PyPDFLoader for this.

from langchain.document_loaders import PyPDFLoader

loader = PyPDFLoader("bcg-2022-annual-sustainability-report-apr-2023.pdf")

pdfpages = loader.load_and_split()

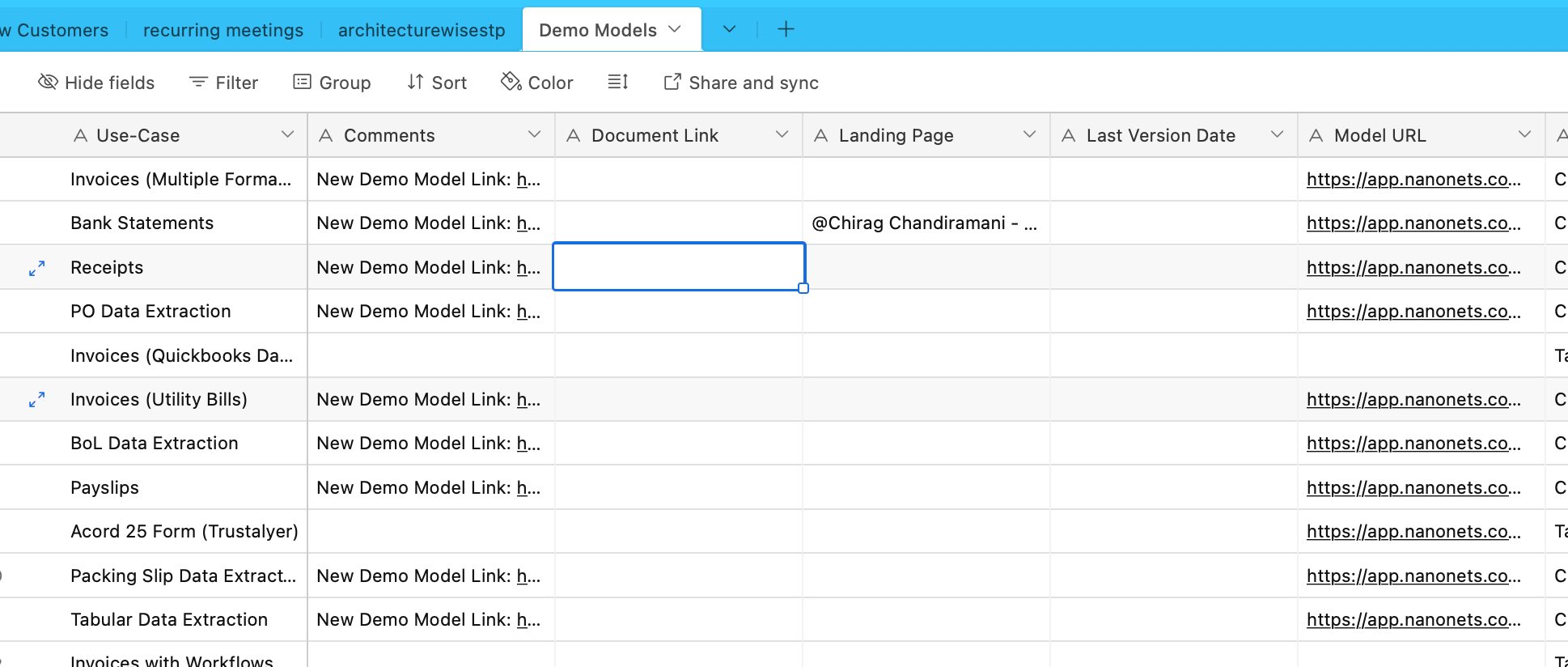

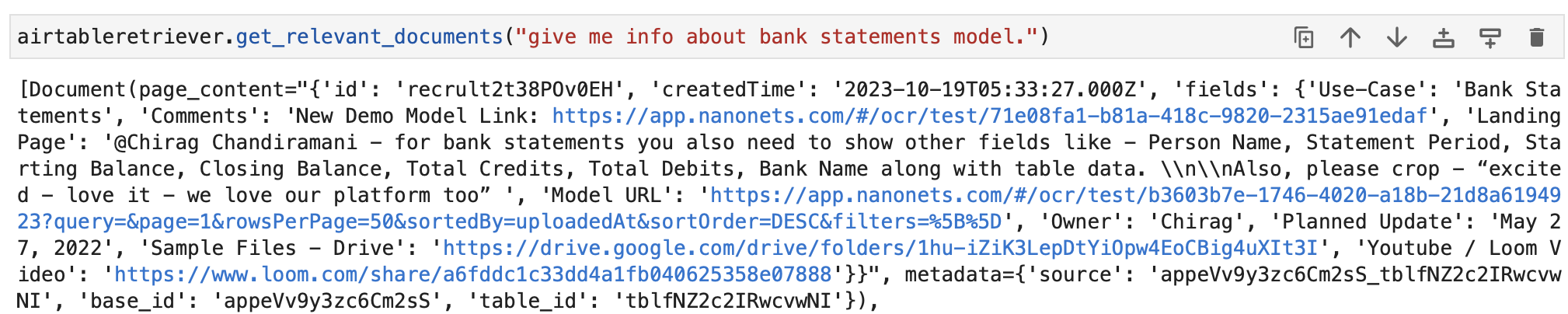

We will ingest data from Airtable now. We have an Airtable containing information about various OCR and data extraction models -

Let us use the AirtableLoader for this, found in the list of integrated loaders.

from langchain.document_loaders import AirtableLoader

api_key = "XXXXX"

base_id = "XXXXX"

table_id = "XXXXX"

loader = AirtableLoader(api_key, table_id, base_id)

airtabledocs = loader.load()

Let us now proceed and learn how to use these document classes.

Document Transformers

Document transformers in LangChain are essential tools designed to manipulate documents, which we created in our previous subsection.

They are used for tasks such as splitting long documents into smaller chunks, combining, and filtering, which are crucial for adapting documents to a model's context window or meeting specific application needs.

One such tool is the RecursiveCharacterTextSplitter, a versatile text splitter that uses a character list for splitting. It allows parameters like chunk size, overlap, and starting index. Here's an example of how it's used in Python:

from langchain.text_splitter import RecursiveCharacterTextSplitter

state_of_the_union = "Your long text here..."

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=100,

chunk_overlap=20,

length_function=len,

add_start_index=True,

)

texts = text_splitter.create_documents([state_of_the_union])

print(texts[0])

print(texts[1])

Another tool is the CharacterTextSplitter, which splits text based on a specified character and includes controls for chunk size and overlap:

from langchain.text_splitter import CharacterTextSplitter

text_splitter = CharacterTextSplitter(

separator="\n\n",

chunk_size=1000,

chunk_overlap=200,

length_function=len,

is_separator_regex=False,

)

texts = text_splitter.create_documents([state_of_the_union])

print(texts[0])

The HTMLHeaderTextSplitter is designed to split HTML content based on header tags, retaining the semantic structure:

from langchain.text_splitter import HTMLHeaderTextSplitter

html_string = "Your HTML content here..."

headers_to_split_on = [("h1", "Header 1"), ("h2", "Header 2")]

html_splitter = HTMLHeaderTextSplitter(headers_to_split_on=headers_to_split_on)

html_header_splits = html_splitter.split_text(html_string)

print(html_header_splits[0])

A more complex manipulation can be achieved by combining HTMLHeaderTextSplitter with another splitter, like the Pipelined Splitter:

from langchain.text_splitter import HTMLHeaderTextSplitter, RecursiveCharacterTextSplitter

url = "https://example.com"

headers_to_split_on = [("h1", "Header 1"), ("h2", "Header 2")]

html_splitter = HTMLHeaderTextSplitter(headers_to_split_on=headers_to_split_on)

html_header_splits = html_splitter.split_text_from_url(url)

chunk_size = 500

text_splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size)

splits = text_splitter.split_documents(html_header_splits)

print(splits[0])

LangChain also offers specific splitters for different programming languages, like the Python Code Splitter and the JavaScript Code Splitter:

from langchain.text_splitter import RecursiveCharacterTextSplitter, Language

python_code = """

def hello_world():

print("Hello, World!")

hello_world()

"""

python_splitter = RecursiveCharacterTextSplitter.from_language(

language=Language.PYTHON, chunk_size=50

)

python_docs = python_splitter.create_documents([python_code])

print(python_docs[0])

js_code = """

function helloWorld() {

console.log("Hello, World!");

}

helloWorld();

"""

js_splitter = RecursiveCharacterTextSplitter.from_language(

language=Language.JS, chunk_size=60

)

js_docs = js_splitter.create_documents([js_code])

print(js_docs[0])

For splitting text based on token count, which is useful for language models with token limits, the TokenTextSplitter is used:

from langchain.text_splitter import TokenTextSplitter

text_splitter = TokenTextSplitter(chunk_size=10)

texts = text_splitter.split_text(state_of_the_union)

print(texts[0])

Finally, the LongContextReorder reorders documents to prevent performance degradation in models due to long contexts:

from langchain.document_transformers import LongContextReorder

reordering = LongContextReorder()

reordered_docs = reordering.transform_documents(docs)

print(reordered_docs[0])

These tools demonstrate various ways to transform documents in LangChain, from simple text splitting to complex reordering and language-specific splitting. For more in-depth and specific use cases, the LangChain documentation and Integrations section should be consulted.

In our examples, the loaders have already created chunked documents for us, and this part is already handled.

Text Embedding Models

Text embedding models in LangChain provide a standardized interface for various embedding model providers like OpenAI, Cohere, and Hugging Face. These models transform text into vector representations, enabling operations like semantic search through text similarity in vector space.

To get started with text embedding models, you typically need to install specific packages and set up API keys. We have already done this for OpenAI

In LangChain, the embed_documents method is used to embed multiple texts, providing a list of vector representations. For instance:

from langchain.embeddings import OpenAIEmbeddings

# Initialize the model

embeddings_model = OpenAIEmbeddings()

# Embed a list of texts

embeddings = embeddings_model.embed_documents(

["Hi there!", "Oh, hello!", "What's your name?", "My friends call me World", "Hello World!"]

)

print("Number of documents embedded:", len(embeddings))

print("Dimension of each embedding:", len(embeddings[0]))

For embedding a single text, such as a search query, the embed_query method is used. This is useful for comparing a query to a set of document embeddings. For example:

from langchain.embeddings import OpenAIEmbeddings

# Initialize the model

embeddings_model = OpenAIEmbeddings()

# Embed a single query

embedded_query = embeddings_model.embed_query("What was the name mentioned in the conversation?")

print("First five dimensions of the embedded query:", embedded_query[:5])

Understanding these embeddings is crucial. Each piece of text is converted into a vector, the dimension of which depends on the model used. For instance, OpenAI models typically produce 1536-dimensional vectors. These embeddings are then used for retrieving relevant information.

LangChain's embedding functionality is not limited to OpenAI but is designed to work with various providers. The setup and usage might slightly differ depending on the provider, but the core concept of embedding texts into vector space remains the same. For detailed usage, including advanced configurations and integrations with different embedding model providers, the LangChain documentation in the Integrations section is a valuable resource.

Vector Stores

Vector stores in LangChain support the efficient storage and searching of text embeddings. LangChain integrates with over 50 vector stores, providing a standardized interface for ease of use.

Example: Storing and Searching Embeddings

After embedding texts, we can store them in a vector store like Chroma and perform similarity searches:

from langchain.vectorstores import Chroma

db = Chroma.from_texts(embedded_texts)

similar_texts = db.similarity_search("search query")

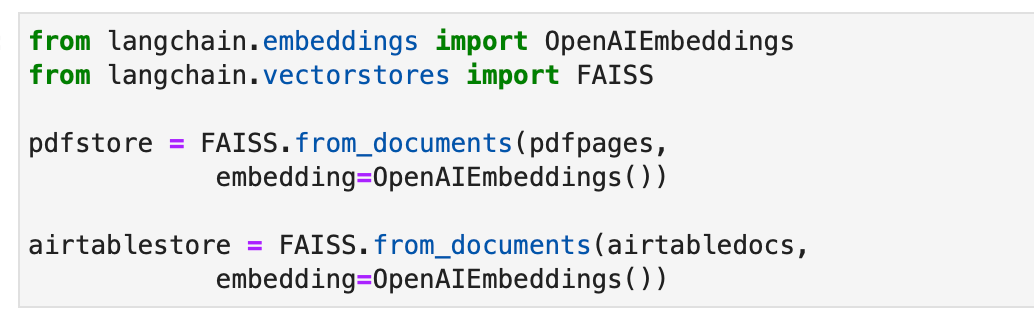

Let us alternatively use the FAISS vector store to create indexes for our documents.

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import FAISS

pdfstore = FAISS.from_documents(pdfpages,

embedding=OpenAIEmbeddings())

airtablestore = FAISS.from_documents(airtabledocs,

embedding=OpenAIEmbeddings())

Retrievers

Retrievers in LangChain are interfaces that return documents in response to an unstructured query. They are more general than vector stores, focusing on retrieval rather than storage. Although vector stores can be used as a retriever's backbone, there are other types of retrievers as well.

To set up a Chroma retriever, you first install it using pip install chromadb. Then, you load, split, embed, and retrieve documents using a series of Python commands. Here's a code example for setting up a Chroma retriever:

from langchain.embeddings import OpenAIEmbeddings

from langchain.text_splitter import CharacterTextSplitter

from langchain.vectorstores import Chroma

full_text = open("state_of_the_union.txt", "r").read()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=100)

texts = text_splitter.split_text(full_text)

embeddings = OpenAIEmbeddings()

db = Chroma.from_texts(texts, embeddings)

retriever = db.as_retriever()

retrieved_docs = retriever.invoke("What did the president say about Ketanji Brown Jackson?")

print(retrieved_docs[0].page_content)

The MultiQueryRetriever automates prompt tuning by generating multiple queries for a user input query and combines the results. Here's an example of its simple usage:

from langchain.chat_models import ChatOpenAI

from langchain.retrievers.multi_query import MultiQueryRetriever

question = "What are the approaches to Task Decomposition?"

llm = ChatOpenAI(temperature=0)

retriever_from_llm = MultiQueryRetriever.from_llm(

retriever=db.as_retriever(), llm=llm

)

unique_docs = retriever_from_llm.get_relevant_documents(query=question)

print("Number of unique documents:", len(unique_docs))

Contextual Compression in LangChain compresses retrieved documents using the context of the query, ensuring only relevant information is returned. This involves content reduction and filtering out less relevant documents. The following code example shows how to use Contextual Compression Retriever:

from langchain.llms import OpenAI

from langchain.retrievers import ContextualCompressionRetriever

from langchain.retrievers.document_compressors import LLMChainExtractor

llm = OpenAI(temperature=0)

compressor = LLMChainExtractor.from_llm(llm)

compression_retriever = ContextualCompressionRetriever(base_compressor=compressor, base_retriever=retriever)

compressed_docs = compression_retriever.get_relevant_documents("What did the president say about Ketanji Jackson Brown")

print(compressed_docs[0].page_content)

The EnsembleRetriever combines different retrieval algorithms to achieve better performance. An example of combining BM25 and FAISS Retrievers is shown in the following code:

from langchain.retrievers import BM25Retriever, EnsembleRetriever

from langchain.vectorstores import FAISS

bm25_retriever = BM25Retriever.from_texts(doc_list).set_k(2)

faiss_vectorstore = FAISS.from_texts(doc_list, OpenAIEmbeddings())

faiss_retriever = faiss_vectorstore.as_retriever(search_kwargs={"k": 2})

ensemble_retriever = EnsembleRetriever(

retrievers=[bm25_retriever, faiss_retriever], weights=[0.5, 0.5]

)

docs = ensemble_retriever.get_relevant_documents("apples")

print(docs[0].page_content)

MultiVector Retriever in LangChain allows querying documents with multiple vectors per document, which is useful for capturing different semantic aspects within a document. Methods for creating multiple vectors include splitting into smaller chunks, summarizing, or generating hypothetical questions. For splitting documents into smaller chunks, the following Python code can be used:

python

from langchain.retrievers.multi_vector import MultiVectorRetriever

from langchain.vectorstores import Chroma

from langchain.embeddings import OpenAIEmbeddings

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.storage import InMemoryStore

from langchain.document_loaders from TextLoader

import uuid

loaders = [TextLoader("file1.txt"), TextLoader("file2.txt")]

docs = [doc for loader in loaders for doc in loader.load()]

text_splitter = RecursiveCharacterTextSplitter(chunk_size=10000)

docs = text_splitter.split_documents(docs)

vectorstore = Chroma(collection_name="full_documents", embedding_function=OpenAIEmbeddings())

store = InMemoryStore()

id_key = "doc_id"

retriever = MultiVectorRetriever(vectorstore=vectorstore, docstore=store, id_key=id_key)

doc_ids = [str(uuid.uuid4()) for _ in docs]

child_text_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

sub_docs = [sub_doc for doc in docs for sub_doc in child_text_splitter.split_documents([doc])]

for sub_doc in sub_docs:

sub_doc.metadata[id_key] = doc_ids[sub_docs.index(sub_doc)]

retriever.vectorstore.add_documents(sub_docs)

retriever.docstore.mset(list(zip(doc_ids, docs)))

Generating summaries for better retrieval due to more focused content representation is another method. Here's an example of generating summaries:

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

from langchain.schema.output_parser import StrOutputParser

from langchain.schema.document import Document

chain = (lambda x: x.page_content) | ChatPromptTemplate.from_template("Summarize the following document:\n\n{doc}") | ChatOpenAI(max_retries=0) | StrOutputParser()

summaries = chain.batch(docs, {"max_concurrency": 5})

summary_docs = [Document(page_content=s, metadata={id_key: doc_ids[i]}) for i, s in enumerate(summaries)]

retriever.vectorstore.add_documents(summary_docs)

retriever.docstore.mset(list(zip(doc_ids, docs)))

Generating hypothetical questions relevant to each document using LLM is another approach. This can be done with the following code:

functions = [{"name": "hypothetical_questions", "parameters": {"questions": {"type": "array", "items": {"type": "string"}}}}]

from langchain.output_parsers.openai_functions import JsonKeyOutputFunctionsParser

chain = (lambda x: x.page_content) | ChatPromptTemplate.from_template("Generate 3 hypothetical questions:\n\n{doc}") | ChatOpenAI(max_retries=0).bind(functions=functions, function_call={"name": "hypothetical_questions"}) | JsonKeyOutputFunctionsParser(key_name="questions")

hypothetical_questions = chain.batch(docs, {"max_concurrency": 5})

question_docs = [Document(page_content=q, metadata={id_key: doc_ids[i]}) for i, questions in enumerate(hypothetical_questions) for q in questions]

retriever.vectorstore.add_documents(question_docs)

retriever.docstore.mset(list(zip(doc_ids, docs)))

The Parent Document Retriever is another retriever that strikes a balance between embedding accuracy and context retention by storing small chunks and retrieving their larger parent documents. Its implementation is as follows:

from langchain.retrievers import ParentDocumentRetriever

loaders = [TextLoader("file1.txt"), TextLoader("file2.txt")]

docs = [doc for loader in loaders for doc in loader.load()]

child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

vectorstore = Chroma(collection_name="full_documents", embedding_function=OpenAIEmbeddings())

store = InMemoryStore()

retriever = ParentDocumentRetriever(vectorstore=vectorstore, docstore=store, child_splitter=child_splitter)

retriever.add_documents(docs, ids=None)

retrieved_docs = retriever.get_relevant_documents("query")

A self-querying retriever constructs structured queries from natural language inputs and applies them to its underlying VectorStore. Its implementation is shown in the following code:

from langchain.chat_models from ChatOpenAI

from langchain.chains.query_constructor.base from AttributeInfo

from langchain.retrievers.self_query.base from SelfQueryRetriever

metadata_field_info = [AttributeInfo(name="genre", description="...", type="string"), ...]

document_content_description = "Brief summary of a movie"

llm = ChatOpenAI(temperature=0)

retriever = SelfQueryRetriever.from_llm(llm, vectorstore, document_content_description, metadata_field_info)

retrieved_docs = retriever.invoke("query")

The WebResearchRetriever performs web research based on a given query -

from langchain.retrievers.web_research import WebResearchRetriever

# Initialize components

llm = ChatOpenAI(temperature=0)

search = GoogleSearchAPIWrapper()

vectorstore = Chroma(embedding_function=OpenAIEmbeddings())

# Instantiate WebResearchRetriever

web_research_retriever = WebResearchRetriever.from_llm(vectorstore=vectorstore, llm=llm, search=search)

# Retrieve documents

docs = web_research_retriever.get_relevant_documents("query")

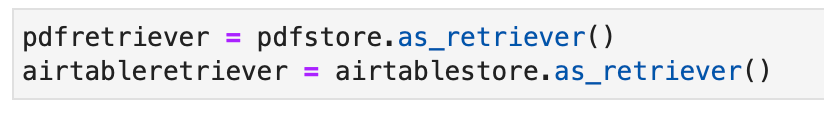

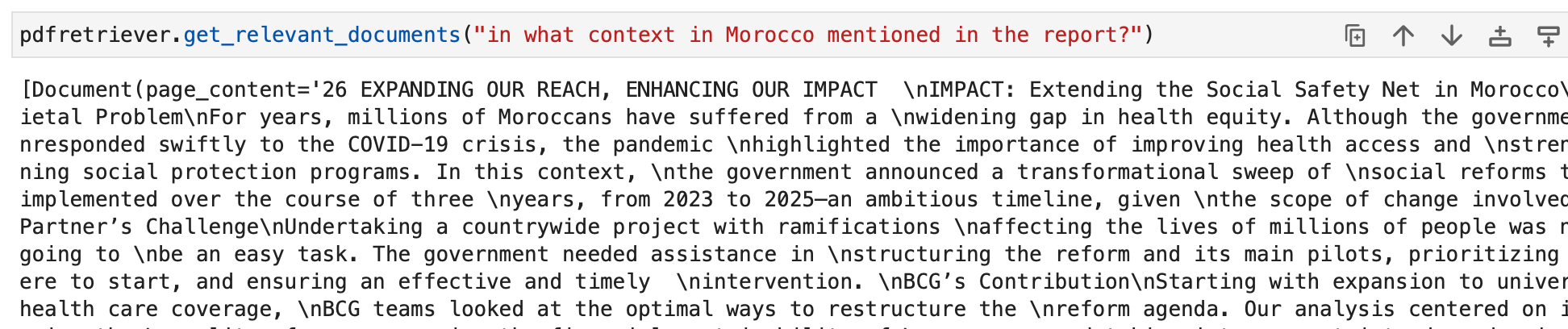

For our examples, we can also use the standard retriever already implemented as part of our vector store object as follows -

We can now query the retrievers. The output of our query will be document objects relevant to the query. These will be ultimately utilized to create relevant responses in further sections.

Module III : Agents

LangChain introduces a powerful concept called "Agents" that takes the idea of chains to a whole new level. Agents leverage language models to dynamically determine sequences of actions to perform, making them incredibly versatile and adaptive. Unlike traditional chains, where actions are hardcoded in code, agents employ language models as reasoning engines to decide which actions to take and in what order.

The Agent is the core component responsible for decision-making. It harnesses the power of a language model and a prompt to determine the next steps to achieve a specific objective. The inputs to an agent typically include:

- Tools: Descriptions of available tools (more on this later).

- User Input: The high-level objective or query from the user.

- Intermediate Steps: A history of (action, tool output) pairs executed to reach the current user input.

The output of an agent can be the next action to take actions (AgentActions) or the final response to send to the user (AgentFinish). An action specifies a tool and the input for that tool.

Tools

Tools are interfaces that an agent can use to interact with the world. They enable agents to perform various tasks, such as searching the web, running shell commands, or accessing external APIs. In LangChain, tools are essential for extending the capabilities of agents and enabling them to accomplish diverse tasks.

To use tools in LangChain, you can load them using the following snippet:

from langchain.agents import load_tools

tool_names = [...]

tools = load_tools(tool_names)

Some tools may require a base Language Model (LLM) to initialize. In such cases, you can pass an LLM as well:

from langchain.agents import load_tools

tool_names = [...]

llm = ...

tools = load_tools(tool_names, llm=llm)

This setup allows you to access a variety of tools and integrate them into your agent's workflows. The complete list of tools with usage documentation is here.

Let us look at some examples of Tools.

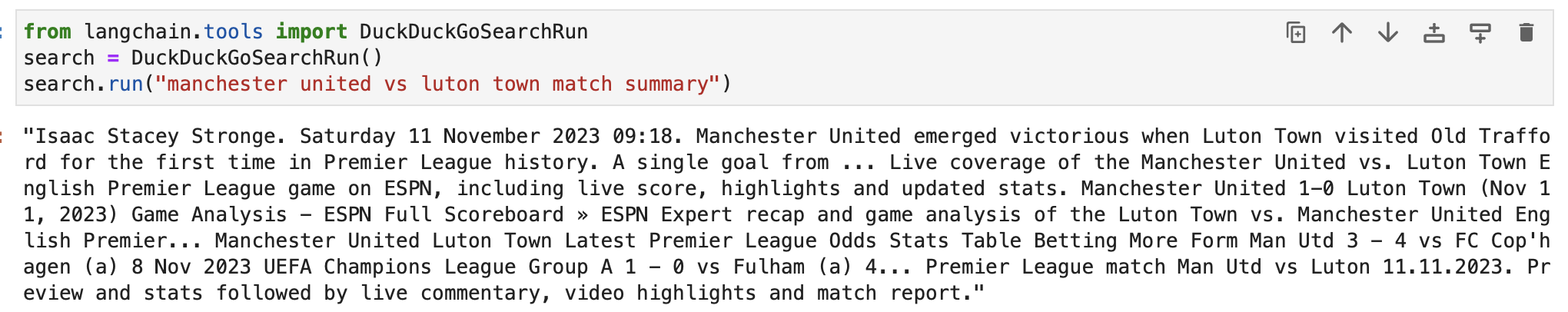

DuckDuckGo

The DuckDuckGo tool enables you to perform web searches using its search engine. Here's how to use it:

from langchain.tools import DuckDuckGoSearchRun

search = DuckDuckGoSearchRun()

search.run("manchester united vs luton town match summary")

DataForSeo

The DataForSeo toolkit allows you to obtain search engine results using the DataForSeo API. To use this toolkit, you'll need to set up your API credentials. Here's how to configure the credentials:

import os

os.environ["DATAFORSEO_LOGIN"] = "<your_api_access_username>"

os.environ["DATAFORSEO_PASSWORD"] = "<your_api_access_password>"

Once your credentials are set, you can create a DataForSeoAPIWrapper tool to access the API:

from langchain.utilities.dataforseo_api_search import DataForSeoAPIWrapper

wrapper = DataForSeoAPIWrapper()

result = wrapper.run("Weather in Los Angeles")

The DataForSeoAPIWrapper tool retrieves search engine results from various sources.

You can customize the type of results and fields returned in the JSON response. For example, you can specify the result types, fields, and set a maximum count for the number of top results to return:

json_wrapper = DataForSeoAPIWrapper(

json_result_types=["organic", "knowledge_graph", "answer_box"],

json_result_fields=["type", "title", "description", "text"],

top_count=3,

)

json_result = json_wrapper.results("Bill Gates")

This example customizes the JSON response by specifying result types, fields, and limiting the number of results.

You can also specify the location and language for your search results by passing additional parameters to the API wrapper:

customized_wrapper = DataForSeoAPIWrapper(

top_count=10,

json_result_types=["organic", "local_pack"],

json_result_fields=["title", "description", "type"],

params={"location_name": "Germany", "language_code": "en"},

)

customized_result = customized_wrapper.results("coffee near me")

By providing location and language parameters, you can tailor your search results to specific regions and languages.

You have the flexibility to choose the search engine you want to use. Simply specify the desired search engine:

customized_wrapper = DataForSeoAPIWrapper(

top_count=10,

json_result_types=["organic", "local_pack"],

json_result_fields=["title", "description", "type"],

params={"location_name": "Germany", "language_code": "en", "se_name": "bing"},

)

customized_result = customized_wrapper.results("coffee near me")

In this example, the search is customized to use Bing as the search engine.

The API wrapper also allows you to specify the type of search you want to perform. For instance, you can perform a maps search:

maps_search = DataForSeoAPIWrapper(

top_count=10,

json_result_fields=["title", "value", "address", "rating", "type"],

params={

"location_coordinate": "52.512,13.36,12z",

"language_code": "en",

"se_type": "maps",

},

)

maps_search_result = maps_search.results("coffee near me")

This customizes the search to retrieve maps-related information.

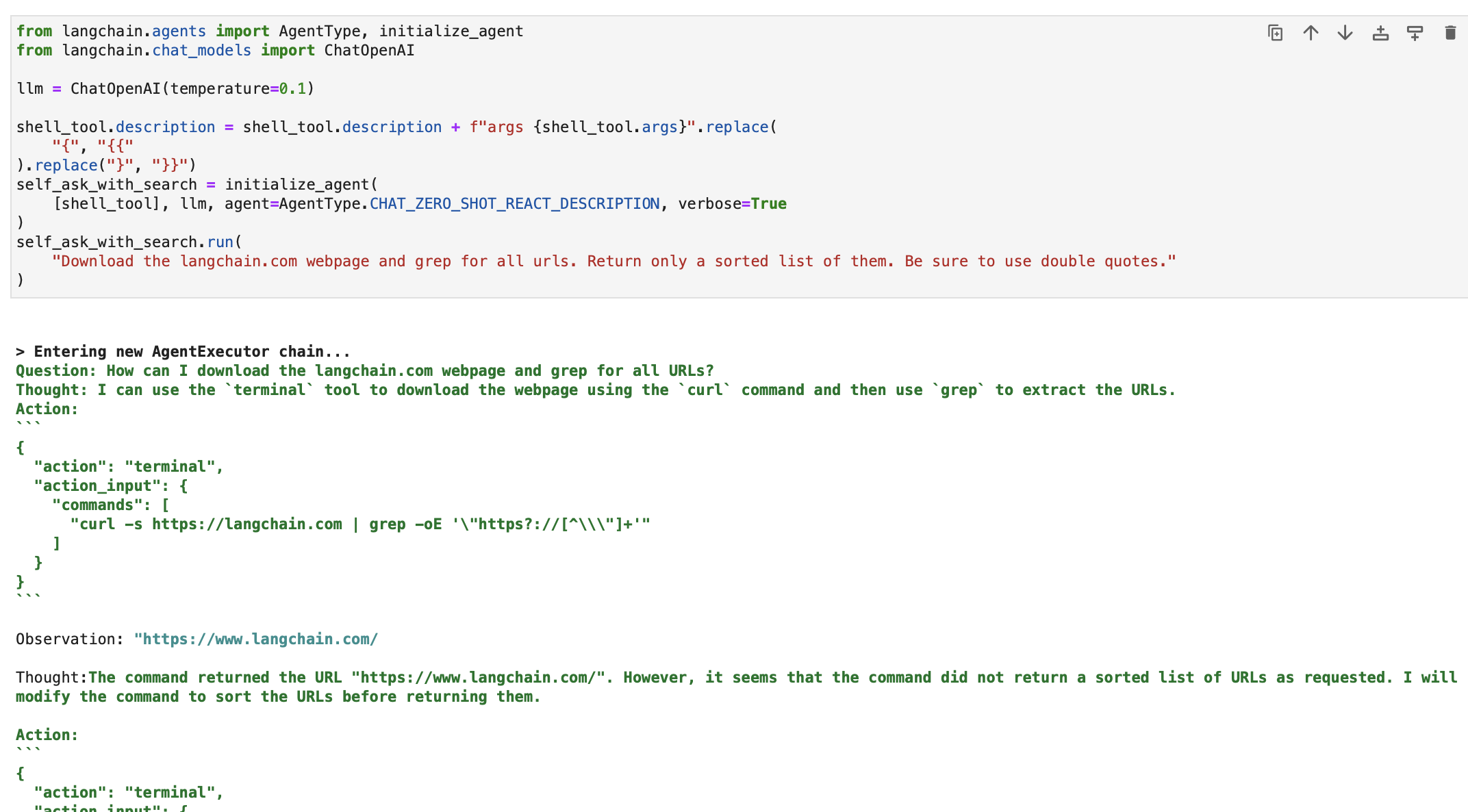

Shell (bash)

The Shell toolkit provides agents with access to the shell environment, allowing them to execute shell commands. This feature is powerful but should be used with caution, especially in sandboxed environments. Here's how you can use the Shell tool:

from langchain.tools import ShellTool

shell_tool = ShellTool()

result = shell_tool.run({"commands": ["echo 'Hello World!'", "time"]})

In this example, the Shell tool runs two shell commands: echoing "Hello World!" and displaying the current time.

You can provide the Shell tool to an agent to perform more complex tasks. Here's an example of an agent fetching links from a web page using the Shell tool:

from langchain.agents import AgentType, initialize_agent

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0.1)

shell_tool.description = shell_tool.description + f"args {shell_tool.args}".replace(

"{", "{{"

).replace("}", "}}")

self_ask_with_search = initialize_agent(

[shell_tool], llm, agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION, verbose=True

)

self_ask_with_search.run(

"Download the langchain.com webpage and grep for all urls. Return only a sorted list of them. Be sure to use double quotes."

)

In this scenario, the agent uses the Shell tool to execute a sequence of commands to fetch, filter, and sort URLs from a web page.

The examples provided demonstrate some of the tools available in LangChain. These tools ultimately extend the capabilities of agents (explored in next subsection) and empower them to perform various tasks efficiently. Depending on your requirements, you can choose the tools and toolkits that best suit your project's needs and integrate them into your agent's workflows.

Back to Agents

Let's move on to agents now.

The AgentExecutor is the runtime environment for an agent. It is responsible for calling the agent, executing the actions it selects, passing the action outputs back to the agent, and repeating the process until the agent finishes. In pseudocode, the AgentExecutor might look something like this:

next_action = agent.get_action(...)

while next_action != AgentFinish:

observation = run(next_action)

next_action = agent.get_action(..., next_action, observation)

return next_action

The AgentExecutor handles various complexities, such as dealing with cases where the agent selects a non-existent tool, handling tool errors, managing agent-produced outputs, and providing logging and observability at all levels.

While the AgentExecutor class is the primary agent runtime in LangChain, there are other, more experimental runtimes supported, including:

- Plan-and-execute Agent

- Baby AGI

- Auto GPT

To gain a better understanding of the agent framework, let's build a basic agent from scratch, and then move on to explore pre-built agents.

Before we dive into building the agent, it's essential to revisit some key terminology and schema:

- AgentAction: This is a data class representing the action an agent should take. It consists of a

toolproperty (the name of the tool to invoke) and atool_inputproperty (the input for that tool). - AgentFinish: This data class indicates that the agent has finished its task and should return a response to the user. It typically includes a dictionary of return values, often with a key "output" containing the response text.

- Intermediate Steps: These are the records of previous agent actions and corresponding outputs. They are crucial for passing context to future iterations of the agent.

In our example, we will use OpenAI Function Calling to create our agent. This approach is reliable for agent creation. We'll start by creating a simple tool that calculates the length of a word. This tool is useful because language models can sometimes make mistakes due to tokenization when counting word lengths.

First, let's load the language model we'll use to control the agent:

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

Let's test the model with a word length calculation:

llm.invoke("how many letters in the word educa?")

The response should indicate the number of letters in the word "educa."

Next, we'll define a simple Python function to calculate the length of a word:

from langchain.agents import tool

@tool

def get_word_length(word: str) -> int:

"""Returns the length of a word."""

return len(word)

We've created a tool named get_word_length that takes a word as input and returns its length.

Now, let's create the prompt for the agent. The prompt instructs the agent on how to reason and format the output. In our case, we're using OpenAI Function Calling, which requires minimal instructions. We'll define the prompt with placeholders for user input and agent scratchpad:

from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages(

[

(

"system",

"You are a very powerful assistant but not great at calculating word lengths.",

),

("user", "{input}"),

MessagesPlaceholder(variable_name="agent_scratchpad"),

]

)

Now, how does the agent know which tools it can use? We're relying on OpenAI function calling language models, which require functions to be passed separately. To provide our tools to the agent, we'll format them as OpenAI function calls:

from langchain.tools.render import format_tool_to_openai_function

llm_with_tools = llm.bind(functions=[format_tool_to_openai_function(t) for t in tools])

Now, we can create the agent by defining input mappings and connecting the components:

This is LCEL language. We will discuss this later in detail.

from langchain.agents.format_scratchpad import format_to_openai_function_messages

from langchain.agents.output_parsers import OpenAIFunctionsAgentOutputParser

agent = (

{

"input": lambda x: x["input"],

"agent_scratchpad": lambda x: format_to_openai

_function_messages(

x["intermediate_steps"]

),

}

| prompt

| llm_with_tools

| OpenAIFunctionsAgentOutputParser()

)

We've created our agent, which understands user input, uses available tools, and formats output. Now, let's interact with it:

agent.invoke({"input": "how many letters in the word educa?", "intermediate_steps": []})

The agent should respond with an AgentAction, indicating the next action to take.

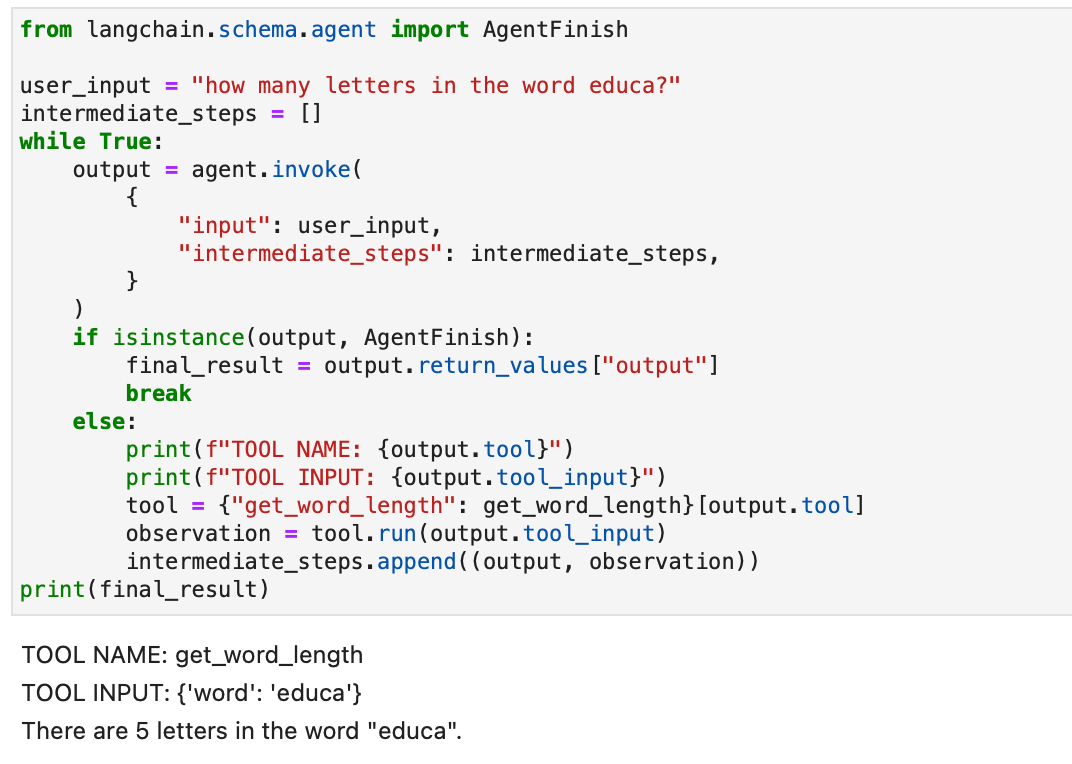

We've created the agent, but now we need to write a runtime for it. The simplest runtime is one that continuously calls the agent, executes actions, and repeats until the agent finishes. Here's an example:

from langchain.schema.agent import AgentFinish

user_input = "how many letters in the word educa?"

intermediate_steps = []

while True:

output = agent.invoke(

{

"input": user_input,

"intermediate_steps": intermediate_steps,

}

)

if isinstance(output, AgentFinish):

final_result = output.return_values["output"]

break

else:

print(f"TOOL NAME: {output.tool}")

print(f"TOOL INPUT: {output.tool_input}")

tool = {"get_word_length": get_word_length}[output.tool]

observation = tool.run(output.tool_input)

intermediate_steps.append((output, observation))

print(final_result)

In this loop, we repeatedly call the agent, execute actions, and update the intermediate steps until the agent finishes. We also handle tool interactions within the loop.

To simplify this process, LangChain provides the AgentExecutor class, which encapsulates agent execution and offers error handling, early stopping, tracing, and other improvements. Let's use AgentExecutor to interact with the agent:

from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

agent_executor.invoke({"input": "how many letters in the word educa?"})

AgentExecutor simplifies the execution process and provides a convenient way to interact with the agent.

Memory is also discussed in detail later.

The agent we've created so far is stateless, meaning it doesn't remember previous interactions. To enable follow-up questions and conversations, we need to add memory to the agent. This involves two steps:

- Add a memory variable in the prompt to store chat history.

- Keep track of the chat history during interactions.

Let's start by adding a memory placeholder in the prompt:

from langchain.prompts import MessagesPlaceholder

MEMORY_KEY = "chat_history"

prompt = ChatPromptTemplate.from_messages(

[

(

"system",

"You are a very powerful assistant but not great at calculating word lengths.",

),

MessagesPlaceholder(variable_name=MEMORY_KEY),

("user", "{input}"),

MessagesPlaceholder(variable_name="agent_scratchpad"),

]

)

Now, create a list to track the chat history:

from langchain.schema.messages import HumanMessage, AIMessage

chat_history = []

In the agent creation step, we'll include the memory as well:

agent = (

{

"input": lambda x: x["input"],

"agent_scratchpad": lambda x: format_to_openai_function_messages(

x["intermediate_steps"]

),

"chat_history": lambda x: x["chat_history"],

}

| prompt

| llm_with_tools

| OpenAIFunctionsAgentOutputParser()

)

Now, when running the agent, make sure to update the chat history:

input1 = "how many letters in the word educa?"

result = agent_executor.invoke({"input": input1, "chat_history": chat_history})

chat_history.extend([

HumanMessage(content=input1),

AIMessage(content=result["output"]),

])

agent_executor.invoke({"input": "is that a real word?", "chat_history": chat_history})

This enables the agent to maintain a conversation history and answer follow-up questions based on previous interactions.

Congratulations! You've successfully created and executed your first end-to-end agent in LangChain. To delve deeper into LangChain's capabilities, you can explore:

- Different agent types supported.

- Pre-built Agents

- How to work with tools and tool integrations.

Agent Types

LangChain offers various agent types, each suited for specific use cases. Here are some of the available agents:

- Zero-shot ReAct: This agent uses the ReAct framework to choose tools based solely on their descriptions. It requires descriptions for each tool and is highly versatile.

- Structured input ReAct: This agent handles multi-input tools and is suitable for complex tasks like navigating a web browser. It uses a tools' argument schema for structured input.

- OpenAI Functions: Specifically designed for models fine-tuned for function calling, this agent is compatible with models like gpt-3.5-turbo-0613 and gpt-4-0613. We used this to create our first agent above.

- Conversational: Designed for conversational settings, this agent uses ReAct for tool selection and utilizes memory to remember previous interactions.

- Self-ask with search: This agent relies on a single tool, "Intermediate Answer," which looks up factual answers to questions. It's equivalent to the original self-ask with search paper.

- ReAct document store: This agent interacts with a document store using the ReAct framework. It requires "Search" and "Lookup" tools and is similar to the original ReAct paper's Wikipedia example.

Explore these agent types to find the one that best suits your needs in LangChain. These agents allow you to bind set of tools within them to handle actions and generate responses. Learn more on how to build your own agent with tools here.

Prebuilt Agents

Let's continue our exploration of agents, focusing on prebuilt agents available in LangChain.

Gmail

LangChain offers a Gmail toolkit that allows you to connect your LangChain email to the Gmail API. To get started, you'll need to set up your credentials, which are explained in the Gmail API documentation. Once you have downloaded the credentials.json file, you can proceed with using the Gmail API. Additionally, you'll need to install some required libraries using the following commands:

pip install --upgrade google-api-python-client > /dev/null

pip install --upgrade google-auth-oauthlib > /dev/null

pip install --upgrade google-auth-httplib2 > /dev/null

pip install beautifulsoup4 > /dev/null # Optional for parsing HTML messages

You can create the Gmail toolkit as follows:

from langchain.agents.agent_toolkits import GmailToolkit

toolkit = GmailToolkit()

You can also customize authentication as per your needs. Behind the scenes, a googleapi resource is created using the following methods:

from langchain.tools.gmail.utils import build_resource_service, get_gmail_credentials

credentials = get_gmail_credentials(

token_file="token.json",

scopes=["https://mail.google.com/"],

client_secrets_file="credentials.json",

)

api_resource = build_resource_service(credentials=credentials)

toolkit = GmailToolkit(api_resource=api_resource)

The toolkit offers various tools that can be used within an agent, including:

GmailCreateDraft: Create a draft email with specified message fields.GmailSendMessage: Send email messages.GmailSearch: Search for email messages or threads.GmailGetMessage: Fetch an email by message ID.GmailGetThread: Search for email messages.

To use these tools within an agent, you can initialize the agent as follows:

from langchain.llms import OpenAI

from langchain.agents import initialize_agent, AgentType

llm = OpenAI(temperature=0)

agent = initialize_agent(

tools=toolkit.get_tools(),

llm=llm,

agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,

)

Here are a couple of examples of how these tools can be used:

- Create a Gmail draft for editing:

agent.run(

"Create a gmail draft for me to edit of a letter from the perspective of a sentient parrot "

"who is looking to collaborate on some research with her estranged friend, a cat. "

"Under no circumstances may you send the message, however."

)

- Search for the latest email in your drafts:

agent.run("Could you search in my drafts for the latest email?")

These examples demonstrate the capabilities of LangChain's Gmail toolkit within an agent, enabling you to interact with Gmail programmatically.

SQL Database Agent

This section provides an overview of an agent designed to interact with SQL databases, particularly the Chinook database. This agent can answer general questions about a database and recover from errors. Please note that it is still in active development, and not all answers may be correct. Be cautious when running it on sensitive data, as it may perform DML statements on your database.

To use this agent, you can initialize it as follows:

from langchain.agents import create_sql_agent

from langchain.agents.agent_toolkits import SQLDatabaseToolkit

from langchain.sql_database import SQLDatabase

from langchain.llms.openai import OpenAI

from langchain.agents import AgentExecutor

from langchain.agents.agent_types import AgentType

from langchain.chat_models import ChatOpenAI

db = SQLDatabase.from_uri("sqlite:///../../../../../notebooks/Chinook.db")

toolkit = SQLDatabaseToolkit(db=db, llm=OpenAI(temperature=0))

agent_executor = create_sql_agent(

llm=OpenAI(temperature=0),

toolkit=toolkit,

verbose=True,

agent_type=AgentType.ZERO_SHOT_REACT_DESCRIPTION,

)

This agent can be initialized using the ZERO_SHOT_REACT_DESCRIPTION agent type. It is designed to answer questions and provide descriptions. Alternatively, you can initialize the agent using the OPENAI_FUNCTIONS agent type with OpenAI's GPT-3.5-turbo model, which we used in our earlier client.

Disclaimer

- The query chain may generate insert/update/delete queries. Be cautious, and use a custom prompt or create a SQL user without write permissions if needed.

- Be aware that running certain queries, such as "run the biggest query possible," could overload your SQL database, especially if it contains millions of rows.

- Data warehouse-oriented databases often support user-level quotas to limit resource usage.

You can ask the agent to describe a table, such as the "playlisttrack" table. Here's an example of how to do it:

agent_executor.run("Describe the playlisttrack table")

The agent will provide information about the table's schema and sample rows.

If you mistakenly ask about a table that doesn't exist, the agent can recover and provide information about the closest matching table. For example:

agent_executor.run("Describe the playlistsong table")

The agent will find the nearest matching table and provide information about it.

You can also ask the agent to run queries on the database. For instance:

agent_executor.run("List the total sales per country. Which country's customers spent the most?")

The agent will execute the query and provide the result, such as the country with the highest total sales.

To get the total number of tracks in each playlist, you can use the following query:

agent_executor.run("Show the total number of tracks in each playlist. The Playlist name should be included in the result.")

The agent will return the playlist names along with the corresponding total track counts.

In cases where the agent encounters errors, it can recover and provide accurate responses. For instance:

agent_executor.run("Who are the top 3 best selling artists?")

Even after encountering an initial error, the agent will adjust and provide the correct answer, which, in this case, is the top 3 best-selling artists.

Pandas DataFrame Agent

This section introduces an agent designed to interact with Pandas DataFrames for question-answering purposes. Please note that this agent utilizes the Python agent under the hood to execute Python code generated by a language model (LLM). Exercise caution when using this agent to prevent potential harm from malicious Python code generated by the LLM.

You can initialize the Pandas DataFrame agent as follows:

from langchain_experimental.agents.agent_toolkits import create_pandas_dataframe_agent

from langchain.chat_models import ChatOpenAI

from langchain.agents.agent_types import AgentType

from langchain.llms import OpenAI

import pandas as pd

df = pd.read_csv("titanic.csv")

# Using ZERO_SHOT_REACT_DESCRIPTION agent type

agent = create_pandas_dataframe_agent(OpenAI(temperature=0), df, verbose=True)

# Alternatively, using OPENAI_FUNCTIONS agent type

# agent = create_pandas_dataframe_agent(

# ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613"),

# df,

# verbose=True,

# agent_type=AgentType.OPENAI_FUNCTIONS,

# )

You can ask the agent to count the number of rows in the DataFrame:

agent.run("how many rows are there?")

The agent will execute the code df.shape[0] and provide the answer, such as "There are 891 rows in the dataframe."

You can also ask the agent to filter rows based on specific criteria, such as finding the number of people with more than 3 siblings:

agent.run("how many people have more than 3 siblings")

The agent will execute the code df[df['SibSp'] > 3].shape[0] and provide the answer, such as "30 people have more than 3 siblings."

If you want to calculate the square root of the average age, you can ask the agent:

agent.run("whats the square root of the average age?")

The agent will calculate the average age using df['Age'].mean() and then calculate the square root using math.sqrt(). It will provide the answer, such as "The square root of the average age is 5.449689683556195."

Let's create a copy of the DataFrame, and missing age values are filled with the mean age:

df1 = df.copy()

df1["Age"] = df1["Age"].fillna(df1["Age"].mean())

Then, you can initialize the agent with both DataFrames and ask it a question:

agent = create_pandas_dataframe_agent(OpenAI(temperature=0), [df, df1], verbose=True)

agent.run("how many rows in the age column are different?")

The agent will compare the age columns in both DataFrames and provide the answer, such as "177 rows in the age column are different."

Jira Toolkit

This section explains how to use the Jira toolkit, which allows agents to interact with a Jira instance. You can perform various actions such as searching for issues and creating issues using this toolkit. It utilizes the atlassian-python-api library. To use this toolkit, you need to set environment variables for your Jira instance, including JIRA_API_TOKEN, JIRA_USERNAME, and JIRA_INSTANCE_URL. Additionally, you may need to set your OpenAI API key as an environment variable.

To get started, install the atlassian-python-api library and set the required environment variables:

%pip install atlassian-python-api

import os

from langchain.agents import AgentType

from langchain.agents import initialize_agent

from langchain.agents.agent_toolkits.jira.toolkit import JiraToolkit

from langchain.llms import OpenAI

from langchain.utilities.jira import JiraAPIWrapper

os.environ["JIRA_API_TOKEN"] = "abc"

os.environ["JIRA_USERNAME"] = "123"

os.environ["JIRA_INSTANCE_URL"] = "https://jira.atlassian.com"

os.environ["OPENAI_API_KEY"] = "xyz"

llm = OpenAI(temperature=0)

jira = JiraAPIWrapper()

toolkit = JiraToolkit.from_jira_api_wrapper(jira)

agent = initialize_agent(

toolkit.get_tools(), llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True

)

You can instruct the agent to create a new issue in a specific project with a summary and description:

agent.run("make a new issue in project PW to remind me to make more fried rice")

The agent will execute the necessary actions to create the issue and provide a response, such as "A new issue has been created in project PW with the summary 'Make more fried rice' and description 'Reminder to make more fried rice'."

This allows you to interact with your Jira instance using natural language instructions and the Jira toolkit.

Module IV : Chains

LangChain is a tool designed for utilizing Large Language Models (LLMs) in complex applications. It provides frameworks for creating chains of components, including LLMs and other types of components. Two primary frameworks

- The LangChain Expression Language (LCEL)

- Legacy Chain interface

The LangChain Expression Language (LCEL) is a syntax that allows for intuitive composition of chains. It supports advanced features like streaming, asynchronous calls, batching, parallelization, retries, fallbacks, and tracing. For example, you can compose a prompt, model, and output parser in LCEL as shown in the following code:

from langchain.prompts import ChatPromptTemplate

from langchain.schema import StrOutputParser

model = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

prompt = ChatPromptTemplate.from_messages([

("system", "You're a very knowledgeable historian who provides accurate and eloquent answers to historical questions."),

("human", "{question}")

])

runnable = prompt | model | StrOutputParser()

for chunk in runnable.stream({"question": "What are the seven wonders of the world"}):

print(chunk, end="", flush=True)

Alternatively, the LLMChain is an option similar to LCEL for composing components. The LLMChain example is as follows:

from langchain.chains import LLMChain

chain = LLMChain(llm=model, prompt=prompt, output_parser=StrOutputParser())

chain.run(question="What are the seven wonders of the world")

Chains in LangChain can also be stateful by incorporating a Memory object. This allows for data persistence across calls, as shown in this example:

from langchain.chains import ConversationChain

from langchain.memory import ConversationBufferMemory

conversation = ConversationChain(llm=chat, memory=ConversationBufferMemory())

conversation.run("Answer briefly. What are the first 3 colors of a rainbow?")

conversation.run("And the next 4?")

LangChain also supports integration with OpenAI's function-calling APIs, which is useful for obtaining structured outputs and executing functions within a chain. For getting structured outputs, you can specify them using Pydantic classes or JsonSchema, as illustrated below:

from langchain.pydantic_v1 import BaseModel, Field

from langchain.chains.openai_functions import create_structured_output_runnable

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

class Person(BaseModel):

name: str = Field(..., description="The person's name")

age: int = Field(..., description="The person's age")

fav_food: Optional[str] = Field(None, description="The person's favorite food")

llm = ChatOpenAI(model="gpt-4", temperature=0)

prompt = ChatPromptTemplate.from_messages([

# Prompt messages here

])

runnable = create_structured_output_runnable(Person, llm, prompt)

runnable.invoke({"input": "Sally is 13"})

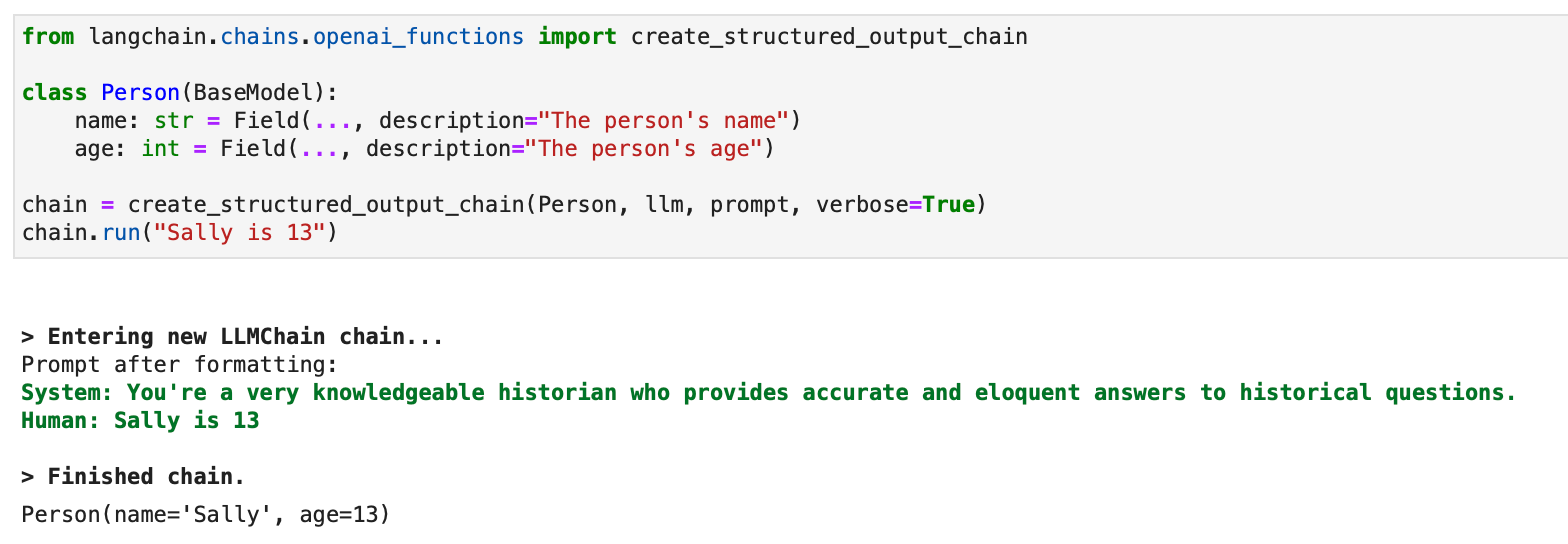

For structured outputs, a legacy approach using LLMChain is also available:

from langchain.chains.openai_functions import create_structured_output_chain

class Person(BaseModel):

name: str = Field(..., description="The person's name")

age: int = Field(..., description="The person's age")

chain = create_structured_output_chain(Person, llm, prompt, verbose=True)

chain.run("Sally is 13")

LangChain leverages OpenAI functions to create various specific chains for different purposes. These include chains for extraction, tagging, OpenAPI, and QA with citations.

In the context of extraction, the process is similar to the structured output chain but focuses on information or entity extraction. For tagging, the idea is to label a document with classes such as sentiment, language, style, covered topics, or political tendency.

An example of how tagging works in LangChain can be demonstrated with a Python code. The process begins with installing the necessary packages and setting up the environment:

pip install langchain openai

# Set env var OPENAI_API_KEY or load from a .env file:

# import dotenv

# dotenv.load_dotenv()

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

from langchain.chains import create_tagging_chain, create_tagging_chain_pydantic

The schema for tagging is defined, specifying the properties and their expected types:

schema = {

"properties": {

"sentiment": {"type": "string"},

"aggressiveness": {"type": "integer"},

"language": {"type": "string"},

}

}

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613")

chain = create_tagging_chain(schema, llm)

Examples of running the tagging chain with different inputs show the model's ability to interpret sentiments, languages, and aggressiveness:

inp = "Estoy increiblemente contento de haberte conocido! Creo que seremos muy buenos amigos!"

chain.run(inp)

# {'sentiment': 'positive', 'language': 'Spanish'}

inp = "Estoy muy enojado con vos! Te voy a dar tu merecido!"

chain.run(inp)

# {'sentiment': 'enojado', 'aggressiveness': 1, 'language': 'es'}

For finer control, the schema can be defined more specifically, including possible values, descriptions, and required properties. An example of this enhanced control is shown below:

schema = {

"properties": {

# Schema definitions here

},

"required": ["language", "sentiment", "aggressiveness"],

}

chain = create_tagging_chain(schema, llm)

Pydantic schemas can also be used for defining tagging criteria, providing a Pythonic way to specify required properties and types:

from enum import Enum

from pydantic import BaseModel, Field

class Tags(BaseModel):

# Class fields here

chain = create_tagging_chain_pydantic(Tags, llm)

Additionally, LangChain's metadata tagger document transformer can be used to extract metadata from LangChain Documents, offering similar functionality to the tagging chain but applied to a LangChain Document.

Citing retrieval sources is another feature of LangChain, using OpenAI functions to extract citations from text. This is demonstrated in the following code:

from langchain.chains import create_citation_fuzzy_match_chain

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613")

chain = create_citation_fuzzy_match_chain(llm)

# Further code for running the chain and displaying results

In LangChain, chaining in Large Language Model (LLM) applications typically involves combining a prompt template with an LLM and optionally an output parser. The recommended way to do this is through the LangChain Expression Language (LCEL), although the legacy LLMChain approach is also supported.

Using LCEL, the BasePromptTemplate, BaseLanguageModel, and BaseOutputParser all implement the Runnable interface and can be easily piped into one another. Here's an example demonstrating this:

from langchain.prompts import PromptTemplate

from langchain.chat_models import ChatOpenAI

from langchain.schema import StrOutputParser

prompt = PromptTemplate.from_template(

"What is a good name for a company that makes {product}?"

)