Invoice data extraction is one of the hardest problems in document processing. As someone who works with enterprise-scale automation systems, I've seen companies struggle with everything from poor scan quality to inconsistent layouts. What seems like a straightforward task, pulling numbers and text from a document, quickly gets complicated once real-world invoices are involved.

In this guide, I'll walk you through the fundamentals of invoice extraction using Python. You'll learn how to handle both structured and unstructured data, process different types of PDFs, and understand where machine learning fits into the picture.

By the end of this guide, you'll know how to implement invoice extraction using popular Python libraries, what capabilities and limitations to expect, and what it takes to scale these solutions for production use.

What is Invoice Data Extraction?

Before diving into the technical details, let’s understand what invoices are.

An invoice is a document that outlines the details of a transaction between a buyer and a seller, including the date of the transaction, the names and addresses of the buyer and seller, a description of the goods or services provided, the quantity of items, the price per unit, and the total amount due.

Despite the apparent simplicity of invoices, extracting data from them can be a complex and challenging process. This is because invoices may contain both structured and unstructured data.

Structured data refers to data that is organized in a specific format, such as tables or lists. Invoices often include structured data in the form of tables that outline the line items and quantities of goods or services provided.

Unstructured data, on the other hand, is not organized in a fixed format and is harder to recognize and extract. Invoices may contain unstructured data in the form of free-text descriptions, logos, or images.

Extracting data from invoices by hand is expensive and can delay payment processing, especially when dealing with large volumes of invoices. This is where automated invoice data extraction comes in.

Invoice data extraction is the process of pulling both structured and unstructured data out of invoices. The variety of invoice formats and data types makes this challenging, but much of the work can be automated with Python and its OCR, PDF, and data-processing libraries.

Challenges in invoice data extraction

Here's the thing about invoice extraction — it's never as straightforward as it looks. While building extraction systems for various organizations, I've learned that certain challenges appear again and again.

Some of the main problems you'll need to solve:

- Variety of invoice formats: Invoices may come in different formats, including paper, email, PDF, or EDI, which can make it difficult to extract and process data consistently.

- Data quality and accuracy: Manually processing invoices can be prone to errors, leading to delays and inaccuracies in payment processing.

- Large volumes of data: Many businesses deal with a high volume of invoices, which can be difficult and time-consuming to process manually.

- Different languages and font sizes: Invoices from international vendors may be in different languages, which can be difficult to process using automated tools. Similarly, invoices may contain different font sizes and styles, which can impact the accuracy of data extraction.

- Integration with other systems: Extracted data from invoices often needs to be integrated with other systems, such as accounting or enterprise resource planning (ERP) software, which can add an extra layer of complexity to the process.

Python for Invoice Data Extraction

Python offers several powerful tools for invoice extraction, each suited for different aspects of the problem. Let's look at the key libraries you can use and what each one does best.

These libraries typically plug into an automated data extraction pipeline that handles ingestion → extraction → validation → export.

1. Pytesseract

Pytesseract is a Python wrapper for Google's Tesseract OCR engine, one of the most popular OCR engines available. It extracts text from scanned images, including invoices, and that text can then be parsed for key-value pairs and other information in the header and footer sections. Pytesseract calls the tesseract executable, so Tesseract itself has to be installed separately.
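Here's a minimal sketch of that workflow, assuming Tesseract and Pillow are installed and that header fields appear as "Label: value" on a single line. `ocr_image` calls pytesseract's `image_to_string`; `parse_key_values` is a hypothetical helper, not part of pytesseract.

```python
import re


def ocr_image(path):
    """Run Tesseract on an image file and return the recognized text."""
    # Imported here so parse_key_values can be used without Tesseract installed
    import pytesseract
    from PIL import Image

    with Image.open(path) as image:
        return pytesseract.image_to_string(image)


def parse_key_values(text):
    """Collect 'Label: value' lines from OCR text into a dict.

    Assumes each field sits on one line; layouts that print the value on the
    next line need a different pattern.
    """
    pairs = {}
    for line in text.splitlines():
        match = re.match(r'\s*([A-Za-z][A-Za-z #.]*?)\s*:\s*(.+?)\s*$', line)
        if match:
            pairs[match.group(1)] = match.group(2)
    return pairs


if __name__ == '__main__':
    sample = 'Invoice Number: INV-3337\nInvoice Date: January 25, 2016\nThank you!'
    print(parse_key_values(sample))
    # {'Invoice Number': 'INV-3337', 'Invoice Date': 'January 25, 2016'}
```

In practice you'd call `parse_key_values(ocr_image('sample-invoice.jpg'))`; lines without a colon, like "Thank you!", are simply skipped.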

2. Pandas

Pandas is a powerful data manipulation library for Python that provides data structures for efficiently storing and manipulating large datasets. Pandas doesn't read invoices by itself, but it's the natural place to clean, reshape, and validate the tabular data that other tools pull from the line-items section, such as product descriptions, quantities, and prices.
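A short sketch of that cleanup, using a hypothetical line-items table in which every cell arrived as a string (typical of table extractors). It converts the money columns to numbers and flags rows where quantity times unit price doesn't equal the printed amount; the column names and the one-cent tolerance are assumptions.

```python
import pandas as pd

# Hypothetical line items as a table extractor might return them: all strings
items = pd.DataFrame({
    'description': ['Web Design', 'Hosting'],
    'quantity': ['1', '12'],
    'unit_price': ['$85.00', '$1,250.00'],
    'amount': ['$85.00', '$15,000.00'],
})


def to_number(series):
    """Strip currency symbols and thousands separators, then convert to numbers."""
    cleaned = series.str.replace(r'[$,]', '', regex=True).str.strip()
    return pd.to_numeric(cleaned, errors='coerce')  # unparseable cells become NaN


for column in ['quantity', 'unit_price', 'amount']:
    items[column] = to_number(items[column])

# Flag rows where quantity x unit price doesn't match the printed amount
items['amount_ok'] = (items['quantity'] * items['unit_price'] - items['amount']).abs() < 0.01

print(items)
print('Subtotal:', items['amount'].sum())
```

Floats are fine for a quick check like this; if you need exact cent arithmetic, Python's `decimal.Decimal` is the safer choice.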

3. Tabula

Tabula is designed specifically to extract tabular data from PDFs; in Python you use it through tabula-py, a wrapper around the Java-based tabula-java, so a Java runtime is required. Tabula can pull the line-items section of an invoice, including product descriptions, quantities, and prices, straight into pandas DataFrames. Because it reads the PDF's text layer, it's a useful alternative to OCR for electronically generated invoices, but it can't read scanned ones.

4. Camelot

Camelot is another Python library for extracting tables from PDFs. It offers two parsing methods, lattice (for tables drawn with ruling lines) and stream (for tables aligned with whitespace), which gives you more control over complex table structures. Like Tabula, it works on text-based PDFs rather than scans, which makes it an alternative to OCR for extracting line items from electronically generated invoices.
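As a sketch, assuming Camelot is installed and the invoice is a text-based PDF: `camelot.read_pdf` returns a list of tables whose `.df` attribute is a pandas DataFrame, and each table's `parsing_report` summarizes how cleanly it parsed. The `pick_table` helper and its header keywords are hypothetical; since Camelot leaves the header text in the first row of each DataFrame, that row is where it looks.

```python
from typing import List, Optional

import pandas as pd


def read_tables(pdf_path: str, flavor: str = 'lattice') -> List[pd.DataFrame]:
    """Extract the tables on page 1 of a text-based PDF with Camelot.

    flavor='lattice' suits tables drawn with ruling lines; 'stream' suits
    tables aligned with whitespace only.
    """
    import camelot  # imported here so pick_table works without Camelot installed

    tables = camelot.read_pdf(pdf_path, pages='1', flavor=flavor)
    for table in tables:
        print(table.parsing_report)  # accuracy, whitespace, order, page
    return [table.df for table in tables]


def pick_table(frames, keywords=('description', 'amount')) -> Optional[pd.DataFrame]:
    """Return the first table whose first row contains every keyword (case-insensitive)."""
    for frame in frames:
        if frame.empty:
            continue
        header = ' '.join(str(cell) for cell in frame.iloc[0]).lower()
        if all(word in header for word in keywords):
            return frame
    return None
```

A typical call would be `pick_table(read_tables('sample-invoice.pdf'))`, switching to `flavor='stream'` if the invoice's table has no ruling lines.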

5. OpenCV

OpenCV is a popular computer vision library with Python bindings for analyzing and manipulating images. For invoices, it's typically used to clean up scans before OCR (converting to grayscale, thresholding, removing noise) and to locate or crop regions such as logos, headers, and footers, which improves the accuracy and reliability of OCR-based methods.
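A simple version of the region idea, assuming the logo and company details sit near the top of the page and totals or payment details near the bottom, is to split the page image (the NumPy array that `cv2.imread` returns) into horizontal bands and process each one separately. `split_bands` and its default fractions are illustrative guesses, not OpenCV functions.

```python
import numpy as np


def split_bands(image: np.ndarray, header_frac: float = 0.2, footer_frac: float = 0.15):
    """Split a page image (H x W or H x W x C array) into header, body and footer bands.

    The fractions are assumptions; tune them to where logos and totals sit
    on your invoices.
    """
    height = image.shape[0]
    top = int(height * header_frac)               # end of the header band
    bottom = height - int(height * footer_frac)   # start of the footer band
    return image[:top], image[top:bottom], image[bottom:]
```

For example, `header, body, footer = split_bands(cv2.imread('sample-invoice.jpg'))` gives three arrays you can pass to Tesseract or a logo-matching step one at a time (note that `cv2.imread` returns `None` if the file can't be read).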

6. Pillow

Pillow is a lighter-weight Python library for reading, writing, and manipulating image files. It can't interpret an invoice on its own, but it's handy for simple preparation steps before an image goes to OCR, such as converting to grayscale, resizing, cropping, or thresholding, and pytesseract accepts Pillow images directly.
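A minimal Pillow preprocessing sketch follows. The threshold of 160 and the 1500-pixel minimum width are starting guesses to tune on your own scans, not recommended values.

```python
from PIL import Image, ImageFilter, ImageOps


def prepare_for_ocr(image, threshold=160, min_width=1500):
    """Grayscale, upscale, denoise and binarize an invoice scan for OCR."""
    gray = ImageOps.grayscale(image)
    if gray.width < min_width:
        # Small text recognizes poorly; enlarge narrow scans, keeping the aspect ratio
        scale = min_width / gray.width
        gray = gray.resize((min_width, round(gray.height * scale)), Image.LANCZOS)
    denoised = gray.filter(ImageFilter.MedianFilter(size=3))
    # Pixels brighter than the threshold become white (255), the rest black (0)
    return denoised.point(lambda value: 255 if value > threshold else 0)
```

The result can go straight to OCR, e.g. `pytesseract.image_to_string(prepare_for_ocr(Image.open('sample-invoice.jpg')))`.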

It's important to note that while the libraries mentioned above are some of the most commonly used for extracting data from invoices, the process of extracting data from invoices can be complex and could require multiple techniques and tools.

Depending on the complexity of the invoice and the specific information you need to extract, you may need to use additional libraries and techniques beyond those mentioned here.
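To make the ingestion → extraction → validation → export pipeline mentioned earlier concrete, here's a minimal sketch in which each stage is a plain function. The record structure, the "Label: value" extraction rule, and the required fields are illustrative assumptions; in a real system, the extract stage is where pdftotext, Tesseract, Tabula, or Camelot would plug in.

```python
import json
import re
from dataclasses import dataclass, field


@dataclass
class InvoiceRecord:
    source: str   # where the document came from (file path, email ID, ...)
    text: str     # raw text from pdftotext, OCR, etc.
    fields: dict = field(default_factory=dict)
    errors: list = field(default_factory=list)


def ingest(source, text):
    """Ingestion: wrap the raw text with its origin."""
    return InvoiceRecord(source=source, text=text)


def extract(record):
    """Extraction: a placeholder that reads 'Label: value' lines into fields."""
    for label, value in re.findall(r'^([A-Za-z ]+):[ \t]*(.+)$', record.text, flags=re.MULTILINE):
        record.fields[label.strip().lower().replace(' ', '_')] = value.strip()
    return record


def validate(record, required=('invoice_number', 'total_due')):
    """Validation: note missing required fields instead of failing silently."""
    record.errors = [name for name in required if name not in record.fields]
    return record


def export(record):
    """Export: serialize fields and errors as JSON for a downstream system."""
    return json.dumps({'source': record.source, 'fields': record.fields, 'errors': record.errors})


def run_pipeline(source, text):
    return export(validate(extract(ingest(source, text))))


print(run_pipeline('invoice.pdf', 'Invoice Number: INV-3337\nTotal Due: $93.50\n'))
```

Keeping the stages separate means you can swap the extraction method per invoice type without touching validation or export.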

Now, before we dive into a real example of extracting invoices, let’s first discuss the process of preparing invoice data for extraction.


Preparing Invoice Data for Extraction

Preparing the data before extraction is an important step in the invoice processing pipeline, because raw invoice data often contains errors, inconsistencies, and other issues that reduce extraction accuracy. I've found that this step often determines whether your extraction succeeds or fails, especially when handling large volumes of invoices or messy unstructured data.

One key technique for preparing invoice data for extraction is data cleaning and preprocessing.

Data cleaning and preprocessing involves identifying and correcting errors, inconsistencies, and other issues in the data before the extraction process begins. This can involve a wide range of techniques, including:

- Data normalization: Transforming data into a common format that can be more easily processed and analyzed. This can involve standardizing the format of dates, times, and other data elements, as well as converting data into a consistent data type, such as numeric or categorical data.

- Text cleaning: Removing extraneous characters from the extracted text, such as OCR noise, stray symbols, and redundant whitespace. Be careful not to strip punctuation that carries meaning on an invoice, like decimal points in amounts or slashes in dates. Clean text makes downstream techniques such as regular expressions and NLP more reliable.

- Data validation: Involves checking the data for errors, inconsistencies, and other issues that may impact the accuracy of the extraction process. This can involve comparing the data to external sources, such as customer databases or product catalogs, to ensure that the data is accurate and up-to-date.

- Data augmentation: Adding or modifying data to improve the accuracy and reliability of the extraction process. This can involve bringing in related data sources, such as purchase orders or vendor records, to supplement the invoice data, or generating synthetic invoices to enlarge the training set of a machine learning model.
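A small sketch of normalization and text cleaning using only the standard library. It assumes US-style amounts (comma thousands separators, dot decimals) and a fixed list of date formats; note that `'%d/%m/%Y'` reads 01/02/2016 as 1 February, so swap it for `'%m/%d/%Y'` if your vendors write the month first. Amounts become `Decimal` rather than `float` so currency arithmetic stays exact. The function names are illustrative.

```python
import re
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Date layouts we expect to see (assumption); extend this for your vendors
DATE_FORMATS = ('%B %d, %Y', '%d/%m/%Y', '%Y-%m-%d', '%d %b %Y')


def normalize_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    cleaned = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def normalize_amount(raw):
    """Turn '$1,250.00' into Decimal('1250.00'); None if it isn't a number."""
    cleaned = re.sub(r'[^\d.\-]', '', raw)  # keep digits, decimal point, minus sign
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


def clean_text(raw):
    """Drop non-printable OCR debris and collapse runs of whitespace."""
    printable = ''.join(ch for ch in raw if ch.isprintable() or ch.isspace())
    return re.sub(r'\s+', ' ', printable).strip()
```

For example, `normalize_date('January 25, 2016')` gives `'2016-01-25'` and `normalize_amount('$93.50')` gives `Decimal('93.50')`.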

Extracting Data from Invoices Using Python

Now that we've covered preparation, let's get into the actual extraction process. I'll show you how to combine different Python techniques to handle various invoice formats. While no single approach works for every invoice, we'll start with some fundamental methods that work well for standard electronic invoices.

For example, to extract tables from PDF invoices, you can use the tabula-py library, which extracts data from tables in PDFs. By providing the area of the PDF page where the table is located, you can extract the table and manipulate it using the Pandas library.
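A sketch of the area-based approach, assuming tabula-py 2.x (and therefore Java) is installed. `read_pdf`'s `area` argument is `[top, left, bottom, right]` in PDF points, measured from the top-left corner of the page, where 72 points make an inch. The helper names and the example box are placeholders, not coordinates for any particular invoice.

```python
def area_from_inches(top, left, bottom, right):
    """Convert a box measured in inches into tabula's [top, left, bottom, right] points."""
    # PDF coordinates use points: 72 points = 1 inch
    return [round(value * 72, 2) for value in (top, left, bottom, right)]


def read_line_items(pdf_path, area, page=1):
    """Read the single table inside `area` on `page`; returns a DataFrame or None."""
    from tabula import read_pdf  # needs Java; imported here so the helper above works without it

    tables = read_pdf(pdf_path, pages=page, area=area, multiple_tables=False)
    return tables[0] if tables else None


# Placeholder box: 4 to 8 inches from the top, 0.5 to 8 inches from the left
LINE_ITEMS_AREA = area_from_inches(4, 0.5, 8, 8)
```

Calling `read_line_items('sample-invoice.pdf', LINE_ITEMS_AREA)` returns a pandas DataFrame that you can then clean and validate with the usual Pandas operations.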

On the other hand, invoices that weren't generated electronically, such as scanned or photographed ones, have no text layer to read. They require OCR, often combined with computer vision and machine learning, to recognize the relevant regions of the invoice and extract data from them.
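Before choosing between the two routes, it helps to check whether a PDF has a usable text layer at all. This sketch uses pypdf, a library not otherwise covered in this guide (pdftotext would work just as well), and treats a PDF that yields fewer than 20 characters of text as a scan; that cut-off is an arbitrary assumption.

```python
def choose_route(extracted_text, min_chars=20):
    """Return 'text' if a PDF text extractor found real text, else 'ocr'."""
    return 'text' if len(extracted_text.strip()) >= min_chars else 'ocr'


def route_pdf(pdf_path):
    """Decide whether a PDF can be parsed directly or needs OCR."""
    from pypdf import PdfReader  # imported here so choose_route works without pypdf

    reader = PdfReader(pdf_path)
    text = '\n'.join(page.extract_text() or '' for page in reader.pages)
    return choose_route(text)
```

Born-digital invoices routed to `'text'` can go to pdftotext, Tabula, or Camelot; the rest go to the OCR path.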

One of the advantages of using machine learning for invoice extraction is that the algorithms learn from training data. Once a model has been trained, it can extract data from new invoices, including layouts it hasn't seen before, without hand-written rules for each vendor. How accurate it is on unfamiliar layouts still depends on how well the training data covers them.

Extracting with Regular Expressions

Let's start with one of the most reliable methods for extracting specific fields: regular expressions. We'll extract common invoice fields like numbers and dates using this approach.

Step 1: Import libraries

To extract information from the invoice text, we use regular expressions and the pdftotext library to read data from PDF invoices.

```python
import pdftotext
import re
```

Step 2: Read the PDF

We first read the PDF invoice using Python's built-in open() function. The 'rb' argument opens the file in binary mode, which is required for reading binary files like PDFs. We then use the pdftotext library to extract the text content from the PDF file.

```python
with open('invoice.pdf', 'rb') as f:
    pdf = pdftotext.PDF(f)

text = '\n\n'.join(pdf)
```

Step 3: Use regular expressions to match the text on invoices

We use regular expressions to extract the invoice number, total amount due, invoice date, and due date from the invoice text. Each pattern looks for a label, a blank line, and then the value, which is how pdftotext lays out this particular invoice. We compile each pattern with re.compile(), call its search() method to find the first occurrence in the text, take the captured value with group(1), and use strip() to remove any leading or trailing whitespace. If a label isn't found, the corresponding value is set to None.

```python
def find_field(label, text):
    """Return the value that follows `label` on the invoice, or None if it's missing."""
    # Label, then a blank line, then the value on its own line
    pattern = re.compile(re.escape(label) + r'\s*\n\s*\n(.+?)\s*\n')
    match = pattern.search(text)
    return match.group(1).strip() if match else None


invoice_number = find_field('Invoice Number', text)
total_amount_due = find_field('Total Due', text)
invoice_date = find_field('Invoice Date', text)
due_date = find_field('Due Date', text)
```

Step 4: Printing the data

Lastly, we print all the data that’s extracted from the invoice.

```python
print('Invoice Number:', invoice_number)
print('Total Amount Due:', total_amount_due)
print('Invoice Date:', invoice_date)
print('Due Date:', due_date)
```

Output

```
Invoice Number: INV-3337
Total Amount Due: $93.50
Invoice Date: January 25, 2016
Due Date: January 31, 2016
```

Note that the approach described here is specific to the structure and format of the example invoice. While it works well for standardized invoices, real-world documents rarely follow consistent patterns. In my experience, you'll often need to combine these basic techniques with more advanced methods like named entity recognition (NER) or key-value pair extraction, depending on your specific requirements. The key is to start simple and add complexity only where needed.
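As a first step up from a single pattern per field, the sketch below lists several label variants per field and accepts the value either on the same line (after an optional colon) or on a following line. The label lists and the `FIELD_LABELS`/`extract_fields` names are hypothetical; build the lists from the invoices you actually receive.

```python
import re

# Label variants per field (illustrative; extend from your own invoices)
FIELD_LABELS = {
    'invoice_number': ['Invoice Number', 'Invoice No.', 'Invoice #', 'Inv No'],
    'invoice_date': ['Invoice Date', 'Date of Issue'],
    'due_date': ['Due Date', 'Payment Due'],
    'total_due': ['Total Due', 'Amount Due', 'Balance Due'],
}


def build_pattern(labels):
    """Any label, an optional colon, then the value on the same or a later line."""
    alternatives = '|'.join(re.escape(label) for label in labels)
    return re.compile('(?:' + alternatives + r')[ \t]*:?[ \t]*\n?\s*([^\n]+)', re.IGNORECASE)


def extract_fields(text):
    """Return a dict with one entry per field; None where no label matched."""
    results = {}
    for name, labels in FIELD_LABELS.items():
        match = build_pattern(labels).search(text)
        results[name] = match.group(1).strip() if match else None
    return results
```

Running `extract_fields(text)` on the pdftotext output from Step 2 covers the label-then-blank-line layout of the sample invoice as well as the more common "Label: value" layout.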

Extracting Tables from Invoices

Extracting tables from electronically generated PDF invoices can be straightforward with libraries such as Tabula and Camelot. The following code uses tabula-py to extract every table in a PDF invoice and the tabulate package to print them.

```python
from tabula import read_pdf
from tabulate import tabulate

file = "sample-invoice.pdf"

# read_pdf returns a list of pandas DataFrames, one per detected table
tables = read_pdf(file, pages="all")

print(tabulate(tables[0]))
print(tabulate(tables[1]))
```

Output

```
-  ------------  ----------------
0  Order Number  12345
1  Invoice Date  January 25, 2016
2  Due Date      January 31, 2016
3  Total Due     $93.50
-  ------------  ----------------

-  -  -------------------------------  ------  -----  ------
0  1  Web Design                       $85.00  0.00%  $85.00
      This is a sample description...
-  -  -------------------------------  ------  -----  ------
```

If you need specific columns from an invoice, or the invoice contains multiple tables with varying formats, you'll usually need some post-processing (selecting, renaming, and cleaning columns) to get the output you want. For scanned invoices, or layouts these libraries can't parse, computer vision and optical character recognition (OCR) are the next step, because they work from the page image rather than the PDF's text layer.
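As an example of that post-processing, a two-column label/value table like the first one in the output above can be folded into a dict. The helper is hypothetical and assumes labels sit in the first column; if `read_pdf` promotes the first row to column headers, passing `pandas_options={'header': None}` keeps it as data.

```python
import pandas as pd


def table_to_fields(frame: pd.DataFrame) -> dict:
    """Turn a label/value table into {label: value}, skipping incomplete rows."""
    pairs = frame.iloc[:, :2].dropna()  # first column = label, second = value
    return {str(label).strip(): str(value).strip() for label, value in pairs.itertuples(index=False)}
```

With the sample invoice, `table_to_fields(tables[0])` would map "Order Number", "Invoice Date", "Due Date", and "Total Due" to their values.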

Identifying Invoice Layouts to Apply OCR

When it comes to handling different invoice layouts, OCR becomes our primary tool. Let's see how to combine Tesseract with OpenCV to identify and extract data from different regions of an invoice.

Step 1: Import necessary libraries

First, we import the necessary libraries: OpenCV (cv2) for image processing and pytesseract for OCR. We also import the Output class from pytesseract to specify the output format of the OCR results.

```python
import cv2
import pytesseract
from pytesseract import Output
```

Step 2: Read the sample invoice image

We then read the sample invoice image sample-invoice.jpg using cv2.imread() and store it in the img variable.

```python
img = cv2.imread('sample-invoice.jpg')
```

Step 3: Perform OCR on the image and obtain the results in dictionary format

Next, we use pytesseract.image_to_data() to perform OCR on the image and obtain a dictionary of information about the detected text. The output_type=Output.DICT argument specifies that we want the results in dictionary format.

We then print the keys of the resulting dictionary using the keys() function to see the available information that we can extract from the OCR results.

```python
d = pytesseract.image_to_data(img, output_type=Output.DICT)

# Print the keys of the resulting dictionary to see the available information
print(d.keys())
```

Step 4: Visualize the detected text by plotting bounding boxes

To visualize the detected text, we plot a bounding box around each detected word using the information in the dictionary. image_to_data() returns one entry per detected element, so we loop over len(d['text']) entries. For each entry whose confidence score is greater than 60 (that is, text that is more likely to be recognized correctly), we retrieve the bounding box and draw a rectangle around it with cv2.rectangle(). Finally, we display the image with cv2.imshow(), wait for a key press, and close the window.

```python
n_boxes = len(d['text'])
for i in range(n_boxes):
    # Keep only words with a confidence score above 60 (non-word entries report -1)
    if float(d['conf'][i]) > 60:
        (x, y, w, h) = (d['left'][i], d['top'][i], d['width'][i], d['height'][i])
        img = cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)

cv2.imshow('img', img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
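Bounding boxes show where text is, but identifying the layout also means knowing which words belong together. Besides the coordinates used above, the dictionary from image_to_data() includes block_num, par_num, and line_num for every entry. The sketch below (the `group_lines` helper is hypothetical, and its confidence cut-off simply mirrors the 60 used above) groups words that share those three numbers into lines, ordered from top to bottom, which is a starting point for locating the header, line-item, and totals regions.

```python
from collections import defaultdict


def group_lines(data, min_conf=60):
    """Rebuild text lines from the dict returned by pytesseract.image_to_data.

    Words sharing the same block_num, par_num and line_num are on one line.
    Returns (top, text) tuples ordered from the top of the page down.
    """
    lines = defaultdict(list)
    for i, word in enumerate(data['text']):
        # Skip empty entries and low-confidence words
        if not word.strip() or float(data['conf'][i]) <= min_conf:
            continue
        key = (data['block_num'][i], data['par_num'][i], data['line_num'][i])
        lines[key].append((data['left'][i], data['top'][i], word))

    result = []
    for words in lines.values():
        words.sort()  # tuples sort by 'left' first, i.e. left to right
        line_top = min(y for _, y, _ in words)
        result.append((line_top, ' '.join(w for _, _, w in words)))
    return sorted(result)
```

Calling `group_lines(d)` on the dictionary from Step 3 returns the page as ordered lines of text, which you can then scan for labels such as "Invoice Number" or "Total Due".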

Invoice Extraction using NER

Named Entity Recognition (NER) is a natural language processing technique that can be used to extract structured information from unstructured text. In the context of invoice extraction, NER can be used to identify key entities such as invoice numbers, dates, and amounts.

One popular NLP library that includes NER functionality is spaCy. spaCy provides pre-trained models for NER in several languages, including English. Here's an example of how to use spaCy to extract information from an invoice:

Step 1: Import spaCy and load pre-trained model

In this example, we first load the pre-trained English model with NER using the spacy.load() function.

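```python
import spacy

# Load the English pre-trained model with NER
nlp = spacy.load('en_core_web_sm')
# en_core_web_sm has no INVOICE_NUMBER label, so add a rule for "INV-<digits>" (assumed format)
ruler = nlp.add_pipe('entity_ruler', before='ner')
ruler.add_patterns([{'label': 'INVOICE_NUMBER', 'pattern': [{'TEXT': {'REGEX': r'^INV-\d+$'}}]}])
```

Step 2: Read the PDF invoice's text and apply the NER model to it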

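We then extract the text from the PDF invoice with pdftotext, as in the regular expression example, and apply the NER model to it with the nlp() function. Opening a PDF in text mode and calling read() would return raw PDF data (or fail with a decoding error), not the invoice's text.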

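```python
import pdftotext

# A PDF is a binary file; pdftotext pulls out its text layer page by page
with open('invoice.pdf', 'rb') as f:
    text = '\n\n'.join(pdftotext.PDF(f))

# Apply the NER model to the invoice text
doc = nlp(text)
```

Step 3: Extract invoice number, date, and total amount due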

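We then iterate over the detected entities in the invoice text. For each entity, we check its label_ attribute together with the few tokens that precede it: a DATE preceded by "invoice" is taken as the invoice date, and a MONEY entity preceded by "total" as the total amount due. The entity text itself rarely contains those words, so the surrounding context is what identifies the field. INVOICE_NUMBER is not a label in spaCy's pre-trained English models; it only appears if you add it, for example with an EntityRuler pattern or a custom-trained model.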

```python
invoice_number = None
invoice_date = None
total_amount_due = None

for ent in doc.ents:
    # The tokens just before an entity usually hold its label, e.g. "Invoice Date"
    context = doc[max(ent.start - 4, 0):ent.start].text.lower()
    if ent.label_ == 'INVOICE_NUMBER':
        # Custom label: en_core_web_sm doesn't produce it on its own
        invoice_number = ent.text.strip()
    elif ent.label_ == 'DATE' and 'invoice' in context and invoice_date is None:
        invoice_date = ent.text.strip()
    elif ent.label_ == 'MONEY' and 'total' in context:
        total_amount_due = ent.text.strip()
```

Step 4: Print the extracted information

Finally, we print the extracted information to the console for verification. From my testing, this approach works best with consistent invoice formats. You'll likely need to fine-tune the model or add rules for your specific invoice types, but it provides a solid foundation for handling invoices whose wording varies.

```python
print('Invoice Number:', invoice_number)
print('Invoice Date:', invoice_date)
print('Total Amount Due:', total_amount_due)
```

In the next section, let's discuss some of the common challenges and solutions for automated invoice extraction.

Common Challenges and Solutions

Even with all these tools at our disposal, invoice extraction isn't always smooth sailing. Here are the most common challenges you'll encounter and practical ways to address them:

1. Inconsistent formats

Invoices can come in various formats, including paper, PDF, and email, which can make it challenging to extract and process data consistently. Additionally, the structure of the invoice may not always be the same, which can cause issues with data extraction.

2. Poor quality scans

Low-quality scans or scans with skewed angles can lead to errors in data extraction. To improve the accuracy of data extraction, businesses can use image preprocessing techniques such as deskewing, binarization, and noise reduction to improve the quality of the scan.

3. Different languages and font sizes

Invoices from international vendors may be in different languages, and invoices may also use different font sizes and styles; both can reduce the accuracy of data extraction. OCR engines handle languages through language-specific models: Tesseract, for example, accepts one or more installed language packs through its lang option (such as 'eng+fra'). Machine learning models trained on varied invoices can further improve accuracy across languages, fonts, and layouts.
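A small helper for the Tesseract side of this, assuming a recent pytesseract version that provides `get_languages()`: it checks that the language packs you want are installed and builds the "eng+fra" style string that the `lang` argument expects, failing early with a clear message that lists every missing pack. The helper name is hypothetical.

```python
def build_lang_arg(wanted, available=None):
    """Join Tesseract language codes into the 'eng+deu' form used by lang=.

    If available is None, ask the local Tesseract install which packs exist.
    """
    if available is None:
        import pytesseract  # only needed when querying the real install
        available = pytesseract.get_languages(config='')
    missing = [code for code in wanted if code not in available]
    if missing:
        raise ValueError(f'Tesseract language data not installed: {missing}')
    return '+'.join(wanted)
```

Usage would look like `pytesseract.image_to_string(image, lang=build_lang_arg(['eng', 'fra']))`.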

4. Complex invoice structures

Invoices may contain complex structures such as nested tables, descriptions that wrap across several rows, or mixed data types in one column, which can be difficult to extract and process. Libraries such as Pandas help here: once a table has been extracted, you can merge split rows, reshape nested data, and convert each column to the right type.
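One concrete case is a description that wraps onto a second row whose other cells come back empty. The hypothetical helper below folds such rows into the previous line item; it assumes that an empty key column (here, quantity) marks a continuation row.

```python
import pandas as pd


def merge_continuation_rows(frame: pd.DataFrame, key_column: str, text_column: str) -> pd.DataFrame:
    """Fold rows whose key column is empty into the previous row's text column."""
    merged = []
    for row in frame.to_dict('records'):
        key = row[key_column]
        is_continuation = pd.isna(key) or str(key).strip() == ''
        if is_continuation and merged:
            extra = row[text_column]
            if pd.notna(extra) and str(extra).strip():
                merged[-1][text_column] = f'{merged[-1][text_column]} {str(extra).strip()}'
        else:
            # A real line item (or a leading continuation row with nothing to attach to)
            merged.append(row)
    return pd.DataFrame(merged, columns=frame.columns)
```

For example, a "Web Design" row followed by a row holding only "This is a sample description..." becomes a single line item with the combined description.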

5. Integration with other systems (ERPs)

Extracted data from invoices often needs to be integrated with other systems, such as accounting or enterprise resource planning (ERP) software, which can add an extra layer of complexity to the process. To overcome this challenge, businesses can use APIs or database connectors to integrate the extracted data with other systems.
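For the database-connector route, here's a sketch that uses Python's built-in sqlite3 module as a stand-in for whatever database your ERP reads from. The table layout and the `save_invoices` name are hypothetical; match them to your ERP's import format.

```python
import sqlite3


def save_invoices(connection, invoices):
    """Write extracted invoices (a list of dicts) to a staging table.

    INSERT OR REPLACE keyed on invoice_number makes re-running a batch idempotent.
    """
    with connection:  # commit on success, roll back on error
        connection.execute(
            'CREATE TABLE IF NOT EXISTS invoices ('
            'invoice_number TEXT PRIMARY KEY, invoice_date TEXT, total_due TEXT)'
        )
        connection.executemany(
            'INSERT OR REPLACE INTO invoices (invoice_number, invoice_date, total_due) '
            'VALUES (:invoice_number, :invoice_date, :total_due)',
            invoices,
        )
```

For example, `save_invoices(sqlite3.connect('invoices.db'), [{'invoice_number': 'INV-3337', 'invoice_date': '2016-01-25', 'total_due': '93.50'}])` stores one row; processing the same invoice again updates that row instead of duplicating it.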

Handling these challenges gets easier with experience, but scaling to process thousands of invoices daily requires more robust solutions. That's where automated platforms like Nanonets make a difference.

Automated invoice data extraction with Nanonets

With Nanonets, you can easily create and train machine learning models for invoice data extraction using an intuitive web-based GUI.

You can access cloud-hosted models that use state-of-the-art algorithms to provide you with accurate results, without worrying about getting a GCP instance or GPUs for training.

The Nanonets OCR API allows you to build OCR models with ease. You don't have to worry about pre-processing your images, matching templates, or building rule-based engines to increase the accuracy of your OCR model.

You can upload your data, annotate it, set the model to train, and wait for predictions through a browser-based UI without writing a single line of code, worrying about GPUs, or finding the right architectures for your deep learning models. You can also retrieve the JSON response for each prediction to integrate it with your own systems and build machine learning-powered apps on state-of-the-art algorithms and a strong infrastructure.

Using the GUI: head over to nanonets.com

You can also use the Nanonets-OCR API by following the steps below:

Step 1: Clone the Repo, Install dependencies

git clone https://github.com/NanoNets/nanonets-ocr-sample-python.git

cd nanonets-ocr-sample-python

sudo pip install requests tqdm

Step 2: Get your free API Key

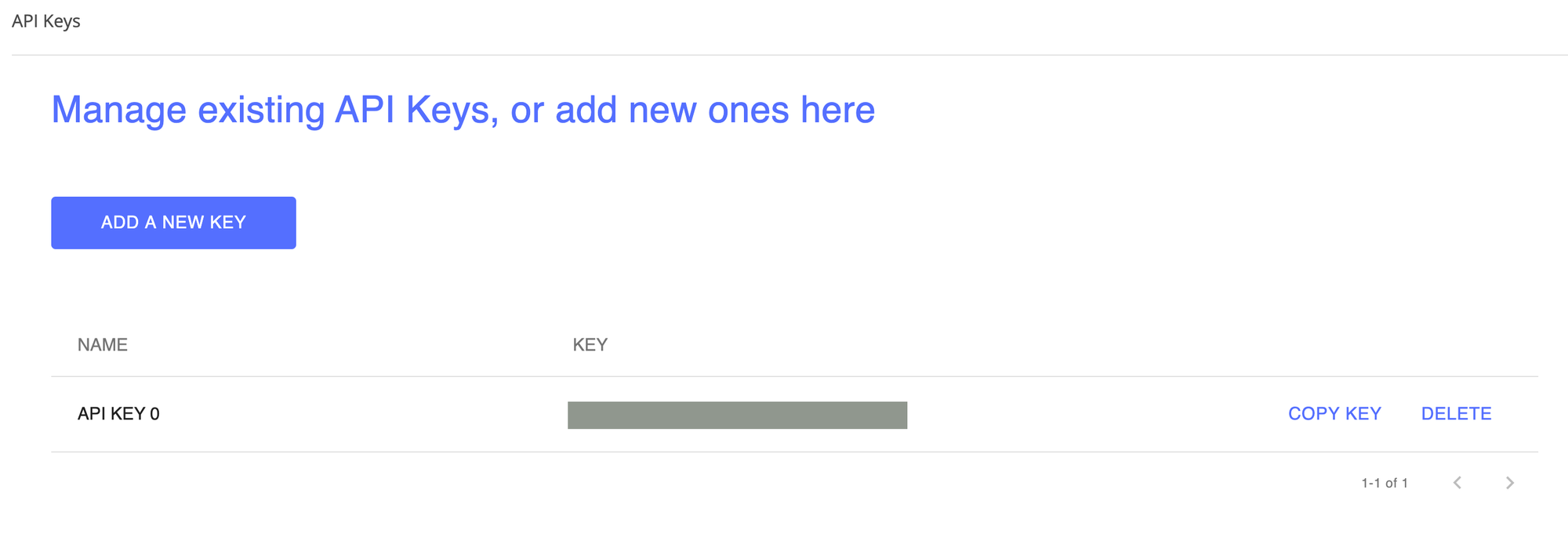

Get your free API Key from here

Step 3: Set the API key as an Environment Variable

export NANONETS_API_KEY=YOUR_API_KEY_GOES_HERE

Step 4: Create a New Model

python ./code/create-model.py

Note: This generates a MODEL_ID that you need for the next step

Step 5: Add Model Id as Environment Variable

export NANONETS_MODEL_ID=YOUR_MODEL_ID

Note: you will get YOUR_MODEL_ID from the previous step

Step 6: Upload the Training Data

The training data is in the images directory (image files) and the annotations directory (annotations for those image files)

python ./code/upload-training.py

Step 7: Train Model

Once the images have been uploaded, begin training the model

python ./code/train-model.py

Step 8: Get Model State

The model takes ~2 hours to train. You will get an email once the model is trained. In the meantime, you can check the state of the model

python ./code/model-state.py

Step 9: Make Prediction

Once the model is trained, you can make predictions with it

python ./code/prediction.py ./images/151.jpg


Summary

Invoice data extraction is a critical process for businesses that deal with a high volume of invoices. Accurately extracting data from invoices can significantly reduce errors, streamline payment processing, and ultimately improve your bottom line.

Python is a powerful tool that can simplify and automate the invoice data extraction process. Its versatility and numerous libraries make it an ideal choice for businesses looking to improve their invoice data extraction capabilities.

Moreover, with Nanonets, you can streamline your invoice data extraction process even further. Our easy-to-use platform offers a range of features, including an intuitive web-based GUI, cloud-hosted models, state-of-the-art algorithms, and field extraction made easy.

So, if you're looking for an efficient and cost-effective solution for invoice data extraction, look no further than Nanonets. Sign up for our service today and start optimizing your business processes!