If you're drowning in documents (and let's face it, who isn't?), you've probably realized that traditional OCR is like bringing a knife to a gunfight. Sure, it can read text, but it has no clue that the number sitting next to "Total Due" is probably more important than "Page 2 of 47."

That's where LayoutLM comes in – it is the answer to the age-old question: "What if we taught AI to actually understand documents instead of just reading them like a confused first-grader?"

What makes LayoutLM different from your legacy OCR

We've all been there. You feed a perfectly good invoice into an OCR system, and it spits back a text soup that would make alphabet soup jealous. The problem? Traditional OCR treats documents like they're just walls of text, completely ignoring that beautiful spatial arrangement that humans use to make sense of information.

LayoutLM takes a fundamentally different approach. Instead of just extracting text, it understands three critical aspects of any document:

- The actual text content (what the words say)

- The spatial layout (where things are positioned)

- The visual features (how things look)

Think of it this way: if traditional OCR is like reading a book with your eyes closed, LayoutLM is like having a conversation with someone who actually understands document design. It knows that in an invoice, the big bold number at the bottom right is probably the total, and those neat rows in the middle? That's your line items talking.

Unlike previous text-only models like BERT, LayoutLM adds two crucial pieces of information: 2D position (where the text is) and visual cues (what the text looks like). Before LayoutLM, AI would read a document as one long string of words, completely blind to the visual structure that gives the text its meaning.

How LayoutLM actually works

Imagine how you read an invoice. You don't just see a jumble of words; you see that the vendor's name is in large font at the top, the line items are in a neat table, and the total amount is at the bottom right. The position is critical. LayoutLM was the first major model designed to read this way, successfully combining text, layout, and image information into a singular model.

Text embeddings

At its core, LayoutLM starts with BERT-based text embeddings. If BERT is new to you, think of it as the Shakespeare of language models – it understands context, nuance, and relationships between words. But while BERT stops at understanding language, LayoutLM is just getting warmed up.

Spatial embeddings

Here's where things get interesting. LayoutLM adds spatial embeddings that capture the 2D position of every single token on the page. The LayoutLMv1 model, specifically used the four corner coordinates of a word's bounding box (x0,y0,x1,y1). The inclusion of width and height as direct embeddings was an enhancement introduced in LayoutLMv2.

Visual features

LayoutLMv2 and v3 took things even further by incorporating actual visual features. Using either ResNet (v2) or patch embeddings similar to Vision Transformers (v3), these models can literally "see" the document. Bold text? Different fonts? Company logos? Color coding? LayoutLM notices all of it.

The LayoutLM trilogy and the AI universe it created

The original LayoutLM series established the core technology. By 2025, this has evolved into a new era of more powerful and universal AI models.

The foundational trilogy (2020-2022):

- LayoutLMv1: The pioneer that first combined text and layout information. It used a Masked Visual-Language Model (MVLM) objective, where it learned to predict masked words using both the text and layout context.

- LayoutLMv2: The sequel that integrated visual features directly into the pre-training process. It also added new training objectives like Text-Image Alignment and Text-Image Matching to create a tighter vision-language connection.

- LayoutLMv3: The final act that streamlined the architecture with a more efficient design, achieving better performance with less complexity.

The new era (2023-2025):

After the LayoutLM trilogy, its principles became standard, and the field exploded.

- Shift to universal models: We saw the emergence of models like Microsoft's UDOP (Universal Document Processing), which unifies vision, text, and layout into a single, powerful transformer capable of both understanding and generating documents.

- The rise of vision foundation models: The game changed again with models like Microsoft's Florence-2, a versatile vision model that can handle a massive range of tasks—including OCR, object detection, and complex document understanding—all through a unified, prompt-based interface.

- The impact of general multimodal AI: Perhaps the biggest shift has been the arrival of massive, general-purpose models like GPT-4, Claude, and Gemini. These models demonstrate astounding "zero-shot" capabilities, where you can show them a document and simply ask a question to get an answer, often without any specialized training.

Where can AI like LayoutLM be applied?

The technology underpinning LayoutLM is versatile and has been successfully applied to a wide range of use cases, including:

Invoice processing

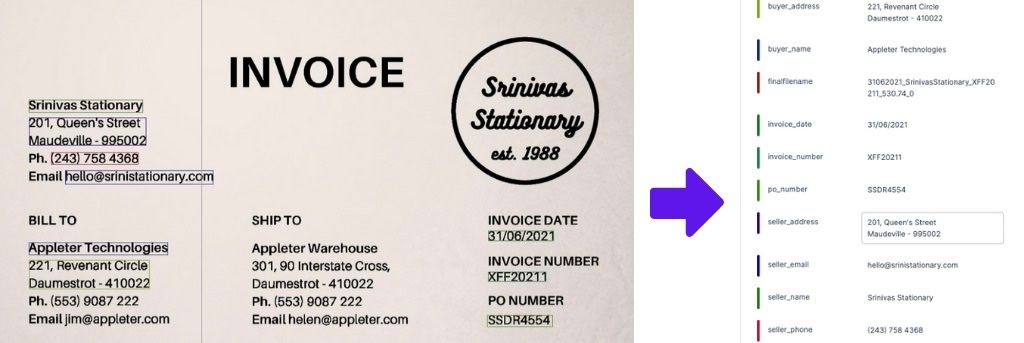

Remember the last time you had to manually enter invoice data? Yeah, we're trying to forget too. With LayoutLM integrated into Nanonets' invoice processing solution, we can automatically extract:

- Vendor information (even when their logo is the size of a postage stamp)

- Line items (yes, even those pesky multi-line descriptions)

- Tax calculations (because math is hard)

- Payment terms (buried in that fine print you never read)

One of our customers in procurement shared with us that they increased their processing volume from 50 invoices a day to 500. That's not a typo – that's the power of understanding layout.

Receipt processing

Receipts are the bane of expense reporting. They're crumpled, faded, and formatted by what seems like a random number generator. But LayoutLM doesn't care. It can extract:

- Merchant details (even from that hole-in-the-wall restaurant)

- Individual items with prices (yes, even that complicated Starbucks order)

- Tax breakdowns (for your accounting team's sanity)

- Payment methods (corporate card vs. personal)

Contract analysis

Legal documents are where LayoutLM really shines. It understands:

- Clause hierarchies (Section 2.3.1 is under Section 2.3, which is under Section 2)

- Signature blocks (who signed where and when)

- Tables of terms and conditions

- Cross-references between sections

Forms processing

Whether it's insurance claims, loan applications, or government forms, LayoutLM handles them all. The model understands:

- Checkbox states (checked, unchecked, or that weird half-check)

- Handwritten entries in form fields

- Multi-page forms with continued sections

- Complex table structures with merged cells

Why LayoutLM? The leap to multimodal understanding

How does a deep learning model learn to correctly assign labels to text? Before LayoutLM, several approaches existed, each with its own limitations:

- Text-only models: Using text embeddings from large language models like BERT is not very effective on its own, as it ignores the rich contextual clues provided by the document's layout.

- Image-based models: Computer vision models like Faster R-CNN can use visual information to detect text blocks but don't fully utilize the semantic content of the text itself.

- Graph-based models: These models combine textual and locational information but often neglect the visual cues present in the document image.

LayoutLM was one of the first models to successfully combine all three dimensions of information—text, layout (location), and image—into a singular, powerful framework. It achieved this by extending the proven architecture of BERT to understand not just what the words are, but where they are on the page and what they look like.

LayoutLM tutorial: How it works

This section breaks down the core components of the original LayoutLM model.

1. OCR Text and Bounding Box extraction

The first step in any LayoutLM pipeline is to process a document image with an OCR engine. This process extracts two crucial pieces of information: the text content of the document and the location of each word, represented by a "bounding box." A bounding box is a rectangle defined by coordinates (e.g., top-left and bottom-right corners) that encapsulates a piece of text on the page.

2. Language and location embeddings

LayoutLM is built on the BERT architecture, a powerful Transformer model. The key innovation of LayoutLM was adding new types of input embeddings to teach this language model how to understand 2D space:

- Text Embeddings: Standard word embeddings that represent the semantic meaning of each token (word or sub-word).

- 1D Position Embeddings: Standard positional embeddings used in BERT to understand the sequence order of words.

- 2D Position Embeddings: This is the breakthrough feature. For each word, its bounding box coordinates (x0,y0,x1,y1) are normalized to a 1000x1000 grid and passed through four separate embedding layers. These spatial embeddings are then added to the text and 1D position embeddings. This allows the model's self-attention mechanism to learn that words that are visually close are often semantically related, enabling it to understand structures like forms and tables without explicit rules.

3. Image embeddings

To incorporate visual and stylistic features like font type, color, or emphasis, LayoutLM also introduced an optional image embedding. This was generated by applying a pre-trained object detection model (Faster R-CNN) to the image regions corresponding to each word. However, this feature added significant computational overhead and was found to have a limited impact on some tasks, so it was not always used.

4. Pre-training LayoutLM

To learn how to fuse these different modalities, LayoutLM was pre-trained on the IIT-CDIP Test Collection 1.0, a massive dataset of over 11 million scanned document images from U.S. tobacco industry lawsuits. This pre-training used two main objectives:

- Masked Visual-Language Model (MVLM): Similar to BERT's Masked Language Model, some text tokens are randomly masked. The model must predict the original token using the surrounding context. Crucially, the 2D position embedding of the masked word is kept, forcing the model to learn from both linguistic and spatial clues.

- Multi-label Document Classification (MDC): An optional task where the model learns to classify documents into categories (e.g., "letter," "memo") using the document labels from the IIT-CDIP dataset. This was intended to help the model learn document-level representations. However, later work found this could sometimes hurt performance on information extraction tasks and it was often omitted.

5. Fine-tuning for downstream tasks

After pre-training, the LayoutLM model can be fine-tuned for specific tasks, where it has set new state-of-the-art benchmarks:

- Form understanding (FUNSD dataset): This involves assigning labels (like question, answer, header) to text blocks.

- Receipt understanding (SROIE dataset): This focuses on extracting specific fields from scanned receipts. .

- Document image classification (RVL-CDIP dataset): This task involves classifying an entire document image into one of 16 categories.

Using LayoutLM with Hugging Face

One of the primary reasons for LayoutLM's popularity is its availability on the Hugging Face Hub, which makes it significantly easier for developers to use. The transformers library provides pre-trained models, tokenizers, and configuration classes for LayoutLM.

To fine-tune LayoutLM for a custom task, you typically need to:

- Install Libraries: Ensure you have torch and transformers installed.

- Prepare Data: Process your documents with an OCR engine to get words and their normalized bounding boxes (scaled to a 0-1000 range).

- Tokenize and Align: Use the LayoutLMTokenizer to convert text into tokens. A key step is to ensure that each token is aligned with the correct bounding box from the original word.

- Fine-tune: Use the LayoutLMForTokenClassification class for tasks like NER (labeling text) or LayoutLMForSequenceClassification for document classification. The model takes input_ids, attention_mask, token_type_ids, and the crucial bbox tensor as input.

It's important to note that the base Hugging Face implementation of the original LayoutLM does not include the visual feature embeddings from the Faster R-CNN model; that capability was more deeply integrated in LayoutLMv2.

How LayoutLM is used: Fine-tuning for downstream tasks

LayoutLM's true power is unlocked when it's fine-tuned for specific business tasks. Because it's available on Hugging Face, developers can get started relatively easily. The main tasks include:

- Form understanding (text labeling): This involves linking a label, like "Invoice Number," to a specific piece of text in a document. This is treated as a token classification task.

- Document image classification: This involves categorizing an entire document (e.g., as an "invoice" or "purchase order") based on its combined text, layout, and image features.

For developers looking to see how this works in practice, here are some examples using the Hugging Face transformers library.

Example: LayoutLM for text labeling (form understanding)

To assign labels to different parts of a document, you use the LayoutLMForTokenClassification class. The code below shows the basic setup.

from transformers import LayoutLMTokenizer, LayoutLMForTokenClassification

import torch

tokenizer = LayoutLMTokenizer.from_pretrained("microsoft/layoutlm-base-uncased")

model = LayoutLMForTokenClassification.from_pretrained("microsoft/layoutlm-base-uncased")

words = ["Hello", "world"]

# Bounding boxes must be normalized to a 0-1000 scale

normalized_word_boxes = [[637, 773, 693, 782], [698, 773, 733, 782]]

token_boxes = []

for word, box in zip(words, normalized_word_boxes):

word_tokens = tokenizer.tokenize(word)

token_boxes.extend([box] * len(word_tokens))

# Add bounding boxes for special tokens

token_boxes = [[0, 0, 0, 0]] + token_boxes + [[1000, 1000, 1000, 1000]]

encoding = tokenizer(" ".join(words), return_tensors="pt")

input_ids = encoding["input_ids"]

attention_mask = encoding["attention_mask"]

bbox = torch.tensor([token_boxes])

# Example labels (e.g., 1 for a field, 0 for not a field)

token_labels = torch.tensor([1, 1, 0, 0]).unsqueeze(0)

outputs = model(

input_ids=input_ids,

bbox=bbox,

attention_mask=attention_mask,

labels=token_labels,

)

loss = outputs.loss

logits = outputs.logits

Example: LayoutLM for document classification

To classify an entire document, you use the LayoutLMForSequenceClassification class, which uses the final representation of the [CLS] token for its prediction.

from transformers import LayoutLMTokenizer, LayoutLMForSequenceClassification

import torch

tokenizer = LayoutLMTokenizer.from_pretrained("microsoft/layoutlm-base-uncased")

model = LayoutLMForSequenceClassification.from_pretrained("microsoft/layoutlm-base-uncased")

words = ["Hello", "world"]

normalized_word_boxes = [[637, 773, 693, 782], [698, 773, 733, 782]]

token_boxes = []

for word, box in zip(words, normalized_word_boxes):

word_tokens = tokenizer.tokenize(word)

token_boxes.extend([box] * len(word_tokens))

token_boxes = [[0, 0, 0, 0]] + token_boxes + [[1000, 1000, 1000, 1000]]

encoding = tokenizer(" ".join(words), return_tensors="pt")

input_ids = encoding["input_ids"]

attention_mask = encoding["attention_mask"]

bbox = torch.tensor([token_boxes])

# Example document label (e.g., 1 for "invoice")

sequence_label = torch.tensor([1])

outputs = model(

input_ids=input_ids,

bbox=bbox,

attention_mask=attention_mask,

labels=sequence_label,

)

loss = outputs.loss

logits = outputs.logits

Why a powerful model (and code) is just the starting point

As you can see from the code, even a simple example requires significant setup: OCR, bounding box normalization, tokenization, and managing tensor shapes. This is just the tip of the iceberg. When you try to build a real-world business solution, you run into even bigger challenges that the model itself can't solve.

Here are the missing pieces:

- Automated import: The code doesn't fetch documents for you. You need a system that can automatically pull invoices from an email inbox, grab purchase orders from a shared Google Drive, or connect to a SharePoint folder.

- Document classification: Your inbox doesn't just contain invoices. A real workflow needs to automatically sort invoices from purchase orders and contracts before you can even run the right model.

- Data validation and approvals: A model won't know your company's business rules. You need a workflow that can automatically flag duplicate invoices, check if a PO number matches your database, or route any invoice over $5,000 to a manager for manual approval.

- Seamless export & integration: The extracted data is only useful if it gets into your other systems. A complete solution requires pre-built integrations to push clean, structured data into your ERP (like SAP), accounting software (like QuickBooks or Salesforce), or internal databases.

- A usable interface: Your finance and operations teams can't work with Python scripts. They need a simple, intuitive interface to view extracted data, make quick corrections, and approve documents with a single click.

Nanonets: The complete solution built for business

Nanonets provides the entire end-to-end workflow platform, using the best-in-class AI models under the hood so you get the business outcome without the technical complexity. We built Nanonets because we saw this exact gap between powerful AI and a practical, usable business solution.

Here’s how we solve the whole problem:

- We're model agnostic: We abstract away the complexity of the AI landscape. You don't need to worry about choosing between LayoutLMv3, Florence-2, or another model; our platform automatically uses the best tool for the job to deliver the highest accuracy for your specific documents.

- Zero-fuss workflow automation: Our no-code platform lets you build the exact workflow you need in minutes. For our client Hometown Holdings, this meant a fully automated process from ingesting utility bills via email to exporting data into Rent Manager, saving them 4,160 employee hours annually.

- Instant learning & reliability: Our platform learns from every user correction. When a new document format arrives, you just correct it once, and the model learns instantly. This was crucial for our client Suzano, who had to process purchase orders from over 70 customers in hundreds of different templates. Nanonets reduced their processing time from 8 minutes to just 48 seconds per document.

- Domain-specific performance: Recent research shows that pre-training models on domain-relevant documents significantly improves performance and reduces errors. This is exactly what the Nanonets platform facilitates through continuous, real-time learning on your specific documents, ensuring the model is always optimized for your unique needs.

Getting started with LayoutLM (without the PhD in machine learning)

If you're ready to implement LayoutLM but don't want to build everything from scratch, Nanonets offers a complete document AI platform that uses LayoutLM and other state-of-the-art models under the hood. You get:

- Pre-trained models for common document types

- No-code interface for training custom models

- API access for developers

- Human-in-the-loop validation when needed

- Integrations with your existing tools

The best part? You can start with a free trial and process your first documents in minutes, not months.

FAQs

What is the difference between LayoutLM and traditional OCR?

Traditional OCR (Optical Character Recognition) converts a document image into plain text. It extracts what the text says but has no understanding of the document's structure. LayoutLM goes a step further by combining that text with its visual layout information (where the text is on the page). This allows it to understand context, like identifying that a number is a "Total Amount" because of its position, which traditional OCR cannot do.

How do I use LayoutLM with Hugging Face Transformers?

Implementing LayoutLM with Hugging Face requires loading the model (e.g., LayoutLMForTokenClassification) and its tokenizer. The key step is providing bbox (bounding box) coordinates for each token along with the input_ids. You must first use an OCR tool like Tesseract to get the text and coordinates, then normalize those coordinates to a 0-1000 scale before passing them to the model.

What accuracy can I expect from LayoutLM and newer models?

Accuracy depends heavily on the document type and quality. For well-structured documents like receipts, LayoutLM can achieve F1-scores up to 95%. On more complex forms, it scores around 79%. Newer models can offer higher accuracy, but performance still varies. The most crucial factors are the quality of the scanned document and how closely your documents match the model's training data. Recent studies show that fine-tuning on domain-specific documents is a key factor in achieving the highest accuracy.

How does this technology improve invoice processing?

LayoutLM and similar models automate invoice processing by intelligently extracting key information like vendor details, invoice numbers, line items, and totals. Unlike older, template-based systems, these models adapt to different layouts automatically by leveraging both text and positioning. This capability dramatically reduces manual data entry time (we have brought down time required from 20 minutes per document to under 10 seconds), improves accuracy, and enables straight-through processing for a higher percentage of invoices.

How do you fine-tune LayoutLM for custom document types?

Fine-tuning LayoutLM requires a labeled dataset of your custom documents, including both the text and precise bounding box coordinates for each token. This data is used to train the pre-trained model to recognize the specific patterns in your documents. It's a powerful but resource-intensive process, requiring data collection, annotation, and significant computational power for training.

How do you fine-tune LayoutLM for custom document types?

Fine-tuning LayoutLM requires a labeled dataset of your custom documents, including both the text and precise bounding box coordinates for each token. This data is used to train the pre-trained model to recognize the specific patterns in your documents. It's a powerful but resource-intensive process, requiring data collection, annotation, and significant computational power for training.

What accuracy rates can I expect from LayoutLM on different document types?

LayoutLM achieves varying accuracy rates depending on document structure and complexity, with performance typically ranging from 85-95% for well-structured documents like forms and invoices, and 70-85% for more complex or unstructured documents.

The model performs exceptionally well on the FUNSD dataset (form understanding), achieving around 79% F1 score, and on the SROIE dataset (receipt understanding), reaching approximately 95% accuracy for key information extraction tasks. Document classification tasks generally see higher accuracy rates (90-95%) compared to token-level tasks like named entity recognition (80-90%), while performance can drop significantly for poor quality scanned documents, handwritten text, or documents with unusual layouts that differ substantially from training data.

Factors affecting accuracy include document image quality, consistency of layout structure, text clarity, and how closely the target documents match the model's training distribution.