In several organizations, details from documents like Passports and ID Cards are often manually noted down or captured to submit copies for KYC tasks. To digitalize these, the reviewers have to manually verify and then type down information like name, address, and enter it into databases or ERP systems, which is a hectic and time-consuming task. Hence, to make this process efficient, most of the people use Optical Character Recognition (OCR).

If you're not aware of this, think of it as a computer algorithm that can read images of typed or handwritten text into text format. In this blog, we'll be discussing how developers and companies can automate information extraction for KYC documents, including reading complex fields like mrz code Additionally, we’ll also review the challenges that need to be addressed during the process to create an MRZ passport reader. Lastly, we'll discuss how these OCR models can be trained more efficiently and use them as an id/passport scanner online API within your app using Nanonets. Below are the table of contents.

Working of Traditional OCR

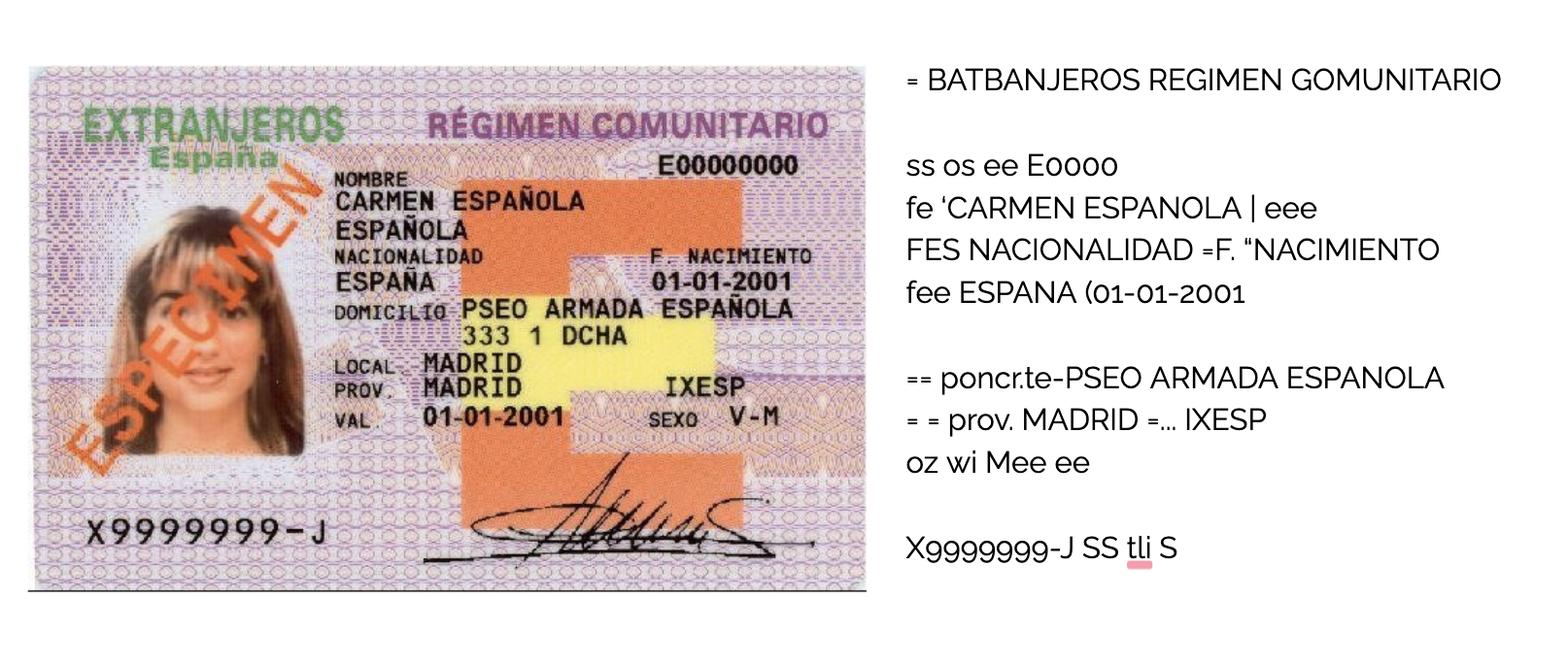

The OCR techniques are not new, but they have been continuously evolving with time. Out of these, one popular and commonly used OCR engine is Tesseract. It's an open-source python-based software developed by Google. However, even popular tools like Tesseract fail to extract text in some complex scenarios. They blindly extract text from given images without any processing or rules. For example, consider our problem of extracting information from Passports and ID cards, here we'll be needing only a few essential attributes like Name, ID Number, Sex, Age and Address. The rest all can be ignored. Nevertheless, OCRs are not intelligent; their only goal is to extract text wherever identified. To make this more simple for you here is an output of Tesseract OCR when performed to a driving ID.

Alright, it's now evident that OCR alone cannot perform efficiently for processing different documents types. Hence, we'll have to make use of machine learning and deep learning algorithms to make OCR more intelligent. These algorithms should help OCR for key-value pair extraction, image augmentation (making sure the scanned images are consistent and aligned correctly), multilingual text extraction etc. In the next section, we’ll look into the key-value pair extraction in documents.

Key-Value Pair Extraction for Passports and ID Cards

Key-value pair extraction is a technique used commonly for documents to identify the location of particular fields in form documents from block objects and then later stored in a map. For example, consider the Driving ID. Here, there are specific fields where labels like DOB, ISS, Street Address, Sex, Eyes etc. Now, the goal of key-value pair extraction is to identify these labels (Keys) and the values associated with it. We'll also have to make sure that this algorithm is applicable for different templates, as in the same algorithm should be appropriate for documents of other formats. Here's a sample JSON output where OCR is applied after extracting the keys and the values.

{

"predictions": [

{

"label": "Name",

"ocr_text": "JOHN A ",

},

{

"label": "DOB",

"ocr_text": "01/06/1958 ",

},

{

"label": "Address",

"ocr_text": "123 STREET ADDRESS YOUR CITY WA 99999-1234 ",

},

{

"label": "Sex",

"ocr_text": "M ",

},

{

"label": "LicenseNo",

"ocr_text": "WDLABCD456DG 1234567XX1101 ",

}

]

}

Now, here comes the question! How to build a state of the art information extraction (IE) algorithm. Being honest, it requires a lot of expertise as well as experimenting in fields of deep learning. However, there are a few Neural Network Architectures (deep learning models) like CUTIE, Attention OCR and Graph Neural Networks to achieve this. Still, these require a vast pipeline right from settings up data to deploying them into production. We can also use Named Entity Recognition algorithms and Natural Language Processing (NLP) based solution for key-value extraction.

For passports, we can extract most of the key-value pairs like Name, ID, DOB easily except for the MRZ code. Let's see what is about and how we can extract it?

What is MRZ Code on Passport?

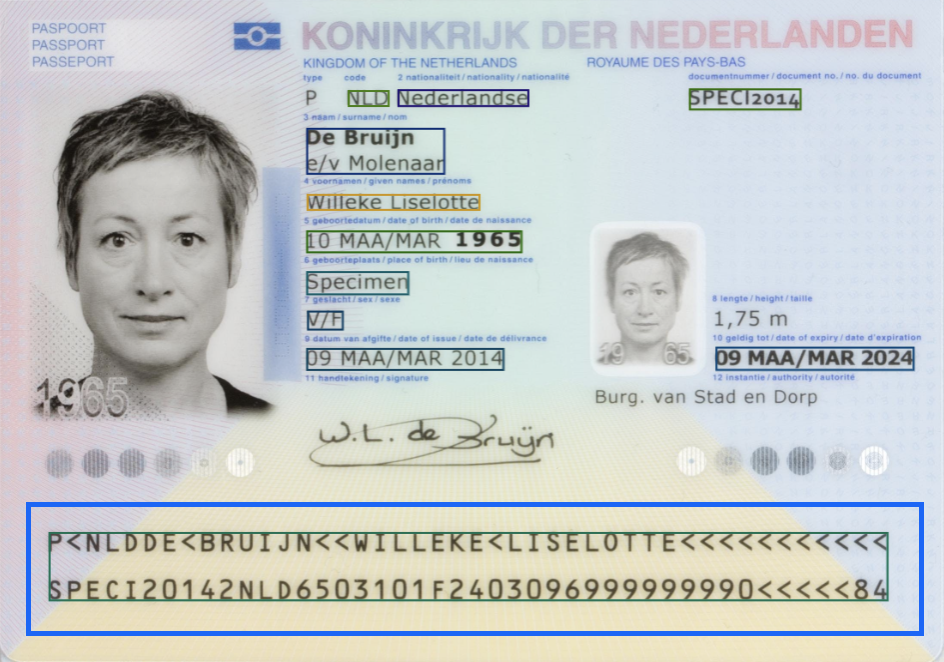

The MRZ region in passports or travel cards that contain either 30 or 44 characters. These unique MRZ codes on passports encodes identifying information of a given citizen, including the type of passport, passport ID, issuing country, name, nationality, expiration date, etc. Below is a screenshot of a passport with MRZ code.

Many solutions or algorithms do not propose extracting these MRZ code, in the next sections, we'll see how Nanonets extract complex fields like MRZ these field with ease.

In the next section, let’s discuss a few commonly encountered problems and challenges for IE tasks.

Try Nanonets to extract information from identity documents and automate the process!

Drawbacks and Challenges

Sometimes while scanning images of ID cards or Passports, we encounter different issues like capturing in wrong angles or dim lighting conditions. Also, after they are captured, it's equally important to check if they are original or faked. In this section, we'll discuss these critical challenges and how they can be addressed.

Improper Scanning: This is one of the most common problems while we are doing OCR. For high-quality scanned and aligned images, the OCR has a high accuracy of producing entirely searchable editable files. However, when a scan is distorted or when the text is blurred, OCR tools might have difficulty reading it, occasionally making inaccurate results. To overcome this, we must be familiar with techniques like image transforms and de-skewing, which help us align the image in a proper position.

Fraud & Blurry Image Checks: Documents, like are Passports and ID cards, are often used for KYC verifications. Hence, if a machine-based solution is used for automation, it's vital to check if they are original or fake by the machine itself. These are some of the traits which can help us check if the image is fake or not.

- Identify backgrounds for bent or distorted parts.

- Beware of low-quality images.

- Check for blurred or edited texts.

One algorithm that's familiar to overcome this task is the "Variance of Laplacian." It helps us find and examine the distribution of low and high frequencies in the given image.

Multilingual OCR: Passports and ID cards exist in various languages and are typed in different fonts based on the origin of the country. Hence, we'll have to make sure to train the OCR with different languages.

Complicated right? Don't worry, we at Nanonets make your work much more comfortable with our ready to deploy state of the art OCR and Deep Learning Algorithms. Irrespective of templates, fonts and language our models perform information extraction tasks on scanned Passports, ID Cards, Driving Licences within no time. The outputs from these models can be directly integrated to ERP or KYC systems without any human intervention. Let's see their performance in a few examples in the next section.

Enter Nanonets

The Nanonets OCR API allows you to build OCR models with ease. You can upload your data, annotate it, set the model to train, and wait for getting predictions through a browser-based UI without writing a single line of code, worrying about GPUs or finding the right architectures for your deep learning models.

You can try it on your own for free from here.

Here are some of the outputs that are generated using the Nanonets API. The Key-Value pairs are highlighted using bounding boxes. It’s hard to see them as they are very precisely marked as the key-value pairs.

Nanonets helps you extract data from different ranges of IDs and passports, irrespective of language and templates. Our OCR API can readily identify the following fields in any desired outputs like CSV, Excel, JSON.

- Full name

- Nationality

- Date of birth

- Birthplace

- MRZ code

- Gender

- Date of issue

- Expiry date

- Height

- Document number

- Crop of the passport photo

- Personal identification number

You can also use the Nanonets-OCR API by following the steps below:

- Create a Free Nanonets Account: Create your free Nanonets account and search for Driver License or Passports Models under My Models tab in your dashboard.

- Upload your Images: Next, choosing the model, you can add your ID Cards or passports to your dashboard. Immediately after a couple of seconds, Nanonets will draw bounding boxes of the important key-value pairs using state of the art deep learning algorithms.

- Verify your Images: You can also verify your images, in case if they are not identified as expected. But, don’t worry, our models learn from the verified images.

- Integrate your Model: Lastly, Nanonets can also be integrated into your software or as an SDK on mobile devices. You can find the API’s under the integrate tag. You can also export the extracted data in your desired format.

Data Privacy

We at Nanonets take data privacy and security very seriously. We are following access management and restrictions based on the need-to-know principle. The non-disclosure agreements bound all our employees and contractors. When we transmit data, we use encrypted communication channels (SSL/TLS encryption). We store and process Customer data only with the three major cloud platform providers - Amazon AWS, Google Cloud, and Microsoft Azure.

Nanonets OCR API has many interesting use cases that could optimize your business performance, save costs and boost growth. Find out how Nanonets' use cases can apply to your product.

Further Reading

- High-speed OCR algorithm for portable passport readers

- An Examination of Character Recognition on ID card using Template Matching Approach

- Passport MRZ reading with Tesseract.js OCR library