Top 10 Web Scraping Tools in 2024

A small e-commerce company ran a web scraping script to monitor competitor prices every day. One day, a significant drop in prices was observed on a competitor's website. Using this insight, they quickly adjusted the prices on their website, launched a quick campaign, & promoted the discounts on social media. This highlights the crucial impact of web scraping tools on driving business growth by leveraging competitive advantage.

Web pages contain a lot of relevant data that needs to be extracted for multiple use cases, like monitoring competitors’ products and prices, analyzing marketing trends, scraping data for real estate listings, flight & hotel tickets, job listings, and more. This can be done using web scraping. Web scraping tools simplify the process of extracting this data from websites.

Web scraping tools help save a lot of time and effort compared to manually scraping webpages. Choosing the right tool for your use case is very important. That’s why you need to do a thorough analysis of the prospective tools to evaluate them on multiple parameters.However, this process can be time-consuming and confusing, given the multitude of tools available in the market.

We’ll explore the top web scraping tools for scraping data from web pages easily and efficiently.

Here are the top 10 web scraping tools featured in this article:

- Nanonets Web Scraping Tool

- Smartproxy

- Scraper API

- Web Scraper

- Grepsr

- ParseHub

- Scrapy

- Mozenda

- Dexi

- ScrapingBee

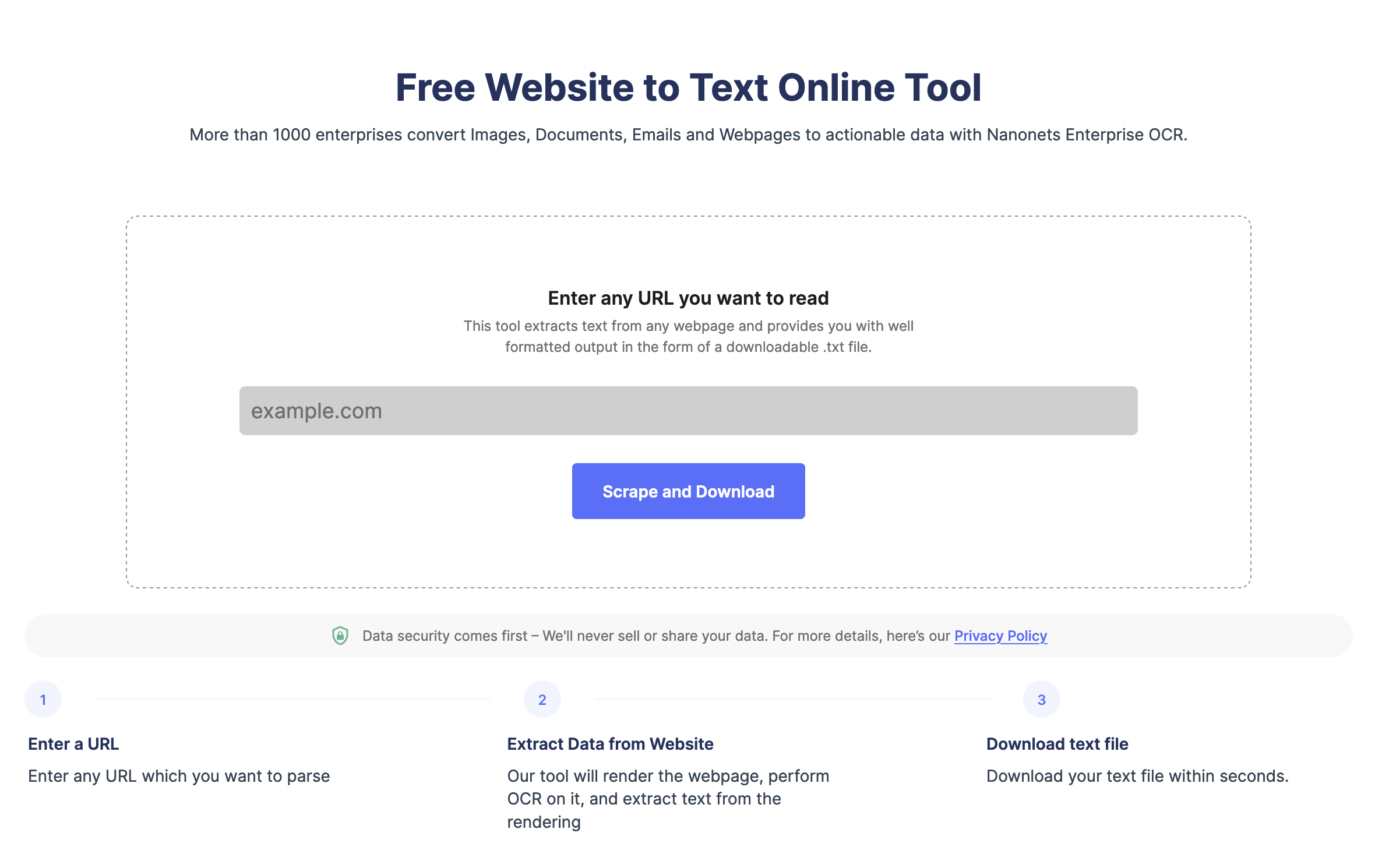

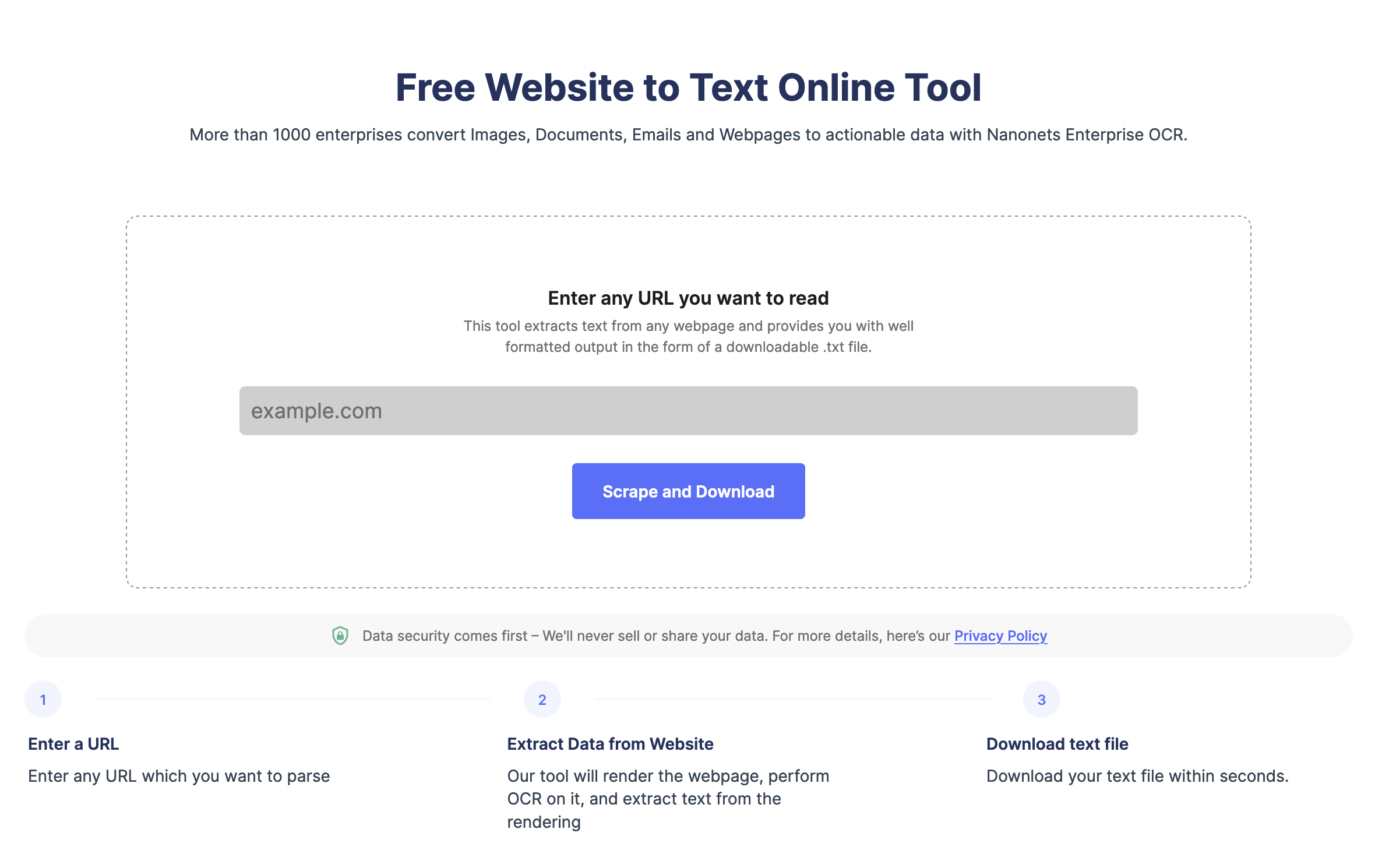

Extract text from any webpage in just one click. Head over to Nanonets website scraper, Add the URL and click "Scrape," and download the webpage text as a file instantly. Try it for free now.

What is Web Scraping?

Web scraping is the process of collecting/extracting data from websites and storing it in a useful form for your business. Web scrapers need to be versatile, as they can be used for extracting different types of data from different types of resources. Web scraping can be as simple as copying and pasting the data from any website onto an Excel sheet or as complex as extracting data from multiple websites with different web structures that might contain anti-scraping mechanisms like CAPTCHAs or IP restrictions. This is why understanding your requirements before choosing the right web scraping tool is equally important.

Importance of Scraping Web Data?

Some of the top use cases where web scraping is needed are:

1. Competitive Pricing and Product Monitoring: This is mostly required in e-commerce where tracking and monitoring product information and pricing data might provide a competitive advantage.

2. Real Estate: To extract real estate listing data from aggregator websites to identify investment opportunities

3. Market Research and Analysis: Can be used for gathering customer reviews and ratings to understand consumer sentiment and preferences.

4. SEO & Marketing: Monitoring search trends for queries and analysing competitors’ SERP presence for improving SEO strategy

5. Job Market Analysis: Used for extracting data from various job boards and company websites to analyze demand for different roles and skills. Can also be used for understanding compensation trends.

How does a Web Scraping tool work?

A web scraping tool, or data scraping tool, extracts the data present on a website by following a set of common principles. These principles are:

- Providing the Target URL: You can either manually enter the URL or automate the process using a script.

- Sending the HTTP request: web scraping tool will then send the HTTP request to access the website’s server to fetch the content. Once the request goes through, the scraper will be able to access the page content.

- Parsing HTML content: The web scarper then parses the data to extract important information by navigating the HTML structure of the webpage.

- Extracting Data: Once the desired content is located, the scraper extracts the data contained within it. This could be text, links, images, or any other type of content present on the page.

- Storing Data: The extracted data is then sent to be stored in a structured format, such as a database, CSV file, or JSON file. This makes it easy to access and analyze the data later.

Scrape any webpage in seconds using the Nanonets website scraping tool. It's free. Try now.

How to choose the right tool for your Web Scraping needs?

Choosing the ideal web scraping tools for your use case will depend on evaluating the tool across various parameters to align with your specific needs. Here are the top parameters you need to consider while choosing the web scraping tool:

- Data Type: Determine the type of data that you need to scrape, like text (in the .html or plain text format), tables (.json, .csv, or .xlsx format), images (in the jpeg, png or webp format) or may be date & time data (in the ISO 8601 format)

- Scraping Frequency: Decide how often you need to scrape the data (example: hourly, daily or weekly)

- Volume of Data: Evaluate the amount of data you need to scrape from the website.

- Website Complexity: Consider whether the target website uses static HTML, dynamic pages that contain JavaScript, or pages that require login or authentication.

- Extent of Scraping: Determine if you need to extract data from only a few elements or pages or from the entire website.

- Ease of Use: Explore tools with good user interface or intuitive scripting language if you have little to no coding ability.

- Customizability: Ensure the tool allows for customization to handle complex scraping tasks and adapt to changes in website structure.

- Speed: Evaluate the tool’s performance in terms of scraping speed and efficiency.

- Anti-Scraping Measures: Choose a tool that can bypass/solve CAPTCHAs & supports proxy rotation for IP blockers

- Learning Curve: Evaluate a tool based on how easy it is to learn and use the tool. Also, consider how much effort it will take for you to maintain the scraping setup.

- Budget: Compare the costs of different tools, including any subscription or usage fees.

By evaluating these factors carefully, you can choose a tool that best fits your requirements and ensures that you possess efficient web scraping capabilities.

Now let’s get into the best Web scraping tools:

Top 10 Web Scraping Tools

The best web scraper tools are:

1. Nanonets Web Scraping Tool

Nanonets is a leading AI-based OCR and document processing platform, it leverages machine learning to recognize text & patterns in images and documents. With a powerful OCR API, Nanonets has the ability to scrape webpages with 100% accuracy. Nanonets’ ability to automate web scraping using automated workflows sets it apart from other tools present in this list.

Users can set up workflows to automatically scrape webpages, format the extracted data and then export the scraped data to 500+ integrations at a click of a button.

Key Features of Nanonets:

- Real-time data extraction from any kind of webpage

- Extracts HTML tables with high accuracy

- Automatic Data formatting

- Multiple export options.

Ideal for:

- Scraping Financial Statements and Reports: Nanonets can be used to extract data from financial reports and statements.

- Legal Document Extraction: Nanonets can scrape and extract data from legal documents.

Pricing: Use free version for extracting website data into a downloadable .txt file For scraping Tables, Images, forms & more: Request a Demo

Pros & Cons of Nanonets:

Pros:

- 24x7 live support

- Can handle data extraction from all types of webpages - Java, Headless or Static Pages

- No-code user interface

- Highly efficient workflow automation capabilities

Cons:

- Can’t Scrape Videos

2. Smartproxy

Smartproxy is a proxy service provider and web data collection platform, it possesses some decent web scraping capabilities to extract data and content from websites. It provides the data in the form of raw HTML from websites. It accomplishes this task by sending an API request. Not only this, but this tool also keeps on sending requests so that the data or content required by the company should be extracted with utmost accuracy.

Key Features of Smartproxy:

- Provides real-time data collection

- Provides real-time proxy-like integration

- Data extracted in raw HTML

Ideal for:

Smartproxy is primarily a Residential proxy tool, but it also has a decent capability for scraping Social Media, SERP & e-commerce data.

Pricing: Free Trial available for up to 1000 requests Web Scraping API Pricing ranges from $2 per 1000 requests to $0.8 per 1000 requests

Pros & Cons of Smartproxy:

Pros:

- Global proxies power this tool.

- Provides live customer support to the users

- No CAPTCHAs as it comes with advanced proxy rotation

Cons:

- Sometimes email support is slow

- It does not allow for web elements to be rendered

- Expensive plan

- Should incorporate more auto extractors

- Requests could get a timeout

3. Scraper API

ScraperAPI is a web scraping tool that handles proxies, CAPTCHAs, and browser automation, allowing users to scrape websites efficiently and at scale. It allows easy integration; you just need to get a request and a URL. Users can also explore more advanced use cases in the documentation.

Features of Scraper API:

- Allows easy integration

- Allows users to scrape JavaScript-rendered pages as well

Ideal for:

Scraper API is ideal for individuals/enterprises that are looking to scrape websites with strict proxy blockers & other Anti-scraping measures like Amazon, Walmart, Google, etc.

Pricing: starts from $49/month

Pros & Cons of Scraper API

Pros:

- Easy to use

- Completely customizable

- It is fast and reliable

Cons:

- There are some websites where this tool does not function

- Expensive: features, such as javascript scraping, are very expensive

- Plan Scalability

- While calling the API, the headers of the response are not there

4. Web Scraper

Web scraper started as a browser extension for chrome, they are now also a cloud-based web scraping platform. It has an easy-to-use interface, so it can also be used by beginners.

Features of Web Scraper:

- It enables data extraction from websites with categories and sub-categories

- Modifies data extraction as the site structure changes

Ideal for:

Webscraper.io is ideal for individuals and enterprises looking to simplify advanced and complex web scraping tasks by allowing users to extract data through point-and-click methods, select text, and replicate similar results. It also excels in extracting data from dynamic sites with multiple levels of navigation.

Pricing: Free browser extension, but highly restricted in capabilities. Paid plan starts from $50/month

Pros & Cons of Web Scraper

Pros:

- It is a cloud-based web scraper

- Extracted data is accessible through the API

Cons:

- Should provide extra credits in the trial plan

- Expensive for small users

- Internal server issues

- Website response is very slow sometimes

- It should include more video documentation.

5. Grepsr

Grepsr is a data extraction and web scraping platform that automates data collection from websites, providing structured data for analysis and business use.

Ideal for:

GREPSR simplifies the web scraping process with its intuitive interface and flexible features. It is designed to handle both straightforward and complex data extraction tasks, making it a versatile choice for e-commerce monitoring and data collection for research purposes.

Pricing: starts @ $350

Pros & Cons of Grepsr

Pros:

- It supports multiple output formats.

- Provided the service of unlimited bandwidth

Cons:

- Sometimes it can be inconvenient to extract data

- Being in a different timezone can lead to latency

- There are errors while extracting the data

- Sometimes the request gets timed out

- Sometimes data needs to be re-processed due to inconsistency.

6. ParseHub

ParseHub is a famous web scraping tool that has an easy-to-use interface. It provides an easy way to extract data from websites. Moreover, it can extract the data from multiple pages and interact with AJAX, dropdown, etc.

Features of ParseHub:

- Allows data aggregation from multiple websites

- REST API for building mobile and web apps

Ideal for:

ParseHub web scraping tool has an intuitive user interface which makes it a very good choice for people who don’t possess good coding skills. Ideal for Marketers, Students & Small Business owners

Pricing: Free for 200 pages

Standard plan: $189/month

Professional plan: $599/month

Pros & Cons of ParseHub

Pros:

- Easy-to-use interface

- Beginner friendly

Cons:

- Only available as a desktop app

- Users face problems with bugs

- Expensive

- Low limit on the Free Version

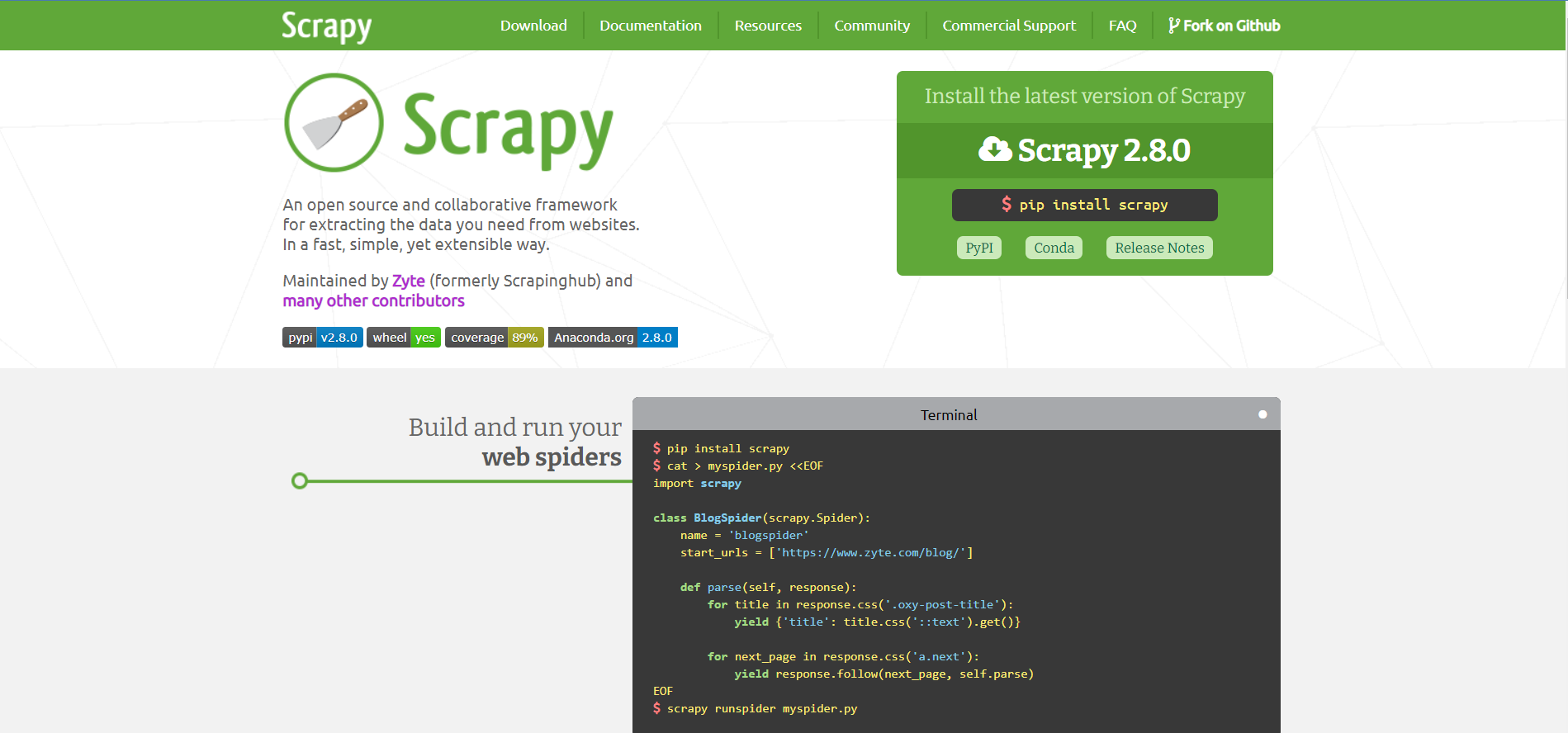

7. Scrapy

Scrapy is an open-source web scraping framework designed for Python, that allows users to extract data from different websites. It works on Mac, Windows, Linux, and BSD.

Features of Scrapy:

- This tool is easily extensible and portable.

- Helps to create own web spiders.

- These web spiders can be deployed to Scrapy cloud or servers.

Ideal for:

Scrapy is an efficient framework for developers and data engineers who need to build custom web scrapers, handle large-scale data extraction, and manage complex data scraping projects.

Pricing: Free

Pros & Cons of Scrapy

Pros:

- Scalability

- Decent customer support

Cons:

- Expensive

- Steep learning curve

- Lack of monitoring and alerting system

- logging system is inconvenient to use

8. Mozenda

Mozenda is a web scraping tool that also provides data harvesting and wrangling services. It has a user-friendly interface with advanced data extraction capabilities and is available on both cloud and on-premise.

Features of Mozenda:

- This tool helps to accomplish simultaneous processing

- Data collection can be controlled through API

- It allows data scraping for websites from several geographical locations.

- Provided the facility of email notifications.

Ideal for:

Mozenda is ideal for businesses and professionals needing robust data extraction, harvesting, and wrangling services with an easy-to-use interface

Pricing:

Project: $300/month Professional: $400/month

Enterprise: $450/month

High Capacity: $40K/year

Pros & Cons of Mozenda:

Pros:

- Cloud-based and on-premises solutions for data extraction

- Allows users to download files and images

- Excellent API features

Cons:

- Complicated scraping requirements that are hard to achieve

- It can be hard to find relevant documentation

- Hard to understand and use programming terms

- Does not provide enough testing functionality.

- Users may face RAM issues when dealing with huge websites.

9. Dexi

Dexi is a web scraping tool that lets users design scraping workflows using a visual editor, making data extraction from websites intuitive and customizable.

Features of Dexi:

- It allows data extraction from any site

- This tool has features for aggregating, transforming, manipulating, and combining data.

- It has tools for debugging.

Ideal for:

Dexi is ideal for users who want to design and automate web scraping workflows using a visual editor.

Pricing: Starts @$119/month

Pros & Cons of Dexi

Pros:

- This tool is easily scalable

- It supports many third-party services.

Cons:

- This tool is very complicated to understand

- It lacks some advanced functionality

- Documentation could be enhanced

- API endpoints are not available

- Non Intuitive UI UX

10. ScrapingBee

ScrapingBee is an effective web scraping tool that enables users to extract the desired data without having to deal with headless browsers and proxies. It is designed to provide hassle-free data collection.

Key Features of ScrapingBee:

- Handles headless browsers

- Automatic proxy rotation

- Simplified API integration

Ideal for:

ScrapingBee is ideal for businesses looking for a simple and scalable web scraping platform for handling proxies, and bypassing CAPTCHAs with minimal configuration.

Pricing: Offers competitive pricing plans with a free tier for small-scale projects.

Freelance: $49/mo

Startup: $99/mo

Business: $249/mo

Business: $599+/mo

Pros & Cons of ScrapingBee:

Pros:

- Easy to integrate and use

- Reliable data extraction with proxy management

- Good customer support

Cons:

- Limited customization options

- Limited concurrent scraping for lower plans

- Pricing can be high for large-scale projects

Comparing all the Web Scraping Tools

Here is a comparison table to compare the web scraping tools mentioned above:

| Tool | Key Features | Ideal Use Cases | Pricing | Pros | Cons |

|---|---|---|---|---|---|

| Nanonets | Real-time data extraction, OCR, workflow automation, HTML table extraction | Financial reports, legal documents, academic research, e-commerce, job listings | Free (basic), Demo for advanced | 24x7 live support, no-code interface, workflow automation | Cannot scrape images/videos |

| Smartproxy | Real-time data collection, proxy integration, raw HTML extraction | E-commerce, market research, SEO monitoring | Free trial (up to 1000 requests), $2-$0.8 per 1000 requests | Global proxies, no CAPTCHAs, live customer support | Slow email support, no web element rendering, expensive, potential timeouts |

| Scraper API | Easy integration, geo-located rotating proxies, scrape JavaScript-rendered pages | E-commerce, market research, real estate, SEO, news & media | Starts from $49/mo | Easy to use, customizable, fast, reliable | Costly, limited functionality on some websites, expensive JavaScript scraping |

| Web Scraper | Data extraction from websites with categories, sub-categories, cloud-based | E-commerce | Free extension, Paid plan from $50/mo | Cloud-based, API access | High pricing for small users, internal server errors, slow website response |

| Grepsr | Multiple output formats, unlimited bandwidth | E-commerce, job listings, real estate, marketing | Starts @ $350 | Supports multiple output formats, unlimited bandwidth | Inconvenient data extraction, latency due to timezones, inconsistent data |

| ParseHub | Data aggregation from multiple websites, REST API, easy-to-use interface | Data aggregation from multiple websites, building mobile/web apps | Free (200 pages), Standard:$189/mo, Professional: $599/mo | Easy-to-use interface, beginner-friendly | Desktop app, bugs, costly, low free version page limit |

| Scrapy | Open-source, extensible, supports multiple OS | Collaborative framework, creating web spiders | Free | Reliable, scalable, excellent support | Expensive, challenging for non-professionals, lack of monitoring/alerting |

| Mozenda | Simultaneous processing, API control, geographical data scraping | Marketing, finance | Project: $300/mo, Professional: $400/mo, Enterprise: $450/mo, High Capacity: $40K/year | Cloud-based/on-premises solutions, API features | Complicated requirements, hard documentation, RAM issues with large websites |

| Dexi | Data extraction, aggregation, debugging, data insights | Company decisions, data processing | Starts @ $119/mo | Easily scalable, supports third-party services | Complicated, lacks advanced functionality, non-intuitive UI/UX |

| ScrapingBee | Proxy Rotation, CAPTCHA handling, JavaScript Rendering | Developers & tech teams looking for easily configurable platform | Starts from $49/mo | Usability, Proxy Rotation & Customer Support | Limited Customization & Higher Pricing |

Conclusion

I’ve listed major web scraping tools here to automate web scraping easily. Web Scraping is a legally grey area, and you should consider its legal implications before using a web scraping tool.

Web scraping tools mentioned above can simplify scraping data from webpages easily. If you need to automate web scraping for larger projects, you can contact Nanonets.

We also have a free website scraping tool to scrape webpages instantly.

If you're tired of the monotony of manually typing out text from countless scanned documents, it's time to embrace the future with Nanonets' Workflow Automation. This innovative platform transforms your tedious tasks into streamlined workflows, allowing you to automate the extraction of data from documents with ease. Connect to your favorite apps and harness the power of AI to elevate your data handling efficiency. Dive into the world of seamless workflow automation and revolutionize your approach to document management at Nanonets' Workflow Automation.

FAQs

How do Web Scrapers Work?

The function of web scrapers is to extract data from websites quickly and accurately. The process of data extraction is as follows:

Making an HTTP request to a server

The first step in the web scraping process is making an HTTP request when a person visits a website. This means asking to access a particular site that contains the data. To access any site, web scraper needs permission, which is why the initial thing to do is send an HTTP request to the site from which there is a need for the content.

Extracting and parsing the website's code

After getting permission to access the website, the work of web scrapers is to read and extract the HTML code of that website. After this, the web scraping tools break the content down into small parts, also known as parsing. It helps to identify and extract elements such as text IDs, tags, etc.

Saving the relevant data locally

After accessing the HTML code, and extracting and parsing it, the next step is to save the data in a local file. The data is saved as a structured format in an Excel file.

Different Types of Web Scrapers

Web Scrapers can be divided based on several different criteria, such as:

Self-Built or Pre-Built Web Scrapers

To program a self-built web scraper, you need advanced knowledge of programming. So to build a more advanced web scraper tool, you need more advanced knowledge to function as per the company's requirements.

While pre-built web scrapers are developed and can be downloaded and operated on the go, it also contains advanced features that can be customized per the needs.

Browser Extension or Software Web Scrapers

Browser extensions web scrapers are easy to function as they can be added to your web browser. However, because these web scrapers can be integrated with the web browser, they are limited because any feature not in the web browser can't be operated on this web scraper.

On the other hand, software web scrapers are not limited to web browsers only. That means they can be downloaded on your PC. In addition, these web scrapers have more advanced features; that is, any feature outside your web browser can be accessed.

Cloud or Local Web Scrapers

Cloud Web Scrapers function on the cloud. It is basically an off-site server that the web scraper company itself provides. It helps the PC to not use its resources to extract data and thus accomplish other functions of the PC.

While local web scrapers function on your PC and use the local resources to extract data, in this case, the web scrapers require more RAM, thus making your PC slow.

What is Web Scraping used for?

Web Scraping can be used in numerous organizations. Some of the uses of web scraping tools are as follows:

Price Monitoring

Many organizations and firms use web scraping techniques to extract the data and price related to particular products and then compare it with other products to make pricing strategies. This helps the company fix the product price to increase its sales and maximize profits.

News Monitoring

Web scraping news sites help extract the data and content about the latest trends of the organization. The data and reports of the companies that are recently in trend are available, and this helps the organization plan its marketing methods.

Sentiment Analysis

To enhance the quality of the products, there is a need to understand the views and feedback of the customers. Due to this, sentiment analysis is done. Web scraping is used to make this analysis by collecting data from various social media sites about particular products. This helps the company to make changes in their products as per the wishes of the customers.

Market Research

Market research is another use of web scraping tools. It involves collecting extracted data in huge volumes to analyze customer trends. This helps them make such products to increase the customers' popularity.

Email Marketing

Web scraping tools are used for email marketing as well. This process involves collecting the email ids of the people from websites. Then the companies send the promotional ads to these email IDs. This has been proven an excellent marking technique in recent years.