LayoutLM Explained

What is document processing?

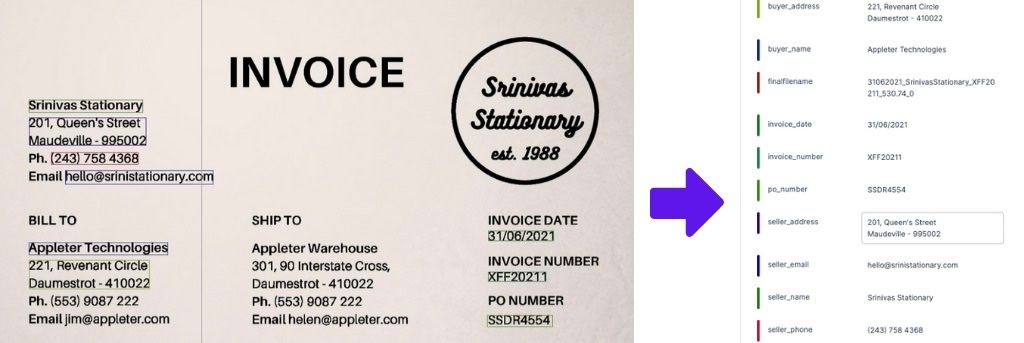

Document processing is the automated extraction of structured data from documents. This could be any kind of document, such as an invoice, a resume, or an ID card. The challenging part here is not just the OCR: many low-cost OCR tools can extract text and give you its location on the page. The real challenge is labeling these pieces of text accurately and automatically.

Business Impact of Document Processing

Several industries rely heavily on document processing for their day-to-day operations. Financial organizations need access to SEC filings and insurance filings, an e-commerce or supply chain company might need access to the invoices it handles, and the list goes on. The accuracy of this information is just as important as the time saved, which is why we always recommend advanced deep learning methods that generalize better and are more accurate.

According to this report by PwC [link], even the most rudimentary structured data extraction can save 30-50% of the employee time spent manually copying and pasting data from PDFs into Excel spreadsheets. Models like LayoutLM are certainly not rudimentary: they were built to extract data accurately at scale, across different use cases. With many of our own customers, we have brought the time required to extract data from a document down from 20 minutes to under 10 seconds. That is a massive shift, making workers more productive and raising overall throughput.

So where can AI similar to LayoutLM be applied? At Nanonets, we have used such technology for

and many other use cases.

Want to use deep learning for processing invoices? You can either sign up for free, or schedule a call with us here

Why LayoutLM?

How does a deep learning model understand whether a given piece of text is an item description in an invoice, or the invoice number? Put simply, how does a model learn how to assign labels correctly?

One method is to take text embeddings from a large language model like BERT or GPT-3 and run them through a classifier, although this is not very effective: there is a lot of information that one cannot gauge purely from text. Alternatively, one could make use of image-based information, which has been done with R-CNN and Faster R-CNN models. However, this still does not fully utilize the information available in documents. Another approach used Graph Convolutional Networks, which combined locational and textual information but did not take image information into account.

So how do we use all three kinds of information, i.e. the text, the image, and the location of the text? That is where models like LayoutLM come in. Although this had been an active area of research for many years, LayoutLM was one of the first models to successfully combine the pieces into a single model that performs labeling using positional, textual, and image information.

LayoutLM Tutorial

This article assumes that you understand what a language model is. If not, don’t worry, we wrote an article on that as well! If you would like to learn more about what transformer models are, and what attention is, here is an amazing article by Jay Alammar.

With that out of the way, let’s get started with the tutorial. We will use the original LayoutLM paper as our main reference.

OCR Text Extraction

The very first thing we do with a document is extract its text and find the location of each piece of text. By location, we mean a ‘bounding box’: a rectangle that encloses a piece of text on the page.

In most cases, the coordinate system is assumed to have its origin at the top-left corner of the page, with the positive x-axis pointing right, the positive y-axis pointing down toward the bottom of the page, and one pixel as the unit of measurement.
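As a minimal sketch, assuming an OCR engine that reports each word as (left, top, width, height) (Tesseract's TSV output, for example, has columns in this form), here is how such a box can be converted into the (xmin, ymin, xmax, ymax) corners used in the rest of this article. The `BoundingBox` class and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned box in page pixel coordinates (origin at top-left, y grows downward)."""
    xmin: int
    ymin: int
    xmax: int
    ymax: int

    @classmethod
    def from_left_top_width_height(cls, left, top, width, height):
        # Many OCR engines report a word's box as (left, top, width, height).
        return cls(left, top, left + width, top + height)

    def width(self):
        return self.xmax - self.xmin

    def height(self):
        return self.ymax - self.ymin


# A hypothetical OCR result for the word "Invoice".
box = BoundingBox.from_left_top_width_height(left=120, top=40, width=85, height=18)
print(box)  # BoundingBox(xmin=120, ymin=40, xmax=205, ymax=58)
```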

Language and Location Embeddings

Next, we make use of five embeddings. One encodes the language-related information, i.e. the text embedding.

The other four are location embeddings. If we know the values of xmin, ymin, xmax and ymax, we know the whole bounding box (if you cannot visualize it, here is a link for you). Each coordinate is passed through an embedding layer to encode location information; in the paper, the two x-coordinates share one embedding table and the two y-coordinates share another.

The five embeddings (one for the text and four for the coordinates) are then added up to form the input embedding that is passed through LayoutLM's Transformer layers. The output is referred to as the LayoutLM embedding.
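A minimal PyTorch sketch of this embedding sum follows. It assumes token ids and boxes are already integers on a 0-1000 scale and uses an illustrative hidden size of 64. Following the Hugging Face implementation of LayoutLM, one table is reused for both x-coordinates and one for both y-coordinates. The real model also adds 1-D position and segment embeddings (and, in the Hugging Face version, box width and height embeddings), which are omitted here.

```python
import torch
import torch.nn as nn


class TextLayoutEmbeddings(nn.Module):
    """Sums a word embedding with embeddings of the four box coordinates (simplified sketch)."""

    def __init__(self, vocab_size=30522, hidden_size=64, max_coord=1024):
        super().__init__()
        self.word = nn.Embedding(vocab_size, hidden_size)
        # Shared tables: xmin and xmax look up the same table, as do ymin and ymax.
        self.x = nn.Embedding(max_coord, hidden_size)
        self.y = nn.Embedding(max_coord, hidden_size)

    def forward(self, input_ids, bbox):
        # input_ids: (batch, seq_len); bbox: (batch, seq_len, 4) as (xmin, ymin, xmax, ymax)
        return (
            self.word(input_ids)
            + self.x(bbox[..., 0])
            + self.y(bbox[..., 1])
            + self.x(bbox[..., 2])
            + self.y(bbox[..., 3])
        )


emb = TextLayoutEmbeddings()
input_ids = torch.tensor([[101, 7953, 102]])  # hypothetical token ids
bbox = torch.tensor([[[0, 0, 0, 0], [70, 18, 120, 26], [1000, 1000, 1000, 1000]]])
out = emb(input_ids, bbox)
print(out.shape)  # torch.Size([1, 3, 64])
```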

Image Embeddings

Okay, so we have captured the text and location information by combining their embeddings and passing them through a language model. Now how do we bring image-related information into the picture?

While the text and layout information is being encoded, we process the document image in parallel using Faster R-CNN, an image model used for object detection. Using the bounding box of each word from the OCR results, the page image is split into pieces that correspond one-to-one with the words, and Faster R-CNN generates features for each of these image regions. These region features are then passed through a fully connected layer to produce image embeddings.

The LayoutLM embeddings as well as the image embeddings are combined to create a final embedding, which can then be used to perform downstream processing.
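The sketch below is a simplified illustration of this step, not the paper's implementation: a single convolution stands in for the Faster R-CNN backbone, each word's box is cropped from the resulting feature map and average-pooled, and a fully connected layer projects the pooled features to the hidden size. The page size, the boxes, and the choice to combine the two embeddings by addition are assumptions made for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class WordImageEmbeddings(nn.Module):
    """Crops each word's region from an image feature map, pools it, and projects it."""

    def __init__(self, hidden_size=64, channels=16):
        super().__init__()
        # Stand-in for the Faster R-CNN backbone (padding keeps the spatial size unchanged).
        self.backbone = nn.Conv2d(3, channels, kernel_size=3, padding=1)
        self.proj = nn.Linear(channels, hidden_size)  # fully connected projection

    def forward(self, page, boxes):
        # page: (3, H, W) image tensor; boxes: list of (xmin, ymin, xmax, ymax) in pixels
        features = self.backbone(page.unsqueeze(0))  # (1, C, H, W)
        pooled = []
        for xmin, ymin, xmax, ymax in boxes:
            region = features[:, :, ymin:ymax, xmin:xmax]  # crop the word's region
            pooled.append(F.adaptive_avg_pool2d(region, 1).flatten(1))  # (1, C)
        return self.proj(torch.cat(pooled, dim=0))  # (num_words, hidden_size)


page = torch.rand(3, 100, 300)  # hypothetical page image
boxes = [(10, 20, 60, 35), (70, 20, 140, 35)]
image_emb = WordImageEmbeddings()(page, boxes)
layoutlm_out = torch.rand(len(boxes), 64)  # stand-in for LayoutLM's per-word outputs
combined = layoutlm_out + image_emb  # combining by addition is an assumption
print(combined.shape)  # torch.Size([2, 64])
```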

Pre-training LayoutLM

All of the above makes sense only once we understand how LayoutLM was trained. After all, no matter what connections we establish in a neural network, it isn’t quite smart unless it is trained with the right learning objective. The authors of LayoutLM followed an approach similar to the one used for pre-training BERT.

Masked Visual Language Model (MVLM)

To help the model learn what text could appear at a given location, the authors randomly masked some of the text tokens while keeping their location embeddings. This takes LayoutLM beyond plain masked language modeling: to recover a masked token, the model has to associate language with layout.
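Here is a simplified Python sketch of how MVLM inputs can be built. It masks each token with an assumed probability of 15% (BERT's usual rate) and always replaces it with [MASK], leaving out BERT's 80/10/10 replacement rule, while every bounding box passes through unchanged. The [MASK] id 103 assumes BERT's uncased vocabulary, and -100 is the label value that PyTorch's cross-entropy loss ignores by default.

```python
import random

MASK_ID = 103  # [MASK] id in BERT's uncased vocabulary (assumption for this sketch)
IGNORE = -100  # label value skipped by PyTorch's CrossEntropyLoss by default


def mask_tokens(token_ids, boxes, mask_prob=0.15, seed=0):
    """Masks some tokens but keeps every bounding box, so the layout stays visible."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in token_ids:
        if rng.random() < mask_prob:
            inputs.append(MASK_ID)  # hide the text...
            labels.append(tok)      # ...and ask the model to recover it
        else:
            inputs.append(tok)
            labels.append(IGNORE)   # no loss on unmasked tokens
    return inputs, list(boxes), labels


# Hypothetical token ids, all sharing one normalized box for brevity.
inputs, boxes, labels = mask_tokens([2023, 2003, 2019, 7953], [[70, 18, 120, 26]] * 4, seed=42)
```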

Multi-label Document Classification (MDC)

Using all the information in the document to classify it into categories helps the model understand which information is relevant to a certain class of documents. However, the authors note that for larger datasets, document class labels may not be readily available. Hence, they report results for both MVLM-only pre-training and MVLM + MDC pre-training.
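A minimal sketch of a multi-label classification head follows, assuming a pooled document representation is already available. Because one document can belong to several categories at once, it uses an independent sigmoid per class with binary cross-entropy (`nn.BCEWithLogitsLoss`) rather than a softmax. This is the standard choice for multi-label problems; the exact head used in the paper may differ, and the class count and targets are hypothetical.

```python
import torch
import torch.nn as nn

num_classes = 4  # hypothetical document tags
doc_repr = torch.randn(2, 64)  # stand-in for two pooled document representations
classifier = nn.Linear(64, num_classes)

# Multi-label targets: each document may carry several tags at once.
targets = torch.tensor([[1., 0., 1., 0.],
                        [0., 1., 0., 0.]])

logits = classifier(doc_repr)
# One independent sigmoid + binary cross-entropy per class, instead of a shared softmax.
loss = nn.BCEWithLogitsLoss()(logits, targets)
predicted_tags = torch.sigmoid(logits) > 0.5  # boolean tag predictions per document
```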

Fine Tuning LayoutLM for Downstream Tasks

There are several downstream tasks that LayoutLM can be used for. We will discuss the ones the authors evaluated.

Form Understanding

This task entails assigning a label type to each piece of text, which lets us extract structured data from any kind of document. The final output, i.e. the LayoutLM embeddings combined with the image embeddings, is passed through a fully connected layer and then a softmax to predict the class probabilities for the label of each piece of text.
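The classification head described above can be sketched in a few lines of PyTorch. The token representations are random stand-ins for the combined LayoutLM and image embeddings, and the four FUNSD-style labels are used only for illustration.

```python
import torch
import torch.nn as nn

labels = ["O", "HEADER", "QUESTION", "ANSWER"]  # illustrative FUNSD-style label set
hidden_size = 64
token_repr = torch.randn(1, 5, hidden_size)  # stand-in for the final embeddings of 5 tokens

head = nn.Linear(hidden_size, len(labels))  # fully connected layer
probs = torch.softmax(head(token_repr), dim=-1)  # (1, 5, 4): class probabilities per token
predicted = [labels[i] for i in probs.argmax(dim=-1)[0].tolist()]
```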

Receipt Understanding

In this task, evaluated on the SROIE receipt dataset, the model had to fill in several predefined slots of information for each receipt (company, date, address, and total) by assigning the right pieces of text to their respective slots.

Document Image Classification

Information from the text and the image of the document is combined and passed through a softmax layer to predict the class of the document.

Huggingface LayoutLM

One of the main reasons LayoutLM gets discussed so much is that the model was open-sourced. It is available on Hugging Face, which makes using LayoutLM significantly easier.

Before we dive into the specifics of how you can fine-tune LayoutLM for your own needs, there are a few things to take into consideration.

Installing Libraries

To run LayoutLM, you will need the transformers library from Hugging Face, along with a deep learning backend such as PyTorch. If they are not already installed, you can install both with pip: `pip install transformers torch`.

On bounding boxes

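To create a uniform embedding scheme regardless of image size, the bounding box coordinates are normalized to a 0-1000 scale: each x-coordinate is divided by the page width, each y-coordinate by the page height, and the result is multiplied by 1000.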
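A small helper for this normalization, written as a sketch under the assumption that boxes arrive as pixel (xmin, ymin, xmax, ymax) values and that you know the page's width and height in pixels. The Hugging Face LayoutLM documentation uses a helper of this shape; the page size below is hypothetical.

```python
def normalize_bbox(bbox, width, height):
    """Scales pixel coordinates (xmin, ymin, xmax, ymax) to integers on a 0-1000 grid."""
    xmin, ymin, xmax, ymax = bbox
    return [
        int(1000 * xmin / width),
        int(1000 * ymin / height),
        int(1000 * xmax / width),
        int(1000 * ymax / height),
    ]


# A word box on a hypothetical 1700 x 2200 pixel scan.
print(normalize_bbox([120, 40, 205, 58], width=1700, height=2200))  # [70, 18, 120, 26]
```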

Configuration

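Using the transformers.LayoutLMConfig class, you can set the size of the model (number of layers, hidden size, attention heads, and so on). Since these models are typically heavy and need quite a bit of compute power, a smaller configuration might help you run it locally. Keep in mind that a custom-sized model starts from random weights; if you want pretrained weights, choosing the base checkpoint over the large one is the lighter option. You can learn more about the class here.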
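A sketch of a deliberately small configuration follows. The sizes are illustrative, not recommendations; `hidden_size` must be divisible by `num_attention_heads`, and the resulting model is randomly initialized, so it would need to be trained before it is useful.

```python
from transformers import LayoutLMConfig, LayoutLMModel

# Illustrative small sizes; the defaults match the much larger base model.
config = LayoutLMConfig(
    hidden_size=128,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=256,
)
model = LayoutLMModel(config)  # randomly initialized, no pretrained weights
num_params = sum(p.numel() for p in model.parameters())
print(f"{num_params:,} parameters")
```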

LayoutLM for Document Classification

If you want to perform document classification, you will need the class transformers.LayoutLMForSequenceClassification. The sequence here is the sequence of text that you have extracted from the document. The Hugging Face documentation on huggingface.co includes a short code sample that shows how to use it.
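Below is a sketch in the spirit of the Hugging Face sample, adapted so it runs offline: it builds a small, randomly initialized model from a `LayoutLMConfig` instead of downloading weights. The token ids, boxes, and label are hypothetical. Following the convention in Hugging Face's examples, the [CLS] token gets the box [0, 0, 0, 0] and the [SEP] token gets [1000, 1000, 1000, 1000]. In practice you would tokenize real OCR words and load a pretrained checkpoint such as `microsoft/layoutlm-base-uncased` with `from_pretrained`.

```python
import torch
from transformers import LayoutLMConfig, LayoutLMForSequenceClassification

# Small random model so the sketch runs offline; sizes and num_labels are illustrative.
config = LayoutLMConfig(hidden_size=64, num_hidden_layers=2, num_attention_heads=2,
                        intermediate_size=128, num_labels=3)
model = LayoutLMForSequenceClassification(config)

# Hypothetical token ids ([CLS] ... [SEP]) and their normalized (0-1000) boxes.
input_ids = torch.tensor([[101, 2023, 2003, 2019, 102]])
bbox = torch.tensor([[[0, 0, 0, 0],
                      [70, 18, 120, 26],
                      [125, 18, 160, 26],
                      [165, 18, 240, 26],
                      [1000, 1000, 1000, 1000]]])
attention_mask = torch.ones_like(input_ids)
labels = torch.tensor([1])  # hypothetical document class

outputs = model(input_ids=input_ids, bbox=bbox, attention_mask=attention_mask, labels=labels)
print(outputs.loss, outputs.logits.shape)  # logits have shape (1, 3)
```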

LayoutLM for Text Labeling

To perform semantic labeling, i.e. assign labels to different parts of the text in the document, you will need the class transformers.LayoutLMForTokenClassification. You can find more details on it here.
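A similar offline sketch for token classification follows. The label names, token ids, and boxes are hypothetical, and since the model is untrained, its predictions are arbitrary; the point is the shape of the output, one score per label for every token. With a real tokenizer, each word's box is typically repeated for every sub-word token the word is split into.

```python
import torch
from transformers import LayoutLMConfig, LayoutLMForTokenClassification

labels = ["O", "B-INVOICE_NO", "I-INVOICE_NO"]  # hypothetical label set
config = LayoutLMConfig(hidden_size=64, num_hidden_layers=2, num_attention_heads=2,
                        intermediate_size=128, num_labels=len(labels))
model = LayoutLMForTokenClassification(config)
model.eval()

input_ids = torch.tensor([[101, 7953, 2053, 102]])  # hypothetical token ids
bbox = torch.tensor([[[0, 0, 0, 0], [70, 18, 120, 26],
                      [125, 18, 160, 26], [1000, 1000, 1000, 1000]]])

with torch.no_grad():
    logits = model(input_ids=input_ids, bbox=bbox).logits  # (batch, seq_len, num_labels)
predictions = [labels[i] for i in logits.argmax(dim=-1)[0].tolist()]
print(predictions)  # arbitrary labels, since the model is untrained
```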

Some Points to Note about Hugging Face LayoutLM

- Currently, Hugging Face's LayoutLM tooling uses the open-source Tesseract library for text extraction, which can be inaccurate on noisy or complex documents. You might want to consider a different, paid OCR tool like AWS Textract or Google Cloud Vision.

- The existing model only provides the language model, i.e. the LayoutLM embeddings, and not the final layers that combine visual features. LayoutLMv2 (discussed in the next section) uses the Detectron library to provide visual feature embeddings as well.

- Labels are classified at the word level, so it is up to the OCR engine to return all the words of a field as one contiguous sequence; otherwise, one field might be predicted as two.
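To see why word order matters, here is a hypothetical helper that merges consecutive words sharing a predicted field tag. It assumes a simple tag scheme (a field name per word, or "O" for words outside any field) rather than the B-/I- scheme many datasets use. When the OCR output places an unrelated word in the middle of a field, the field comes out in two pieces.

```python
def group_fields(words, tags):
    """Merges consecutive words that share a field tag; 'O' marks words outside any field."""
    fields, current_tag, current_words = [], None, []
    for word, tag in zip(words, tags):
        if tag == current_tag and tag != "O":
            current_words.append(word)  # same field continues
            continue
        if current_tag not in (None, "O"):
            fields.append((current_tag, " ".join(current_words)))  # close the previous field
        current_tag, current_words = tag, [word]
    if current_tag not in (None, "O"):
        fields.append((current_tag, " ".join(current_words)))
    return fields


# The OCR engine returned an unrelated word in the middle of an address.
words = ["221B", "Baker", "Phone", "Street"]
tags = ["ADDRESS", "ADDRESS", "O", "ADDRESS"]
print(group_fields(words, tags))  # [('ADDRESS', '221B Baker'), ('ADDRESS', 'Street')]
```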

LayoutLMv2

LayoutLM brought about a revolution in how data is extracted from documents. However, as is usual in deep learning research, it was soon improved upon: LayoutLM was succeeded by LayoutLMv2, in which the authors made a few significant changes to how the model was trained.

Including 1-D Spatial Embeddings and Visual Token Embeddings

LayoutLMv2 adds information about 1-D relative positions, as well as information about the image as a whole. This matters because of the new training objectives, which we will now discuss.

New Training Objectives

LayoutLMv2 included some modified training objectives. These are as follows:

- Masked Visual Language Modeling: This is the same as in LayoutLM.

- Text-Image Alignment: Some text lines were randomly covered on the document image, while their text tokens were still provided to the model. For each token, the model had to predict whether or not its text was covered in the image. Through this, the model learns to combine information from the visual and textual modalities.

- Text-Image Matching: The model is asked to check whether the given image corresponds to the given text. Negative samples either pair the text with an image from a different document or provide no image embeddings at all. This helps the model learn how the text and images are related.
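The two new objectives mostly change how training examples are labeled. The sketch below shows one way to build those labels; the input format, the probabilities, and the uniform choice between matching and negative pairs are assumptions, not the paper's settings. For Text-Image Alignment, we assume covering happens per text line.

```python
import random


def tia_labels(token_line_ids, num_lines, cover_prob=0.15, seed=0):
    """Text-Image Alignment: cover random text lines in the image and label each token.

    token_line_ids[i] is the index of the text line token i belongs to (hypothetical format).
    Returns the covered lines and a per-token label: 1 = covered, 0 = not covered.
    """
    rng = random.Random(seed)
    covered = {line for line in range(num_lines) if rng.random() < cover_prob}
    return covered, [1 if line in covered else 0 for line in token_line_ids]


def tim_pair(doc_id, image_ids, seed=0):
    """Text-Image Matching: pair text with its own image (label 1), another image, or none (label 0)."""
    rng = random.Random(seed)
    choice = rng.choice(["match", "other", "none"])  # uniform choice is an assumption
    if choice == "match":
        return doc_id, 1
    if choice == "other":
        return rng.choice([i for i in image_ids if i != doc_id]), 0  # a different document's image
    return None, 0  # no image embeddings at all
```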

Using these new methods and embeddings, LayoutLMv2 outperformed LayoutLM on almost all of the test datasets.